Multi-Armed Bandits with Correlated Arms

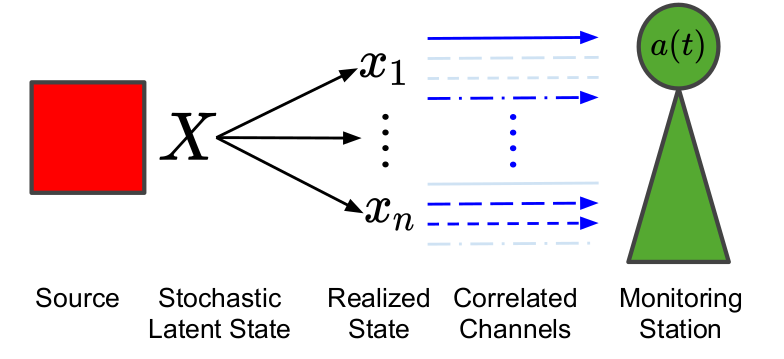

We consider a multi-armed bandit framework in which the rewards obtained by pulling different arms are correlated. We develop a unified approach to leverage these reward correlations and present fundamental generalizations of classic bandit algorithms to the correlated setting, together with a unified proof technique to analyze them. Rigorous analysis of C-UCB (the correlated-bandit version of Upper Confidence Bound) reveals that the algorithm pulls certain sub-optimal arms, termed non-competitive, only O(1) times, as opposed to the O(log T) pulls required by classic bandit algorithms such as UCB and Thompson Sampling (TS). We present a regret lower bound and show that, when arms are correlated through a latent random source, our algorithms obtain order-optimal regret. We validate the proposed algorithms via experiments on the MovieLens and Goodreads datasets and show significant improvement over classical bandit algorithms.
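For context on the O(log T) baseline mentioned above, the following is a minimal sketch of the classic UCB1 index policy on Bernoulli arms. It is not the C-UCB algorithm from the paper; the function name `ucb1`, the arm means, the horizon, and the exploration bonus `sqrt(2 ln t / n)` are illustrative assumptions chosen for this example.

```python
import math
import random


def ucb1(means, horizon, seed=0):
    """Run classic UCB1 on Bernoulli arms and return the pull count per arm.

    `means` are hypothetical true success probabilities, used only to
    simulate rewards; the policy itself never reads them.
    """
    rng = random.Random(seed)
    k = len(means)
    counts = [0] * k   # number of pulls of each arm
    sums = [0.0] * k   # cumulative reward of each arm

    for t in range(1, horizon + 1):
        if t <= k:
            # Pull every arm once so each empirical mean is defined.
            arm = t - 1
        else:
            # Index = empirical mean + exploration bonus.
            arm = max(
                range(k),
                key=lambda i: sums[i] / counts[i]
                + math.sqrt(2.0 * math.log(t) / counts[i]),
            )
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
    return counts


# Assumed example instance: arm 0 is optimal, arms 1 and 2 are sub-optimal.
counts = ucb1([0.9, 0.5, 0.4], horizon=5000)
print(counts)
```

Under classic UCB1, every sub-optimal arm keeps being sampled at a rate that grows logarithmically with the horizon. The abstract's claim is that C-UCB limits the pulls of non-competitive arms to a constant that does not grow with T.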

MovieLens
