MMKG: Multi-Modal Knowledge Graphs

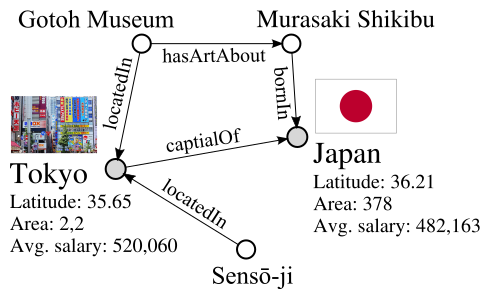

We present MMKG, a collection of three knowledge graphs that contain both numerical features and (links to) images for all entities as well as entity alignments between pairs of KGs. Therefore, multi-relational link prediction and entity matching communities can benefit from this resource. We believe this data set has the potential to facilitate the development of novel multi-modal learning approaches for knowledge graphs.We validate the utility ofMMKG in the sameAs link prediction task with an extensive set of experiments. These experiments show that the task at hand benefits from learning of multiple feature types.

PDF Abstract

FB15k

FB15k

DBpedia

DBpedia

YAGO

YAGO