MixFaceNets: Extremely Efficient Face Recognition Networks

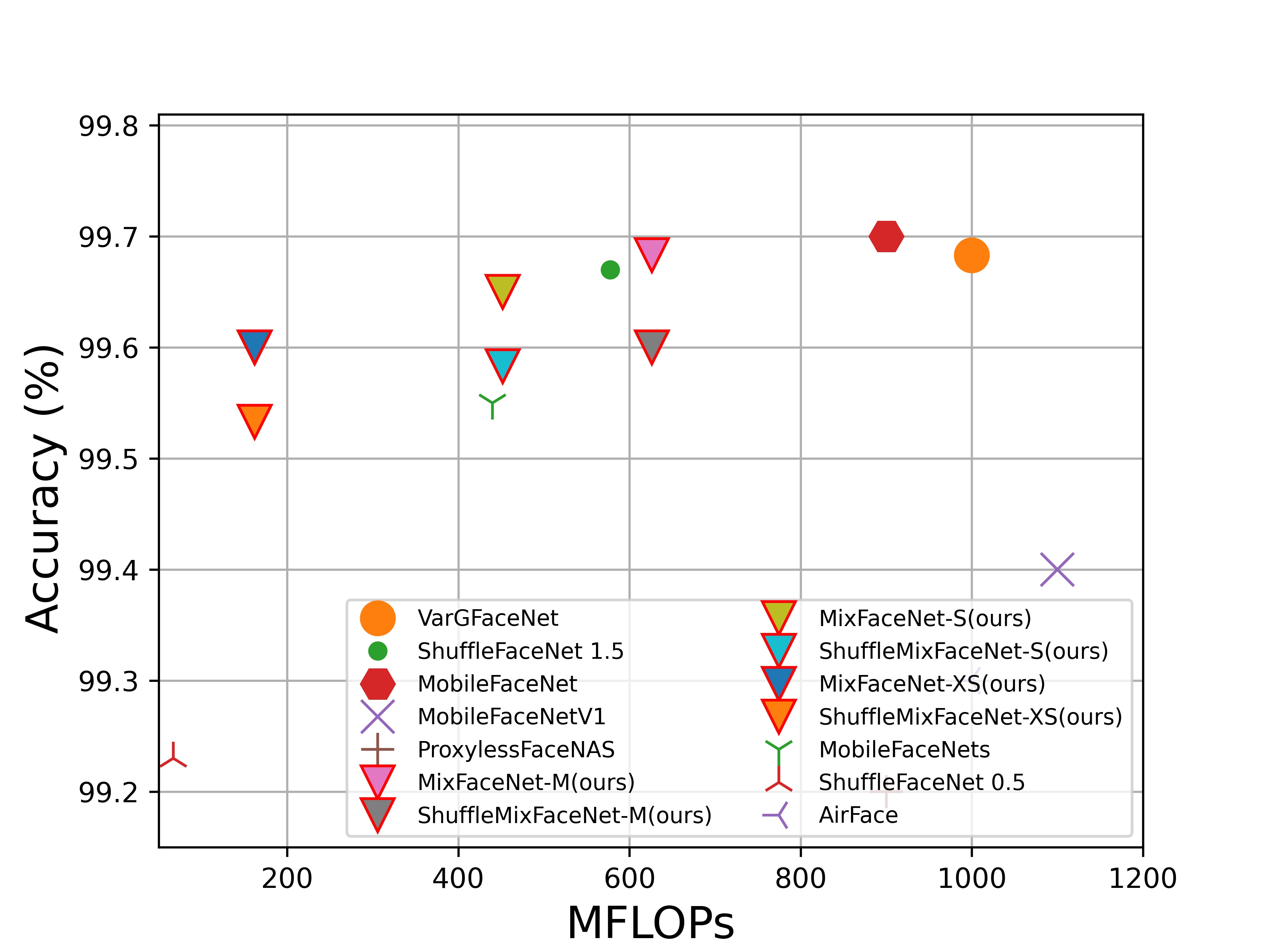

In this paper, we present MixFaceNets, a set of extremely efficient, high-throughput models for accurate face verification, inspired by Mixed Depthwise Convolutional Kernels. Extensive experimental evaluations on the Labeled Faces in the Wild (LFW), AgeDB, MegaFace, and IARPA Janus Benchmark IJB-B and IJB-C datasets show the effectiveness of our MixFaceNets for applications requiring extremely low computational complexity. At the same level of computational complexity (< 500M FLOPs), our MixFaceNets outperform MobileFaceNets on all the evaluated datasets, achieving 99.60% accuracy on LFW, 97.05% accuracy on AgeDB-30, 93.60 TAR (at FAR=1e-6) on MegaFace, 90.94 TAR (at FAR=1e-4) on IJB-B, and 93.08 TAR (at FAR=1e-4) on IJB-C. With computational complexity between 500M and 1G FLOPs, our MixFaceNets achieve results comparable to the top-ranked models while using significantly fewer FLOPs and less computation overhead, which demonstrates the practical value of the proposed MixFaceNets. All training code, pre-trained models, and training logs are available at https://github.com/fdbtrs/mixfacenets.
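The MegaFace, IJB-B, and IJB-C results above are reported as the true accept rate (TAR) at a fixed false accept rate (FAR). As an illustration of what that metric measures, the following is a minimal sketch, not the official evaluation protocol of any of these benchmarks. The function name `tar_at_far` and the toy scores are hypothetical, and the sketch assumes that higher similarity scores mean a more likely match and that a pair is accepted only when its score is strictly above the threshold.

```python
def tar_at_far(genuine_scores, impostor_scores, far_target):
    """Return (TAR, threshold) at the lowest threshold whose FAR <= far_target.

    genuine_scores:  similarity scores of same-identity pairs
    impostor_scores: similarity scores of different-identity pairs
    far_target:      maximum tolerated fraction of accepted impostor pairs
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("both score lists must be non-empty")

    impostors = sorted(impostor_scores, reverse=True)

    # Number of impostor pairs that may be accepted without exceeding the target FAR.
    allowed = int(far_target * len(impostors))

    # A pair is accepted only if score > threshold, so using the (allowed + 1)-th
    # highest impostor score as threshold accepts at most `allowed` impostors.
    if allowed >= len(impostors):
        threshold = float("-inf")
    else:
        threshold = impostors[allowed]

    accepted = sum(1 for s in genuine_scores if s > threshold)
    return accepted / len(genuine_scores), threshold


# Toy example with made-up scores (illustration only, not benchmark data).
genuine = [0.9, 0.8, 0.6, 0.3]
impostor = [0.7, 0.5, 0.2, 0.1]
tar, thr = tar_at_far(genuine, impostor, far_target=0.25)
print(f"TAR = {tar:.2f} at threshold {thr}")
```

The function returns TAR as a fraction; multiplying by 100 gives the percentage form in which such results are usually quoted. At a very low FAR such as 1e-6, a meaningful estimate requires at least on the order of a million impostor pairs, since with fewer pairs the allowed number of false accepts rounds down to zero.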

LFW

IJB-C

MegaFace

IJB-B