MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations

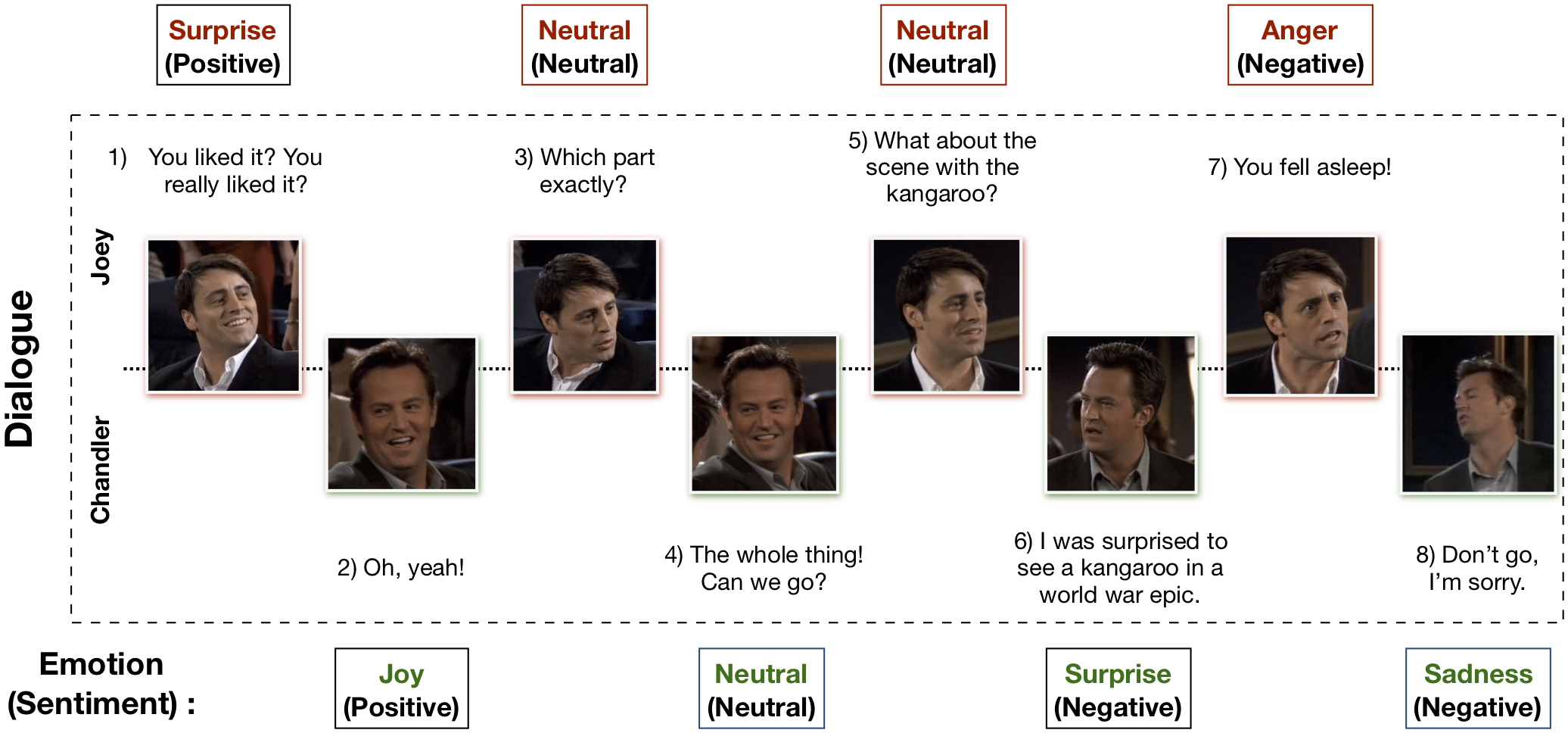

Emotion recognition in conversations is a challenging task that has recently gained popularity due to its potential applications. Until now, however, a large-scale multimodal multi-party emotional conversational database containing more than two speakers per dialogue has been missing. Thus, we propose the Multimodal EmotionLines Dataset (MELD), an extension and enhancement of EmotionLines. MELD contains about 13,000 utterances from 1,433 dialogues from the TV series Friends. Each utterance is annotated with emotion and sentiment labels, and encompasses audio, visual, and textual modalities. We propose several strong multimodal baselines and show the importance of contextual and multimodal information for emotion recognition in conversations. The full dataset is available for use at http://affective-meld.github.io.

ACL 2019

MELD

IEMOCAP

SEMAINE

EmotionLines