Mask Conditional Synthetic Satellite Imagery

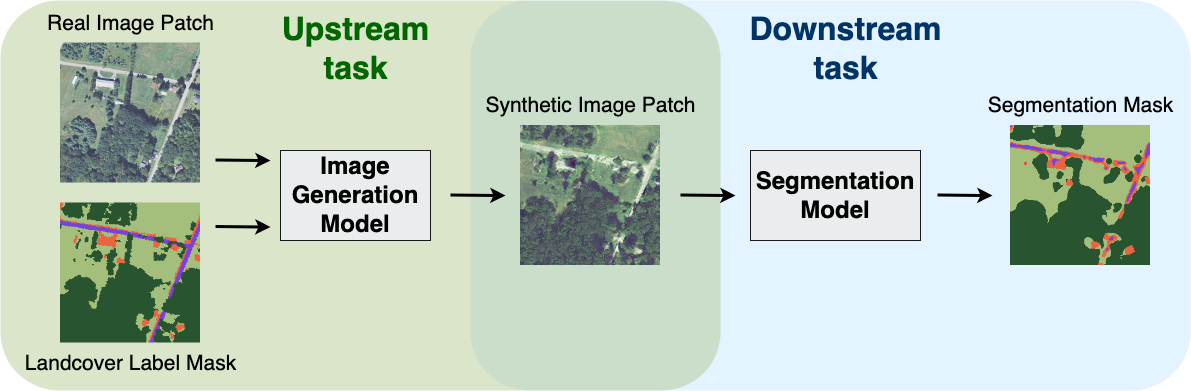

We propose a mask-conditional image generation model for creating synthetic satellite imagery datasets. Given a dataset of real high-resolution images and accompanying land cover masks, we show that it is possible to (1) train an upstream conditional image generator, (2) use that generator to create synthetic imagery from the land cover masks, and (3) train a downstream model on the synthetic imagery and land cover masks that achieves test performance similar to that of a model trained on the real imagery. Further, we find that training on a mixture of real and synthetic imagery acts as a form of data augmentation, producing better models than training on real imagery alone (0.5834 vs. 0.5235 mIoU). Finally, we find that encouraging diversity of outputs in the upstream model is necessary for improved downstream task performance. Code for reproducing our work is available on GitHub: https://github.com/ms-synthetic-satellite-image/synthetic-satellite-imagery
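The abstract reports downstream performance as mIoU (mean intersection over union). As a rough illustration of that metric, and not the authors' evaluation code, a minimal NumPy sketch might look like the following. The function name `mean_iou`, the integer class-index encoding of the masks, and the choice to skip classes that appear in neither the prediction nor the target are all assumptions made here; the released repository may aggregate the metric differently (for example, over a whole test set rather than per mask).

```python
import numpy as np


def mean_iou(pred, target, num_classes):
    """Mean intersection over union between two class-index masks.

    Hypothetical helper for illustration: `pred` and `target` are arrays of
    integer class labels with the same shape. Classes absent from both masks
    are skipped so they do not distort the average.
    """
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    if pred.shape != target.shape:
        raise ValueError("pred and target must contain the same number of pixels")

    ious = []
    for c in range(num_classes):
        pred_c = pred == c
        target_c = target == c
        union = np.logical_or(pred_c, target_c).sum()
        if union == 0:
            continue  # class c does not occur in either mask
        intersection = np.logical_and(pred_c, target_c).sum()
        ious.append(intersection / union)

    # If no class occurs at all, the metric is undefined.
    return float(np.mean(ious)) if ious else float("nan")


# Toy 2x2 land cover masks with three classes (0, 1, 2).
pred = [[0, 0], [1, 2]]
target = [[0, 1], [1, 2]]
score = mean_iou(pred, target, num_classes=3)
print(round(score, 4))  # per-class IoUs are 0.5, 0.5, 1.0, so this prints 0.6667
```

Under these assumptions, the comparison in the abstract (0.5834 vs. 0.5235) corresponds to computing such a score for two downstream models on the same held-out real imagery.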
