Towards Better Explanations of Class Activation Mapping

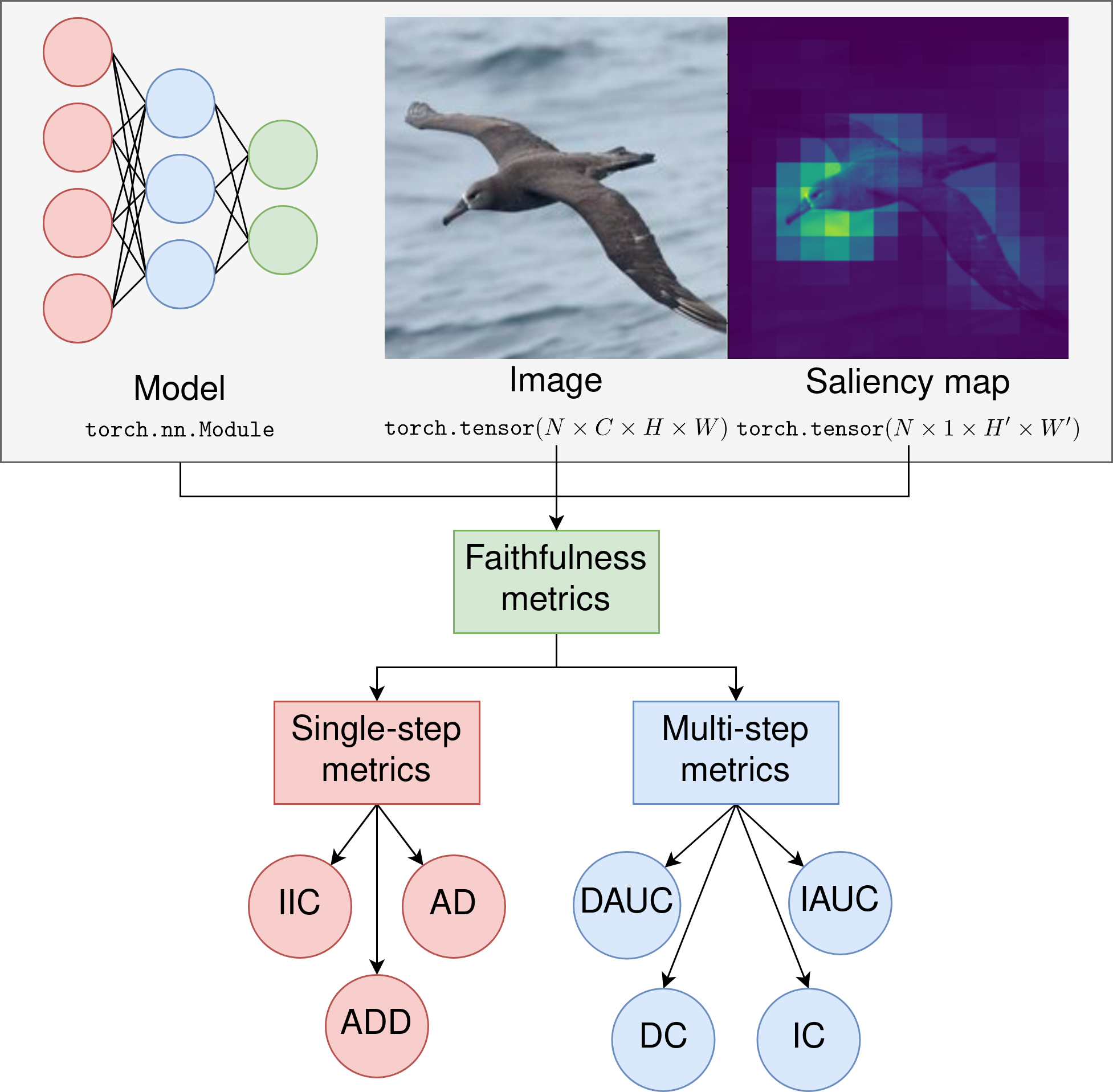

Increasing demand for understanding the internal behavior of convolutional neural networks (CNNs) has led to remarkable improvements in explanation methods. In particular, several class activation mapping (CAM) based methods, which generate visual explanation maps as a linear combination of activation maps from CNNs, have been proposed. However, most of these methods lack a clear theoretical basis for how they assign the coefficients of the linear combination. In this paper, we revisit the intrinsic linearity of CAM with respect to the activation maps: we construct an explanation model of a CNN as a linear function of binary variables that denote the existence of the corresponding activation maps. With this approach, the explanation model can be determined analytically by additive feature attribution methods. We then demonstrate the adequacy of SHAP values, which are the unique solution for the explanation model satisfying a set of desirable properties, as the coefficients of CAM. Since exact SHAP values are unattainable, we introduce an efficient approximation method, LIFT-CAM, based on DeepLIFT. Our proposed LIFT-CAM can estimate the SHAP values of the activation maps with high speed and accuracy. Furthermore, it greatly outperforms previous CAM-based methods in both qualitative and quantitative aspects.
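
To make the idea in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It computes exact Shapley values over binary "activation map present or absent" variables for a tiny toy model, then uses them as the coefficients of a linear combination of the activation maps. The function names, the toy score function `toy_class_score`, and all array sizes are assumptions made for illustration. The brute-force loop visits every subset of maps, which hints at why exact values are out of reach for real networks with hundreds of maps and why an approximation such as LIFT-CAM is needed.

```python
import itertools
import math

import numpy as np


def exact_shapley(value_fn, num_features):
    """Exact Shapley values of value_fn over binary presence masks.

    The cost grows as 2**num_features, so this is only feasible for toy cases.
    """
    n = num_features
    phi = np.zeros(n)
    for k in range(n):
        others = [j for j in range(n) if j != k]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes k.
            weight = (math.factorial(size) * math.factorial(n - size - 1)
                      / math.factorial(n))
            for subset in itertools.combinations(others, size):
                mask = np.zeros(n)
                for j in subset:
                    mask[j] = 1.0
                without_k = value_fn(mask)
                mask[k] = 1.0
                with_k = value_fn(mask)
                phi[k] += weight * (with_k - without_k)
    return phi


def toy_class_score(mask, activations, head_weights):
    """Hypothetical stand-in for the layers after the target conv layer."""
    # Absent maps (mask == 0) are zeroed out before pooling.
    pooled = (mask[:, None, None] * activations).mean(axis=(1, 2))
    return float(np.tanh(pooled @ head_weights))


def cam_from_coefficients(activations, coefficients):
    """Coefficient-weighted sum of activation maps: (K, H, W) -> (H, W)."""
    return np.tensordot(coefficients, activations, axes=1)


# Toy setup (assumed sizes): 4 activation maps of 3x3 each.
rng = np.random.default_rng(0)
activations = rng.random((4, 3, 3))
head_weights = rng.normal(size=4)


def score(mask):
    return toy_class_score(mask, activations, head_weights)


phi = exact_shapley(score, 4)
saliency = cam_from_coefficients(activations, phi)
```

In this sketch the coefficients sum to the difference between the score with all maps present and the score with none, which is the local accuracy property of additive feature attribution methods.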

ICCV 2021

MS COCO

Visual Question Answering