Level Set Teleportation: An Optimization Perspective

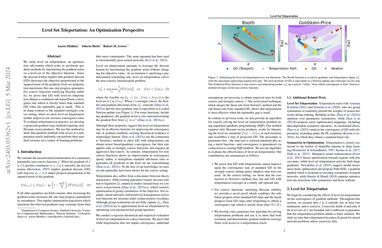

We study level set teleportation, an optimization sub-routine that seeks to accelerate gradient methods by maximizing the gradient norm over a level set of the objective function. Since the descent lemma implies that gradient descent (GD) decreases the objective by an amount proportional to the squared norm of the gradient, level set teleportation maximizes this one-step progress guarantee. For convex functions satisfying Hessian stability, we prove that GD with level set teleportation obtains a combined sub-linear/linear convergence rate that is strictly faster than standard GD when the optimality gap is small. This is in sharp contrast to the standard (strongly) convex setting, where we show that level set teleportation neither improves nor worsens convergence rates. To evaluate teleportation in practice, we develop a projected-gradient-type method requiring only Hessian-vector products. We use this method to show that gradient methods with access to a teleportation oracle uniformly outperform their standard versions on a variety of learning problems.
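The one-step guarantee referred to above can be written out explicitly. The following is a minimal sketch and not the paper's own statement: it assumes $f$ is $L$-smooth and twice differentiable, and the symbols $L$, $\eta$, $x_k$, and $x_k^+$ are notation introduced here for illustration. The exact constraint set used in the paper (level set versus sub-level set) may differ from the equality constraint shown.

```latex
% Descent lemma for an L-smooth f and step size \eta > 0 (assumed notation):
\[
  f\bigl(x_k - \eta \nabla f(x_k)\bigr)
  \;\le\; f(x_k) - \eta\Bigl(1 - \tfrac{L\eta}{2}\Bigr)\,\|\nabla f(x_k)\|_2^2 .
\]
% With the standard choice \eta = 1/L this reduces to
\[
  f(x_{k+1}) \;\le\; f(x_k) - \frac{1}{2L}\,\|\nabla f(x_k)\|_2^2 .
\]
% Teleportation picks the point on the current level set that makes the
% right-hand side smallest, i.e. the one with the largest gradient norm:
\[
  x_k^{+} \;\in\; \operatorname*{arg\,max}_{x}\; \tfrac{1}{2}\,\|\nabla f(x)\|_2^2
  \quad \text{subject to} \quad f(x) = f(x_k).
\]
% The gradient of this objective is a Hessian-vector product:
\[
  \nabla_x \Bigl( \tfrac{1}{2}\,\|\nabla f(x)\|_2^2 \Bigr) \;=\; \nabla^2 f(x)\,\nabla f(x).
\]
```

Because the objective value is unchanged on the level set, moving from $x_k$ to $x_k^+$ costs nothing in $f$ while enlarging the guaranteed decrease of the next GD step. The last identity suggests why a first-order method for the teleportation sub-problem would need only Hessian-vector products rather than the full Hessian.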
