Learning Transferable Visual Models From Natural Language Supervision

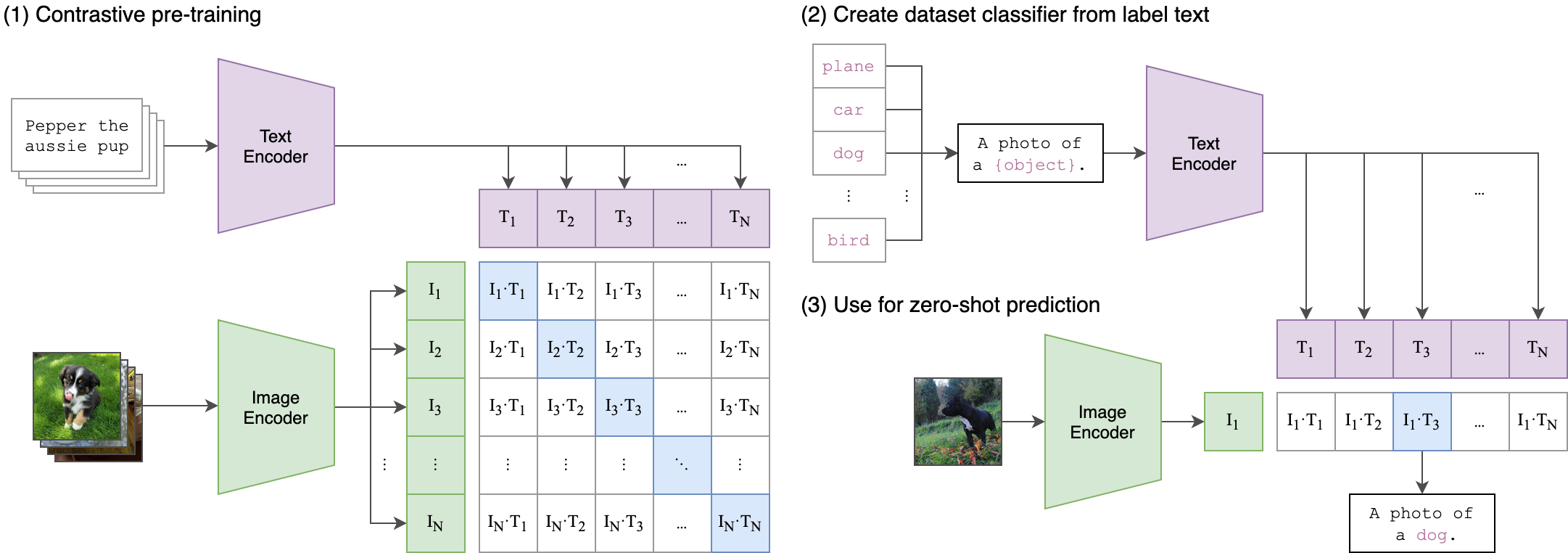

State-of-the-art computer vision systems are trained to predict a fixed set of predetermined object categories. This restricted form of supervision limits their generality and usability since additional labeled data is needed to specify any other visual concept. Learning directly from raw text about images is a promising alternative that leverages a much broader source of supervision. We demonstrate that the simple pre-training task of predicting which caption goes with which image is an efficient and scalable way to learn SOTA image representations from scratch on a dataset of 400 million (image, text) pairs collected from the internet. After pre-training, natural language is used to reference learned visual concepts (or describe new ones), enabling zero-shot transfer of the model to downstream tasks. We study the performance of this approach by benchmarking on over 30 different existing computer vision datasets, spanning tasks such as OCR, action recognition in videos, geo-localization, and many types of fine-grained object classification. The model transfers non-trivially to most tasks and is often competitive with a fully supervised baseline without the need for any dataset-specific training. For instance, we match the accuracy of the original ResNet-50 on ImageNet zero-shot without needing to use any of the 1.28 million training examples it was trained on. We release our code and pre-trained model weights at https://github.com/OpenAI/CLIP.
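To make the two ideas in the abstract concrete (matching captions to images during pre-training, then classifying by comparing an image against text descriptions of each class), here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the released implementation: the abstract does not spell out the loss, so the symmetric cross-entropy over pairwise cosine similarities, the fixed `temperature=0.07`, and all function names (`contrastive_loss`, `zero_shot_classify`, and the helpers) are assumptions chosen for this example. The embeddings are hand-written toy vectors standing in for the outputs of real image and text encoders.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_logits(image_embs, text_embs, temperature=0.07):
    """Pairwise cosine similarities divided by a temperature.

    Rows index images, columns index texts. The temperature value is an
    assumption for this sketch, not something stated in the abstract.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts]
            for i in imgs]


def cross_entropy(logits_row, target):
    """Negative log-softmax of the target entry, computed stably."""
    m = max(logits_row)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits_row))
    return log_z - logits_row[target]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss: image k should pick caption k, and caption k image k."""
    logits = cosine_logits(image_embs, text_embs, temperature)
    n = len(logits)
    loss_i2t = sum(cross_entropy(logits[k], k) for k in range(n)) / n
    columns = [list(col) for col in zip(*logits)]  # transpose: texts as rows
    loss_t2i = sum(cross_entropy(columns[k], k) for k in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


def zero_shot_classify(image_emb, class_text_embs):
    """Return the index of the class text most similar to the image."""
    logits = cosine_logits([image_emb], class_text_embs)[0]
    return max(range(len(logits)), key=logits.__getitem__)


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    captions = [[1.0, 0.0], [0.0, 1.0]]
    # Correctly paired batches should score a lower loss than mismatched ones.
    print(contrastive_loss(images, captions) < contrastive_loss(images, captions[::-1]))
    print(zero_shot_classify([0.9, 0.1], captions))
```

In a real pipeline the class text embeddings would come from encoding a prompt for each label with the text encoder, which is what lets the same model be applied to a new dataset without dataset-specific training.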

Tasks

Action Recognition

Benchmarking

Few-Shot Image Classification

Image Classification

Long-tail Learning

Meme Classification

Natural Language Understanding

Object Categorization

Open Vocabulary Attribute Detection

Out-of-Distribution Generalization

Prompt Engineering

Semi-Supervised Image Classification

Text Generation

Visual Reasoning

Zero-Shot Cross-Modal Retrieval

Zero-Shot Learning

Zero-Shot Transfer Image Classification

Datasets

ImageNet

MS COCO

KITTI

GLUE

SST

UCF101

SST-2

Visual Genome

Oxford 102 Flower

Flickr30k

Stanford Cars

DTD

Caltech-101

EuroSAT

FGVC-Aircraft

ImageNet-R

WebText

ImageNet-A

YFCC100M

ImageNet-Sketch

FairFace

Hateful Memes

FER2013

ObjectNet

RESISC45

Kinetics-700

PCam

Oxford-IIIT Pet Dataset

Oxford-IIIT Pets

SUN397

ImageNet-S

OmniBenchmark

SUN

ImageNet-W

OVAD benchmark

GRIT

VOC-MLT

COCO-MLT

RareAct

Results from the Paper

Ranked #1 on Zero-Shot Learning on COCO-MLT (using extra training data)