Learning to Combine: Knowledge Aggregation for Multi-Source Domain Adaptation

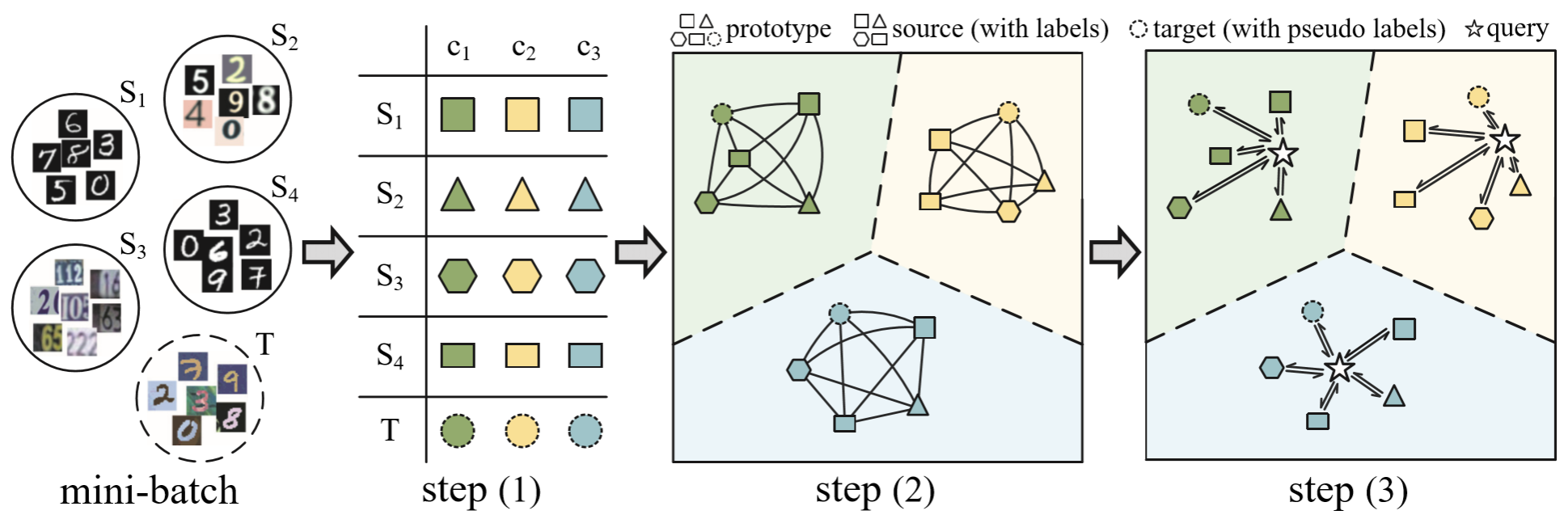

Transferring knowledge learned from multiple source domains to a target domain is a more practical and challenging task than conventional single-source domain adaptation. Furthermore, the increase in modalities makes it more difficult to align feature distributions among multiple domains. To mitigate these problems, we propose a Learning to Combine for Multi-Source Domain Adaptation (LtC-MSDA) framework that explores interactions among domains. In a nutshell, a knowledge graph is constructed on the prototypes of various domains to propagate information among semantically adjacent representations. On this basis, a graph model is learned to predict query samples under the guidance of correlated prototypes. In addition, we design a Relation Alignment Loss (RAL) to promote the consistency of categories' relational interdependency and the compactness of features, which boosts features' intra-class invariance and inter-class separability. Comprehensive results on public benchmark datasets demonstrate that our approach outperforms existing methods by a remarkable margin. Our code is available at https://github.com/ChrisAllenMing/LtC-MSDA
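To make the prototype-and-graph idea concrete, the following is a minimal NumPy sketch, not the authors' implementation (see the linked repository for that). It assumes prototypes are per-class feature means within each source domain, that graph edges come from a Gaussian kernel on feature distance, and that a single row-normalized propagation step without learned weights stands in for the learned graph model. The function names `class_prototypes`, `gaussian_adjacency`, and `predict_queries`, and the parameter `sigma`, are hypothetical.

```python
import numpy as np


def class_prototypes(features, labels, num_classes):
    """Per-class mean feature vectors (assumes every class appears at least once)."""
    return np.stack([features[labels == c].mean(axis=0) for c in range(num_classes)])


def gaussian_adjacency(nodes, sigma=1.0):
    """Dense adjacency from pairwise squared distances (kernel choice is an assumption)."""
    sq_dist = ((nodes[:, None, :] - nodes[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))


def predict_queries(prototypes, proto_labels, queries, num_classes, sigma=1.0):
    """Propagate prototype labels to query nodes with one graph step."""
    nodes = np.concatenate([prototypes, queries], axis=0)
    adj = gaussian_adjacency(nodes, sigma)
    adj_norm = adj / adj.sum(axis=1, keepdims=True)  # row-normalize the adjacency

    # Label signal: one-hot rows for prototypes, all-zero rows for queries.
    signal = np.zeros((len(nodes), num_classes))
    signal[np.arange(len(prototypes)), proto_labels] = 1.0

    scores = adj_norm @ signal  # one propagation step, no learned weights
    return scores[len(prototypes):].argmax(axis=1)


def demo():
    """Two synthetic source domains, two classes, two target queries."""
    rng = np.random.default_rng(0)
    centers = np.array([[-3.0, 0.0], [3.0, 0.0]])  # class centers
    domain_shifts = [np.array([0.0, 0.0]), np.array([0.0, 1.0])]

    protos, proto_labels = [], []
    for shift in domain_shifts:
        labels = np.repeat(np.arange(2), 20)
        feats = centers[labels] + shift + 0.1 * rng.standard_normal((40, 2))
        protos.append(class_prototypes(feats, labels, num_classes=2))
        proto_labels.append(np.arange(2))

    prototypes = np.concatenate(protos, axis=0)
    proto_labels = np.concatenate(proto_labels, axis=0)
    queries = np.array([[-3.0, 0.5], [3.0, 0.5]])
    return predict_queries(prototypes, proto_labels, queries, num_classes=2)


if __name__ == "__main__":
    print(demo())
```

In this sketch each query is assigned the class whose prototypes, pooled across domains, sit closest to it in feature space. The abstract describes a learned graph model and an additional Relation Alignment Loss, neither of which is modeled here.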

ECCV 2020

MNIST

SVHN

DomainNet

Office-31

USPS

MNIST-M
