Learning Garment DensePose for Robust Warping in Virtual Try-On

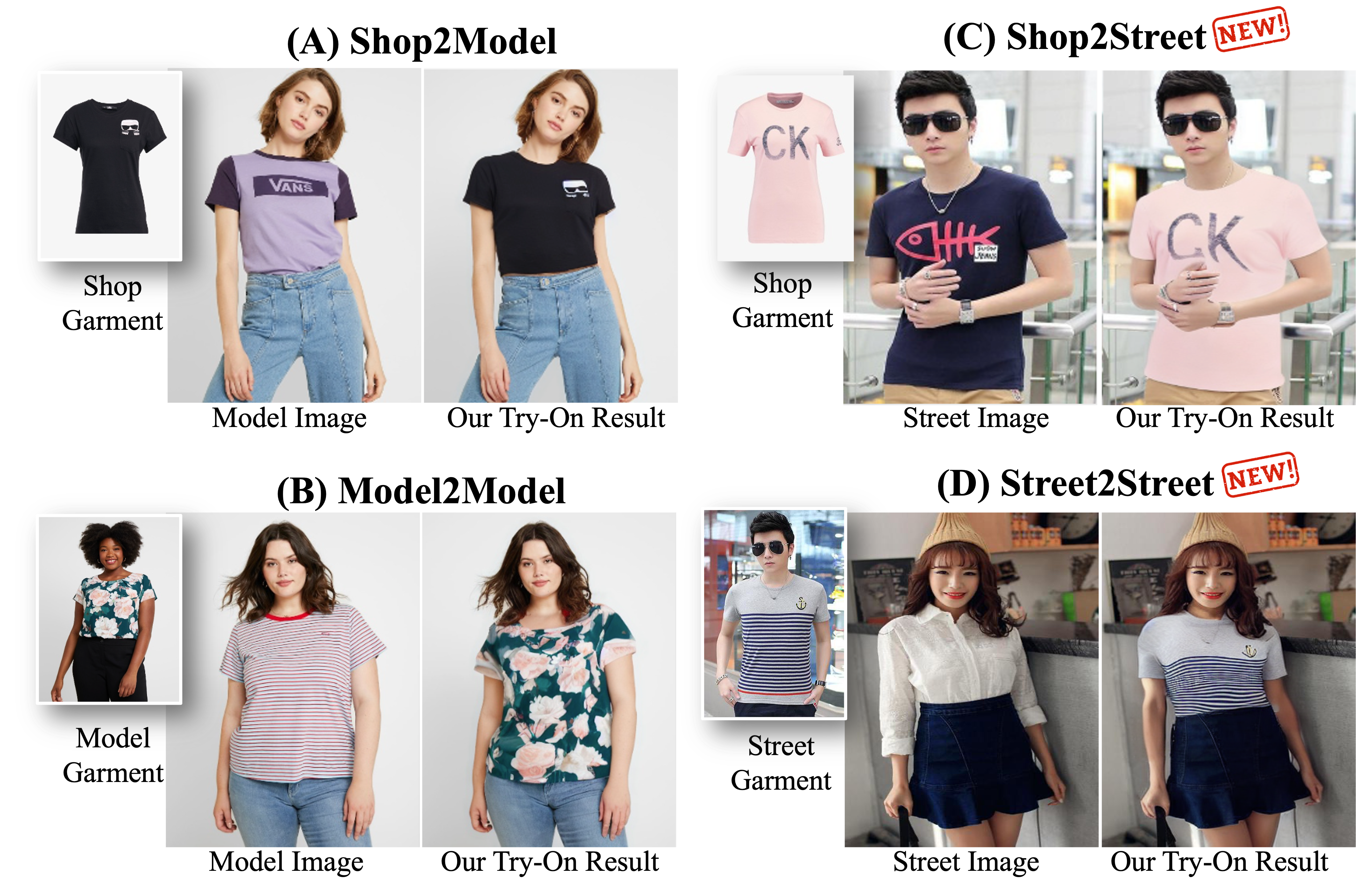

Virtual try-on, i.e., letting people virtually try on new garments, is an active research area in computer vision with significant commercial applications. Current virtual try-on methods usually work as a two-stage pipeline. In the first stage, the garment image is warped to the person's pose using a flow estimation network. In the second stage, the warped garment is fused with the person image to render a new try-on image. Unfortunately, such methods depend heavily on the quality of the garment warping, which often fails on hard poses (e.g., a person lifting or crossing their arms). In this work, we propose a robust warping method for virtual try-on based on a learned garment DensePose that has a direct correspondence with the person's DensePose. Because annotated data is lacking, we show how to leverage an off-the-shelf person DensePose model and a pretrained flow model to learn the garment DensePose in a weakly supervised manner. The garment DensePose allows robust warping to any person's pose without any additional computation. Our method achieves results on par with the state of the art on virtual try-on benchmarks and shows robust warping on in-the-wild person images with hard poses, making it better suited to real-world virtual try-on applications.
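To make the idea of a "direct correspondence" concrete, here is a minimal NumPy sketch of warping through shared surface coordinates. It is an illustration under stated assumptions, not the authors' implementation: it assumes both the garment and the person maps use a DensePose-style IUV layout (an integer part index plus continuous u, v in [0, 1]), and the function name `warp_by_shared_uv`, the `bins` resolution, and the nearest-bin lookup are hypothetical choices made for this example.

```python
import numpy as np


def warp_by_shared_uv(garment_rgb, garment_iuv, person_iuv, bins=64, n_parts=25):
    """Copy garment colours onto person pixels that share the same (part, u, v).

    Assumed layout (hypothetical, DensePose-style):
      garment_rgb: (Hg, Wg, 3) image.
      garment_iuv: (Hg, Wg, 3); channel 0 = part index (0 is background),
                   channels 1-2 = u, v in [0, 1].
      person_iuv:  (Hp, Wp, 3), same layout as garment_iuv.
    Returns the warped garment (Hp, Wp, 3) and a boolean validity mask (Hp, Wp).
    """

    def quantise(iuv):
        # Map continuous (u, v) to integer bins so they can index a table.
        part = iuv[..., 0].astype(np.int64)
        u = np.clip((iuv[..., 1] * bins).astype(np.int64), 0, bins - 1)
        v = np.clip((iuv[..., 2] * bins).astype(np.int64), 0, bins - 1)
        return part, u, v

    # Lookup table from surface coordinate to garment colour.
    table = np.zeros((n_parts, bins, bins, 3), dtype=garment_rgb.dtype)
    filled = np.zeros((n_parts, bins, bins), dtype=bool)

    g_part, g_u, g_v = quantise(garment_iuv)
    g_fg = g_part > 0  # ignore garment background pixels
    table[g_part[g_fg], g_u[g_fg], g_v[g_fg]] = garment_rgb[g_fg]
    filled[g_part[g_fg], g_u[g_fg], g_v[g_fg]] = True

    # Each person pixel reads the colour stored at its own surface coordinate.
    p_part, p_u, p_v = quantise(person_iuv)
    valid = (p_part > 0) & filled[p_part, p_u, p_v]
    warped = np.zeros(person_iuv.shape[:2] + (3,), dtype=garment_rgb.dtype)
    warped[valid] = table[p_part[valid], p_u[valid], p_v[valid]]
    return warped, valid
```

Because the lookup depends only on the two coordinate maps, no flow needs to be estimated for a new pose in this sketch; person pixels whose coordinate has no garment counterpart are simply left empty and flagged in the mask.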

DensePose

VITON-HD
