Generative Latent Implicit Conditional Optimization when Learning from Small Sample

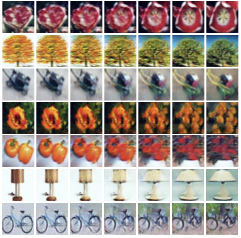

We revisit the long-standing problem of learning from a small sample, to which end we propose a novel method called GLICO (Generative Latent Implicit Conditional Optimization). GLICO learns a mapping from the training examples to a latent space and a generator that generates images from vectors in the latent space. Unlike most recent works, which rely on access to large amounts of unlabeled data, GLICO does not require access to any additional data other than the small set of labeled points. In fact, GLICO learns to synthesize completely new samples for every class using as little as 5 or 10 examples per class, with as few as 10 such classes without imposing any prior. GLICO is then used to augment the small training set while training a classifier on the small sample. To this end, our proposed method samples the learned latent space using spherical interpolation, and generates new examples using the trained generator. Empirical results show that the new sampled set is diverse enough, leading to improvement in image classification in comparison with the state of the art, when trained on small samples obtained from CIFAR-10, CIFAR-100, and CUB-200.
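The sampling step described in the abstract, spherical interpolation between learned latent vectors, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `slerp` and `sample_class_latent`, the choice to interpolate only between two latents of the same class, and the uniform draw of the interpolation weight `t` are all assumptions made for illustration. The trained generator that would map the resulting latent to an image is omitted.

```python
import numpy as np


def slerp(z0, z1, t):
    """Spherical linear interpolation between latent vectors z0 and z1.

    t=0 returns z0 and t=1 returns z1; intermediate values follow the
    great-circle arc between the two directions.
    """
    z0 = np.asarray(z0, dtype=float)
    z1 = np.asarray(z1, dtype=float)
    # Angle between the two directions, computed on normalized copies.
    u0 = z0 / np.linalg.norm(z0)
    u1 = z1 / np.linalg.norm(z1)
    omega = np.arccos(np.clip(np.dot(u0, u1), -1.0, 1.0))
    s = np.sin(omega)
    if np.isclose(s, 0.0):
        # Parallel or antiparallel vectors: fall back to linear interpolation.
        return (1.0 - t) * z0 + t * z1
    return (np.sin((1.0 - t) * omega) / s) * z0 + (np.sin(t * omega) / s) * z1


def sample_class_latent(latents, labels, cls, rng):
    """Hypothetical helper: draw a new latent for class `cls` by interpolating
    between two distinct training latents of that class (assumed strategy).
    Requires at least two examples of the class."""
    idx = np.flatnonzero(np.asarray(labels) == cls)
    i, j = rng.choice(idx, size=2, replace=False)
    t = rng.uniform(0.0, 1.0)
    return slerp(latents[i], latents[j], t)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy stand-in for learned latents: 10 unit vectors in 8 dimensions, 2 classes.
    latents = rng.normal(size=(10, 8))
    latents /= np.linalg.norm(latents, axis=1, keepdims=True)
    labels = np.array([0, 1] * 5)
    z_new = sample_class_latent(latents, labels, cls=0, rng=rng)
    print(z_new.shape, np.linalg.norm(z_new))
```

When both endpoints have unit norm, the interpolated vector also has unit norm, which is why spherical rather than linear interpolation is the natural choice for latents constrained to a sphere.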


Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |
|---|---|---|---|---|---|---|
| Small Data Image Classification | CIFAR-100, 1000 Labels | GLICO | Accuracy | 28.55 | # 3 | |
| Small Data Image Classification | CIFAR-10, 250 Labels | GLICO | Top-1 accuracy % | 43 | # 1 | |
| Small Data Image Classification | CIFAR-10, 500 Labels | GLICO | Accuracy (%) | 56.22 | # 6 | |
| Small Data Image Classification | CUB-200-2011, 30 samples per class | GLICO | Accuracy | 77.75 | # 1 | |
| Small Data Image Classification | CUB-200-2011, 5 samples per class | GLICO | Accuracy | 51.52 | # 1 | |

CIFAR-10

CIFAR-100

CUB-200-2011

DEIC Benchmark