Learning Efficient GANs for Image Translation via Differentiable Masks and co-Attention Distillation

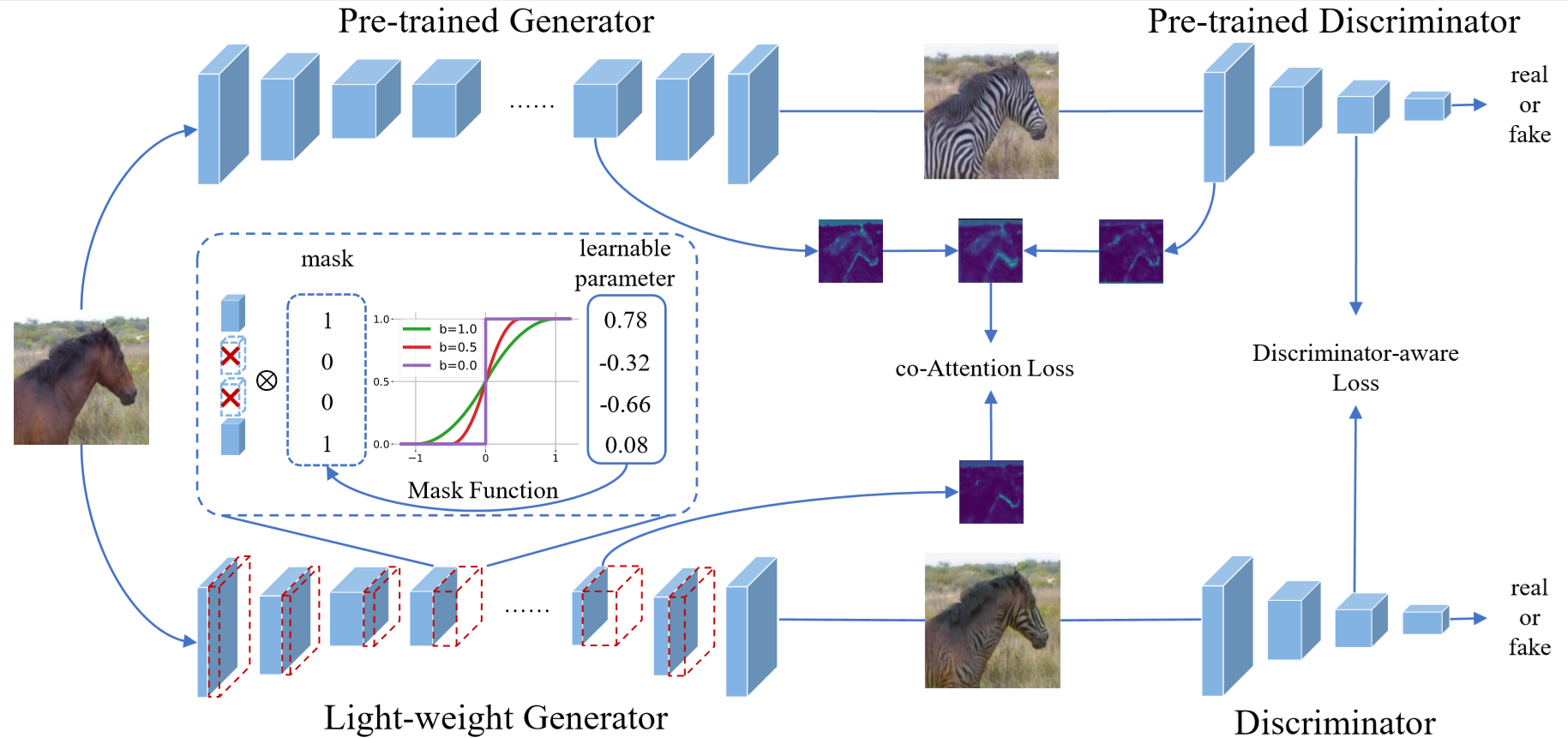

Generative Adversarial Networks (GANs) have been widely used in image translation, but their high computation and storage costs impede deployment on mobile devices. Prevalent methods for CNN compression cannot be directly applied to GANs due to the peculiarities of GAN tasks and the instability of adversarial training. To address these issues, we introduce a novel GAN compression method, termed DMAD, which combines a Differentiable Mask with a co-Attention Distillation. The former searches for a light-weight generator architecture in a training-adaptive manner. To overcome channel inconsistency when pruning the residual connections, an adaptive cross-block group sparsity is further incorporated. The latter simultaneously distills informative attention maps from both the generator and the discriminator of a pre-trained model to the searched generator, effectively stabilizing the adversarial training of our light-weight model. Experiments show that DMAD can reduce the Multiply Accumulate Operations (MACs) of CycleGAN by 13x and those of Pix2Pix by 4x while retaining performance comparable to the full model. Our code is available at https://github.com/SJLeo/DMAD.
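To make the two ingredients named in the abstract more concrete, the following is a minimal NumPy sketch, not the DMAD implementation. It assumes that an attention map is the channel-wise sum of squared activations (the common attention-transfer formulation, which may differ from the paper's definition) and that a mask is a per-channel scale whose near-zero entries mark prunable channels. The function names `attention_map`, `attention_distillation_loss`, and `masked_channels`, as well as the `threshold` parameter, are hypothetical.

```python
import numpy as np

def attention_map(features):
    """Collapse a (C, H, W) feature tensor into a unit-norm spatial attention vector.

    Assumption: attention = sum over channels of squared activations.
    """
    amap = np.sum(features ** 2, axis=0).reshape(-1)
    norm = np.linalg.norm(amap)
    return amap / norm if norm > 0 else amap

def attention_distillation_loss(student_feats, teacher_feats):
    """Squared L2 distance between normalized student and teacher attention maps."""
    diff = attention_map(student_feats) - attention_map(teacher_feats)
    return float(np.sum(diff ** 2))

def masked_channels(features, mask, threshold=1e-3):
    """Scale each channel by its mask value and flag channels that survive pruning.

    Assumption: channels whose |mask| falls below `threshold` are removable.
    """
    scaled = features * mask[:, None, None]
    keep = np.abs(mask) > threshold
    return scaled, keep

# Usage: the student has fewer channels than the teacher, yet the attention
# maps are comparable because the channel axis is summed out.
rng = np.random.default_rng(0)
teacher = rng.normal(size=(8, 4, 4))
student = rng.normal(size=(3, 4, 4))
loss = attention_distillation_loss(student, teacher)
```

Because the channel axis is summed out, the loss is defined even when the compressed generator has a different channel count from the pre-trained one, which is one reason attention maps are a convenient distillation target for a pruned network.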

CIFAR-10

Cityscapes

CelebA
