Learning Adaptive Classifiers Synthesis for Generalized Few-Shot Learning

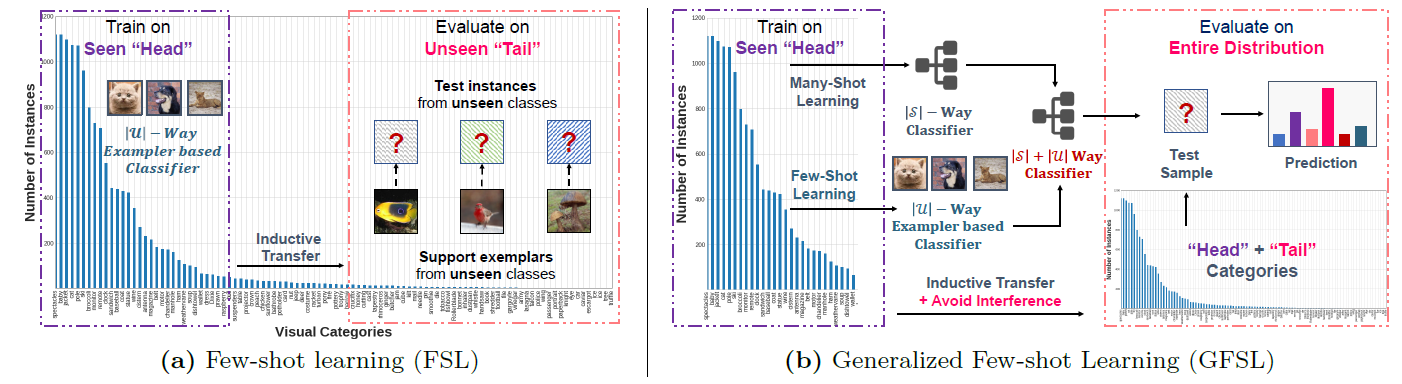

Object recognition in the real world requires handling long-tailed or even open-ended data. An ideal visual system needs to reliably recognize the well-populated head visual concepts while efficiently learning about emerging tail categories from only a few training instances. Class-balanced many-shot learning and few-shot learning each tackle only one side of this problem, by either learning strong classifiers for the head or learning to learn few-shot classifiers for the tail. In this paper, we investigate the problem of generalized few-shot learning (GFSL), in which a deployed model is required to learn about tail categories from few shots and simultaneously classify the head classes. We propose ClAssifier SynThesis LEarning (CASTLE), a learning framework that learns how to synthesize calibrated few-shot classifiers in addition to the multi-class classifiers of head classes with a shared neural dictionary, shedding light on inductive GFSL. Furthermore, we propose an adaptive version of CASTLE (ACASTLE) that adapts the head classifiers conditioned on the incoming tail training examples, yielding a framework that allows effective backward knowledge transfer. As a consequence, ACASTLE can effectively handle GFSL with classes from heterogeneous domains. CASTLE and ACASTLE outperform existing GFSL algorithms and strong baselines on the MiniImageNet and TieredImageNet datasets. More interestingly, they also outperform previous state-of-the-art methods when evaluated with standard few-shot learning criteria.
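To make the idea of synthesizing a tail classifier from a shared dictionary and scoring it jointly with the head classifiers more concrete, here is a minimal, illustrative sketch in plain Python. It is not the authors' implementation: the function names, the mean-embedding prototype, the dot-product attention, and the residual combination are all assumptions chosen for illustration, and the dictionary and classifiers are fixed toy values rather than learned parameters.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def prototype(support):
    # Assumption: a tail class is summarized by the mean of its few-shot embeddings.
    n = len(support)
    return [sum(col) / n for col in zip(*support)]

def synthesize_classifier(proto, keys, values):
    # Assumption: the prototype queries the shared dictionary with dot-product
    # attention, and the attended value is added to the prototype as a residual.
    attn = softmax([dot(proto, k) for k in keys])
    mix = [sum(a * v[d] for a, v in zip(attn, values)) for d in range(len(proto))]
    return [p + m for p, m in zip(proto, mix)]

def joint_predict(x, head_classifiers, tail_classifiers):
    # Score a query embedding against head and tail classifiers in one softmax,
    # so both groups of classes compete in the same label space.
    logits = [dot(x, w) for w in head_classifiers + tail_classifiers]
    return softmax(logits)

# Toy values (hypothetical): two head classes, one tail class with two shots.
head_classifiers = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
keys = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
values = [[0.1, 0.0, 0.0], [0.0, 0.0, 0.5]]
tail_support = [[0.0, 0.1, 0.9], [0.0, -0.1, 1.1]]

tail_w = synthesize_classifier(prototype(tail_support), keys, values)
probs_tail_query = joint_predict([0.0, 0.0, 1.0], head_classifiers, [tail_w])
probs_head_query = joint_predict([1.0, 0.0, 0.0], head_classifiers, [tail_w])
```

In this toy setup, a query that resembles the tail support examples is assigned to the synthesized tail classifier, while a query aligned with the first head classifier stays with that head class. A single softmax over the union of head and tail classes is what distinguishes the generalized setting from standard few-shot evaluation, where only the tail classes are scored.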

Office-Home

tieredImageNet
