Keep your Eyes on the Lane: Real-time Attention-guided Lane Detection

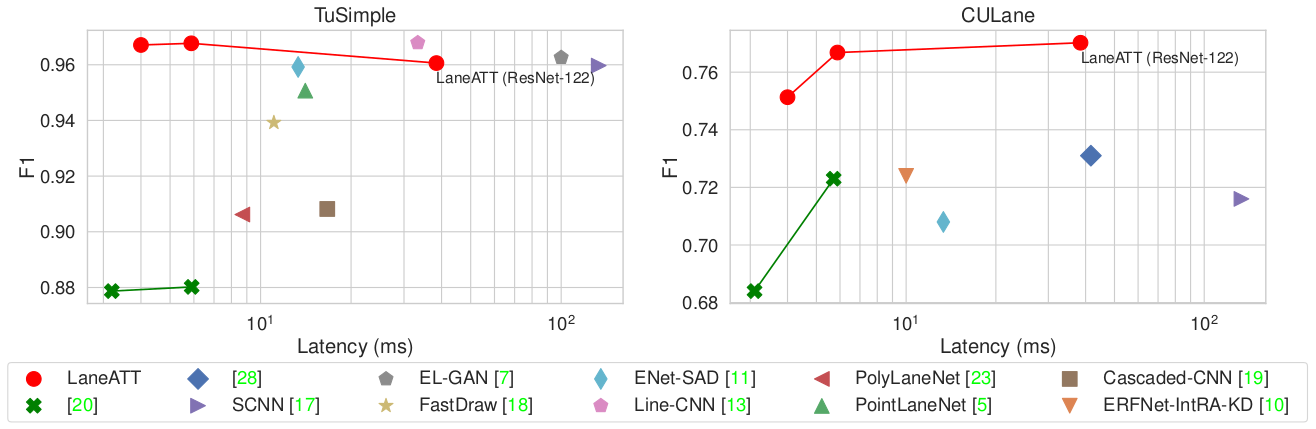

Modern lane detection methods have achieved remarkable performance in complex real-world scenarios, but many struggle to maintain real-time efficiency, which is important for autonomous vehicles. In this work, we propose LaneATT: an anchor-based deep lane detection model which, akin to other generic deep object detectors, uses the anchors for the feature pooling step. Since lanes follow a regular pattern and are highly correlated, we hypothesize that in some cases global information may be crucial to infer their positions, especially under conditions such as occlusion and missing lane markers. Thus, this work proposes a novel anchor-based attention mechanism that aggregates global information. The model was evaluated extensively on three of the most widely used datasets in the literature. The results show that our method outperforms the current state-of-the-art methods, showing both higher efficacy and efficiency. Moreover, an ablation study is performed, along with a discussion of efficiency trade-off options that are useful in practice.
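To make the idea of an anchor-based attention mechanism that aggregates global information more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not specify the layers, so the single linear scoring layer (`w`, `b`), the softmax over the other anchors, and the concatenation of local and global features are all assumptions, and the name `anchor_attention` is hypothetical.

```python
import numpy as np


def anchor_attention(local_feats, w, b):
    """Aggregate global information across anchors (illustrative sketch).

    local_feats: (n_anchors, d) features pooled along each anchor.
    w, b:        assumed linear layer mapping d -> n_anchors - 1 scores,
                 one score for every *other* anchor.
    Returns the concatenated [local, global] features and the
    (n_anchors, n_anchors) attention matrix.
    """
    n, _ = local_feats.shape
    scores = local_feats @ w + b                     # (n, n - 1)
    scores -= scores.max(axis=1, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)    # softmax per anchor

    # Scatter the n - 1 weights of each anchor into a full matrix whose
    # diagonal stays zero, so an anchor never attends to itself.
    attn = np.zeros((n, n))
    attn[~np.eye(n, dtype=bool)] = weights.ravel()

    global_feats = attn @ local_feats                # weighted sum of others
    return np.concatenate([local_feats, global_feats], axis=1), attn


# Hypothetical sizes: 5 anchors with 8-dimensional local features.
rng = np.random.default_rng(0)
feats = rng.normal(size=(5, 8))
w = rng.normal(size=(8, 4))
b = np.zeros(4)
combined, attn = anchor_attention(feats, w, b)
```

In this sketch each anchor's output feature carries both its own local evidence and a weighted summary of every other anchor, which is one plausible way for a partially occluded lane to borrow information from the visible ones.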

CVPR 2021

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Lane Detection | CULane | LaneATT (ResNet-122) | F1 score | 77.02 | #31 |
| Lane Detection | CULane | LaneATT (ResNet-18) | F1 score | 75.13 | #38 |
| Lane Detection | CULane | LaneATT (ResNet-34) | F1 score | 76.68 | #32 |
| Lane Detection | LLAMAS | LaneATT (ResNet-34) | F1 | 0.9374 | #6 |
| Lane Detection | LLAMAS | LaneATT (ResNet-122) | F1 | 0.9354 | #7 |
| Lane Detection | LLAMAS | LaneATT (ResNet-18) | F1 | 0.9346 | #8 |
| Lane Detection | TuSimple | LaneATT (ResNet-18) | Accuracy | 95.57% | #29 |
| Lane Detection | TuSimple | LaneATT (ResNet-18) | F1 score | 96.71 | #16 |
| Lane Detection | TuSimple | LaneATT (ResNet-34) | Accuracy | 95.63% | #27 |
| Lane Detection | TuSimple | LaneATT (ResNet-34) | F1 score | 96.77 | #15 |
| Lane Detection | TuSimple | LaneATT (ResNet-122) | Accuracy | 96.10% | #22 |
| Lane Detection | TuSimple | LaneATT (ResNet-122) | F1 score | 96.06 | #24 |
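For readers unfamiliar with the F1 metric reported above, the following short sketch shows the standard definition as the harmonic mean of precision and recall. The function name `f1_score` and the example counts are hypothetical; how a predicted lane is matched to a ground-truth lane (and therefore counted as a true positive) is specific to each benchmark and is not reproduced here.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Standard F1 from true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Hypothetical counts: 8 correct lanes, 2 spurious, 2 missed.
example = f1_score(tp=8, fp=2, fn=2)
```

Note that the table mixes scales: the CULane and TuSimple F1 values are given as percentages, while the LLAMAS values are fractions between 0 and 1.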

Datasets: CULane, TuSimple, LLAMAS