Is Mamba Effective for Time Series Forecasting?

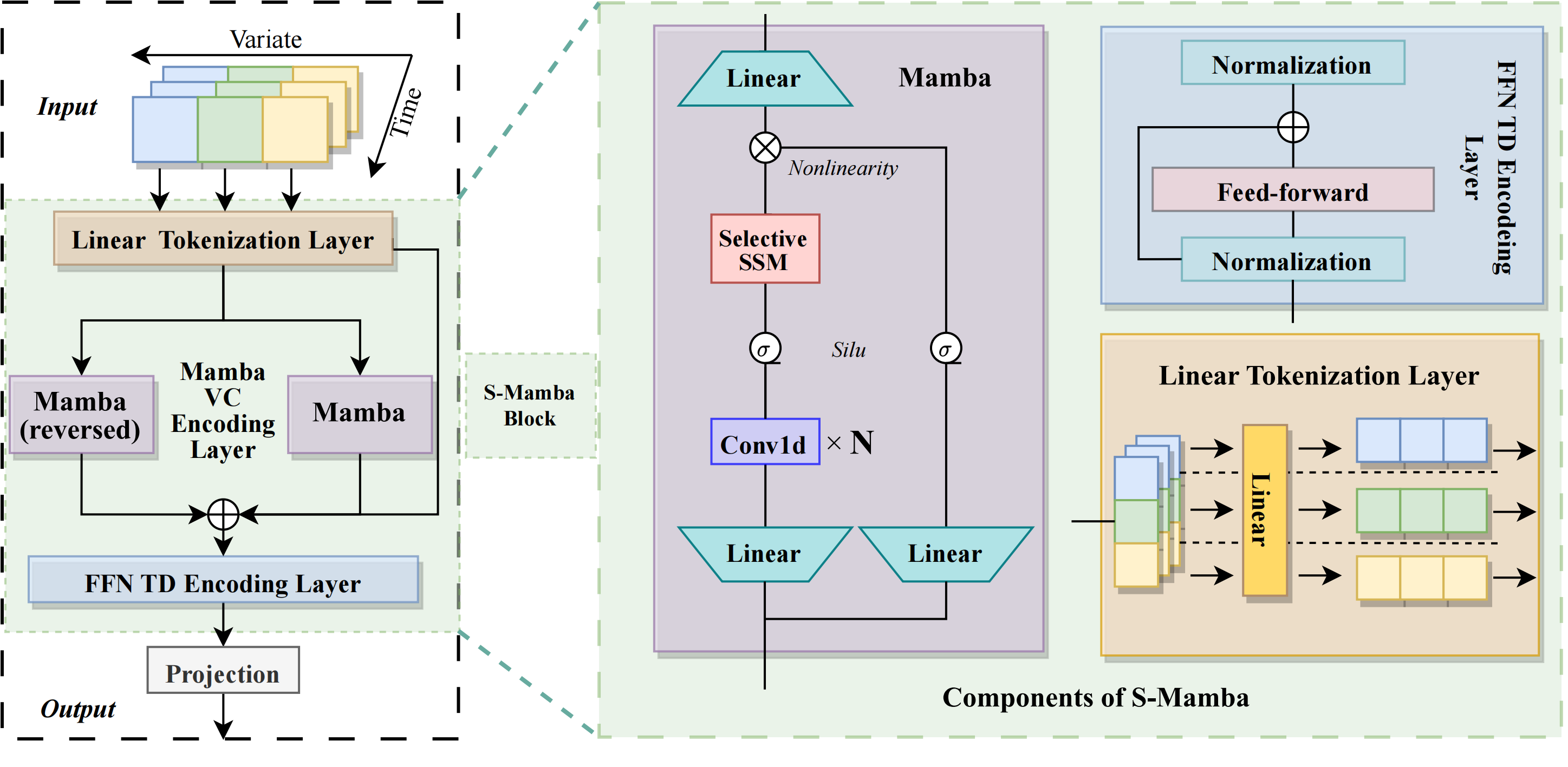

In time series forecasting (TSF), a model must discern and distill the dependencies embedded in historical time series data. These include temporal dependencies (TD) and inter-variate correlations (VC), which together enable the model to forecast future states. Transformer-based models have shown strong performance in TSF, largely because they can capture both TD and VC. However, self-attention scales quadratically with the number of tokens, so efforts to make the Transformer more efficient are ongoing. Recently, state space models (SSMs) such as Mamba have gained traction. Like the Transformer, they can model complex dependencies in sequences, but they do so with near-linear complexity. This motivated us to explore the potential of SSMs for TSF. We therefore propose a Mamba-based model named Simple-Mamba (S-Mamba) for TSF. Specifically, we tokenize the time points of each variate independently via a linear layer. A bidirectional Mamba layer then extracts VC. Finally, a Feed-Forward Network (FFN) for TD, followed by a mapping layer, produces the forecasts. Experiments on several datasets show that S-Mamba keeps computational overhead low while achieving leading performance. Furthermore, we conduct extensive experiments to examine the potential of Mamba relative to the Transformer in TSF. Our code is available at https://github.com/wzhwzhwzh0921/S-D-Mamba.
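The pipeline described above (per-variate linear tokenizer, then a bidirectional Mamba layer across variates for VC, then an FFN for TD, then a mapping layer) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation; their code is in the linked repository. Several simplifications are assumed here. The selective scan (`SelectiveSSM`) omits Mamba's causal convolution, gating, and hardware-aware kernel. The residual connections, the ReLU activation, and `d_model`/`d_state` sizes are illustrative choices. All class and helper names (`linear`, `SelectiveSSM`, `BiMamba`, `SMambaSketch`) are hypothetical, and the weights are random and untrained, so the output only demonstrates shapes and data flow.

```python
import numpy as np

rng = np.random.default_rng(0)


def linear(d_in, d_out):
    # Hypothetical helper: random, untrained weight matrix and zero bias.
    return rng.normal(0.0, d_in ** -0.5, (d_in, d_out)), np.zeros(d_out)


class SelectiveSSM:
    """Simplified Mamba-style selective scan (assumption: no conv, no gating)."""

    def __init__(self, d_model, d_state=8):
        # Negative real diagonal A (S4D-real style): each state decays during the scan.
        self.A = -np.tile(np.arange(1, d_state + 1, dtype=float), (d_model, 1))
        self.W_dt, self.b_dt = linear(d_model, d_model)
        self.W_B, _ = linear(d_model, d_state)
        self.W_C, _ = linear(d_model, d_state)
        self.D = np.ones(d_model)  # skip connection inside the SSM

    def __call__(self, x):  # x: (L, d_model), scanned along axis 0
        h = np.zeros(self.A.shape)
        ys = np.empty_like(x)
        for t in range(x.shape[0]):
            xt = x[t]
            dt = np.logaddexp(0.0, xt @ self.W_dt + self.b_dt)  # softplus: input-dependent step
            B, C = xt @ self.W_B, xt @ self.W_C                 # input-dependent B_t and C_t
            A_bar = np.exp(dt[:, None] * self.A)                # zero-order-hold discretized A
            h = A_bar * h + dt[:, None] * B[None, :] * xt[:, None]  # simplified (Euler) B term
            ys[t] = h @ C + self.D * xt
        return ys


class BiMamba:
    """Scan the variate tokens forward and backward, then sum the two outputs."""

    def __init__(self, d_model):
        self.fwd = SelectiveSSM(d_model)
        self.bwd = SelectiveSSM(d_model)

    def __call__(self, tokens):  # tokens: (N_variates, d_model)
        return self.fwd(tokens) + self.bwd(tokens[::-1])[::-1]


class SMambaSketch:
    def __init__(self, lookback, horizon, d_model=16):
        self.W_emb, self.b_emb = linear(lookback, d_model)  # per-variate tokenizer
        self.vc = BiMamba(d_model)                          # mixes information across variates (VC)
        self.W1, self.b1 = linear(d_model, 2 * d_model)     # FFN applied per token (TD)
        self.W2, self.b2 = linear(2 * d_model, d_model)
        self.W_out, self.b_out = linear(d_model, horizon)   # mapping layer to the forecast horizon

    def __call__(self, x):  # x: (lookback, N_variates)
        tokens = x.T @ self.W_emb + self.b_emb              # (N, d_model): one token per variate
        tokens = tokens + self.vc(tokens)                   # residual around BiMamba (assumed)
        hidden = np.maximum(0.0, tokens @ self.W1 + self.b1)  # ReLU (assumed activation)
        tokens = tokens + hidden @ self.W2 + self.b2        # residual around FFN (assumed)
        return (tokens @ self.W_out + self.b_out).T         # (horizon, N_variates)


model = SMambaSketch(lookback=96, horizon=24)
series = rng.normal(size=(96, 7))  # random data: 96 past steps, 7 variates
forecast = model(series)
print(forecast.shape)  # (24, 7)
```

Because the variate tokens have no natural order, a single forward scan would let each variate see only the variates that happen to precede it. Summing a forward and a backward scan, as in this sketch, lets every variate token receive information from all the others.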

