Is Adversarial Training with Compressed Datasets Effective?

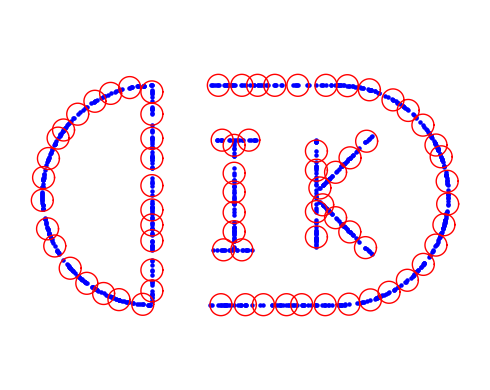

Dataset Condensation (DC) refers to a recent class of dataset compression methods that generate a smaller synthetic dataset from a larger one. The synthetic dataset retains the essential information of the original, enabling models trained on it to achieve performance comparable to models trained on the full dataset. Most current DC methods have mainly been concerned with achieving high test performance under a limited data budget, and have not directly addressed the question of adversarial robustness. In this work, we investigate the adversarial robustness of models trained with compressed datasets. We show that the compressed datasets obtained from DC methods are not effective in transferring adversarial robustness to models. To improve dataset compression efficiency and adversarial robustness simultaneously, we propose a novel robustness-aware dataset compression method based on finding the Minimal Finite Covering (MFC) of the dataset. The proposed method is (1) obtained by a one-time computation and applicable to any model, (2) more effective than DC methods when adversarial training is applied over the MFC, and (3) provably robust by minimizing the generalized adversarial loss. Additionally, empirical evaluation on three datasets shows that the proposed method achieves a better robustness-performance trade-off than DC methods such as distribution matching.
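To give a rough intuition for what a finite covering of a dataset looks like, the sketch below selects a subset of points such that every point lies within a chosen radius of some selected point. This is only an illustration under stated assumptions: the greedy rule, the Euclidean metric, the function name `greedy_cover`, and the `radius` parameter are hypothetical choices made here, not the authors' MFC procedure, and a greedy pass yields a valid cover that is not guaranteed to be minimal.

```python
import math
from typing import List, Sequence


def greedy_cover(points: Sequence[Sequence[float]], radius: float) -> List[int]:
    """Return indices of centers so that every point is within `radius` of a center.

    Illustrative greedy covering (an assumption, not the paper's algorithm):
    the result is a finite cover, but not necessarily the smallest one.
    """
    centers: List[int] = []
    for i, p in enumerate(points):
        # Keep p as a new center only if no existing center already covers it.
        if all(math.dist(p, points[c]) > radius for c in centers):
            centers.append(i)
    return centers


# Toy 2-D data: two tight clusters and one isolated point.
points = [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0), (1.05, 0.95), (3.0, 3.0)]
centers = greedy_cover(points, radius=0.5)
print(centers)
```

On this toy data the five points are reduced to three centers, one per cluster plus the isolated point. In a robustness-aware setting, adversarial training would then be run on the selected subset rather than on the full dataset.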

CIFAR-10

MNIST
