From latent dynamics to meaningful representations

While representation learning has been central to the rise of machine learning and artificial intelligence, a key problem remains in making the learned representations meaningful. For this, the typical approach is to regularize the learned representation through prior probability distributions. However, such priors are usually unavailable or are ad hoc. To deal with this, recent efforts have shifted towards leveraging the insights from physical principles to guide the learning process. In this spirit, we propose a purely dynamics-constrained representation learning framework. Instead of relying on predefined probabilities, we restrict the latent representation to follow overdamped Langevin dynamics with a learnable transition density - a prior driven by statistical mechanics. We show this is a more natural constraint for representation learning in stochastic dynamical systems, with the crucial ability to uniquely identify the ground truth representation. We validate our framework for different systems including a real-world fluorescent DNA movie dataset. We show that our algorithm can uniquely identify orthogonal, isometric and meaningful latent representations.

PDF Abstract

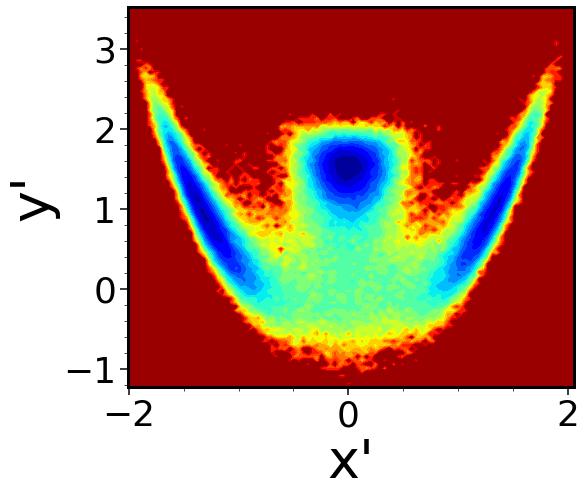

dSprites

dSprites