Integrating Mamba Sequence Model and Hierarchical Upsampling Network for Accurate Semantic Segmentation of Multiple Sclerosis Lesion

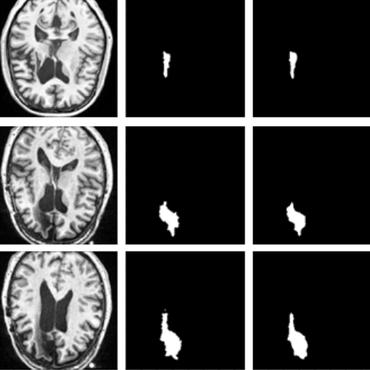

Combining components from convolutional neural networks (CNNs) and state space models (SSMs) is a compelling way to improve both the accuracy and the efficiency of medical image segmentation. We introduce Mamba HUNet, a novel architecture tailored for robust and efficient segmentation. Building on Mamba UNet and a lighter version of the Hierarchical Upsampling Network (HUNet), Mamba HUNet combines the local feature extraction power of CNNs with the long-range dependency modeling of SSMs. We first converted HUNet into a lighter version that maintains performance parity with the original, and then integrated this lighter HUNet into Mamba HUNet to further improve efficiency. The architecture partitions input grayscale images into patches and flattens them into 1D sequences, as Vision Transformers and Mamba models do, for efficient processing. Visual State Space blocks and patch merging layers then extract hierarchical features while preserving spatial information. Experimental results on publicly available Magnetic Resonance Imaging scans, notably for Multiple Sclerosis lesion segmentation, demonstrate Mamba HUNet's effectiveness across diverse segmentation tasks. The model's robustness and flexibility underscore its potential for handling complex anatomical structures. These findings establish Mamba HUNet as a promising approach to advancing medical image segmentation, with implications for improving clinical decision-making.
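To make the patch partitioning and patch merging steps concrete, the following is a minimal NumPy sketch, not the authors' implementation. The function names `image_to_patch_sequence` and `merge_patches`, the patch size of 4, and the 2x2 merging scheme are assumptions chosen for illustration; the abstract does not specify them. The merging function only shows the spatial regrouping used in Swin-style patch merging and omits the normalization and learned linear projection that a real layer would apply.

```python
import numpy as np


def image_to_patch_sequence(image, patch_size=4):
    """Split a 2D grayscale image into non-overlapping square patches
    and flatten each patch, giving a (num_patches, patch_size**2) sequence.

    patch_size=4 is an illustrative assumption, not a value from the paper.
    """
    h, w = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    grid_h, grid_w = h // patch_size, w // patch_size
    # (grid_h, patch, grid_w, patch) -> (grid_h, grid_w, patch, patch)
    patches = image.reshape(grid_h, patch_size, grid_w, patch_size)
    patches = patches.transpose(0, 2, 1, 3)
    # Row-major order over the patch grid yields the 1D token sequence.
    return patches.reshape(grid_h * grid_w, patch_size * patch_size)


def merge_patches(tokens, grid_h, grid_w):
    """Concatenate each 2x2 neighbourhood of tokens along the feature axis.

    This halves the spatial resolution and quadruples the feature size.
    A real patch merging layer would follow this with normalization and a
    learned linear projection, both omitted here.
    """
    channels = tokens.shape[1]
    grid = tokens.reshape(grid_h, grid_w, channels)
    merged = np.concatenate(
        [grid[0::2, 0::2], grid[1::2, 0::2], grid[0::2, 1::2], grid[1::2, 1::2]],
        axis=-1,
    )
    return merged.reshape((grid_h // 2) * (grid_w // 2), 4 * channels)


# Hypothetical 8x8 "scan" with distinct pixel values for easy inspection.
image = np.arange(64).reshape(8, 8)
sequence = image_to_patch_sequence(image, patch_size=2)  # 16 tokens of 4 pixels
merged = merge_patches(sequence, grid_h=4, grid_w=4)     # 4 tokens of 16 values
```

Because each token keeps its position in the row-major patch grid, the spatial layout can be recovered by reshaping the sequence back to `(grid_h, grid_w, features)`, which is what allows hierarchical stages to reduce resolution without discarding spatial information.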
