HoughNet: Integrating near and long-range evidence for bottom-up object detection

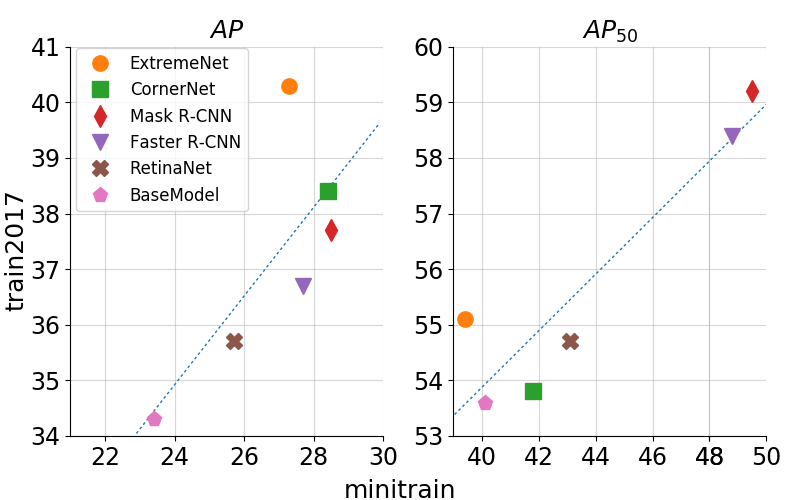

This paper presents HoughNet, a one-stage, anchor-free, voting-based, bottom-up object detection method. Inspired by the Generalized Hough Transform, HoughNet determines the presence of an object at a given location by summing the votes cast on that location. Votes are collected from both near and long-distance locations based on a log-polar vote field. Thanks to this voting mechanism, HoughNet is able to integrate both near and long-range, class-conditional evidence for visual recognition, thereby generalizing and enhancing current object detection methodology, which typically relies only on local evidence. On the COCO dataset, HoughNet's best model achieves 46.4 $AP$ (and 65.1 $AP_{50}$), performing on par with the state of the art in bottom-up object detection and outperforming most major one-stage and two-stage methods. We further validate the effectiveness of our proposal in another task, namely "labels to photo" image generation, by integrating the voting module of HoughNet into two different GAN models and showing that accuracy is significantly improved in both cases. Code is available at https://github.com/nerminsamet/houghnet.
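The voting idea described above can be illustrated with a small NumPy sketch. This is not HoughNet's implementation (the linked repository has that); the vote-field layout here is a hypothetical choice for illustration: one central region plus `num_rings` rings whose outer radii double starting from `r0`, each split into `num_angles` angular sectors. The names `log_polar_region` and `cast_votes`, and the assumed evidence layout `(C, R, H, W)` (classes, vote-field regions, height, width), are likewise illustrative assumptions. Each location's score is the sum of the class-conditional evidence that every other location casts toward it.

```python
import numpy as np


def log_polar_region(dy, dx, num_rings, num_angles, r0=1.0):
    """Map a voter-to-target displacement to a region index in a hypothetical
    log-polar vote field. Region 0 is the center (r < r0); rings grow
    geometrically (ring i covers r0 * 2**(i-1) <= r < r0 * 2**i).
    Returns -1 if the displacement lies outside the field."""
    r = np.hypot(dy, dx)
    if r < r0:
        return 0
    ring = int(np.floor(np.log2(r / r0))) + 1
    if ring > num_rings:
        return -1
    angle = np.arctan2(dy, dx) % (2 * np.pi)
    # Modulo guards against floating-point rounding up to num_angles.
    sector = int(angle // (2 * np.pi / num_angles)) % num_angles
    return 1 + (ring - 1) * num_angles + sector


def cast_votes(evidence, num_rings, num_angles, r0=1.0):
    """evidence[c, k, vy, vx]: how strongly the voter at (vy, vx) supports an
    object of class c whose location falls in region k relative to the voter.
    Returns a (C, H, W) map where each target sums the votes cast on it."""
    C, R, H, W = evidence.shape
    if R != 1 + num_rings * num_angles:
        raise ValueError("region axis does not match the vote-field layout")
    votes = np.zeros((C, H, W), dtype=float)
    # Loop over every displacement d = target - voter that fits in the image.
    for dy in range(-(H - 1), H):
        for dx in range(-(W - 1), W):
            k = log_polar_region(dy, dx, num_rings, num_angles, r0)
            if k < 0:
                continue
            # Voters whose target (voter + d) stays inside the image.
            vy0, vy1 = max(0, -dy), min(H, H - dy)
            vx0, vx1 = max(0, -dx), min(W, W - dx)
            votes[:, vy0 + dy:vy1 + dy, vx0 + dx:vx1 + dx] += (
                evidence[:, k, vy0:vy1, vx0:vx1]
            )
    return votes


if __name__ == "__main__":
    # One voter at (2, 2) pointing into ring 1, sector 0 (offsets (0, 1), (1, 1)).
    ev = np.zeros((1, 9, 5, 5))
    ev[0, 1, 2, 2] = 1.0
    print(cast_votes(ev, num_rings=2, num_angles=4)[0])
```

Because ring widths grow geometrically, a fixed number of regions describes nearby displacements finely and distant ones coarsely, which is what lets a log-polar field gather both near and long-range evidence at modest cost.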

ECCV 2020

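| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Object Detection | COCO minival | HoughNet (HG-104, MS) | box AP | 46.1 | #101 |
| Object Detection | COCO minival | HoughNet (HG-104, MS) | AP50 | 64.6 | #38 |
| Object Detection | COCO minival | HoughNet (HG-104, MS) | AP75 | 50.3 | #30 |
| Object Detection | COCO minival | HoughNet (HG-104, MS) | APS | 30.0 | #17 |
| Object Detection | COCO minival | HoughNet (HG-104, MS) | APM | 48.8 | #23 |
| Object Detection | COCO minival | HoughNet (HG-104, MS) | APL | 59.7 | #29 |
| Object Detection | COCO minival | HoughNet (HG-104) | box AP | 43.0 | #134 |
| Object Detection | COCO minival | HoughNet (HG-104) | AP50 | 62.2 | #60 |
| Object Detection | COCO minival | HoughNet (HG-104) | AP75 | 46.9 | #48 |
| Object Detection | COCO minival | HoughNet (HG-104) | APS | 25.5 | #46 |
| Object Detection | COCO minival | HoughNet (HG-104) | APM | 47.6 | #33 |
| Object Detection | COCO minival | HoughNet (HG-104) | APL | 55.8 | #55 |
| Object Detection | COCO test-dev | HoughNet (MS) | box mAP | 46.4 | #110 |
| Object Detection | COCO test-dev | HoughNet (MS) | AP50 | 65.1 | #68 |
| Object Detection | COCO test-dev | HoughNet (MS) | AP75 | 50.7 | #63 |
| Object Detection | COCO test-dev | HoughNet (MS) | APS | 29.1 | #54 |
| Object Detection | COCO test-dev | HoughNet (MS) | APM | 48.5 | #65 |
| Object Detection | COCO test-dev | HoughNet (MS) | APL | 58.1 | #61 |
| Object Detection | COCO test-dev | HoughNet (MS) | Hardware Burden | None | #1 |
| Object Detection | COCO test-dev | HoughNet (MS) | Operations per network pass | None | #1 |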

Datasets

MS COCO

Cityscapes