Guarding Barlow Twins Against Overfitting with Mixed Samples

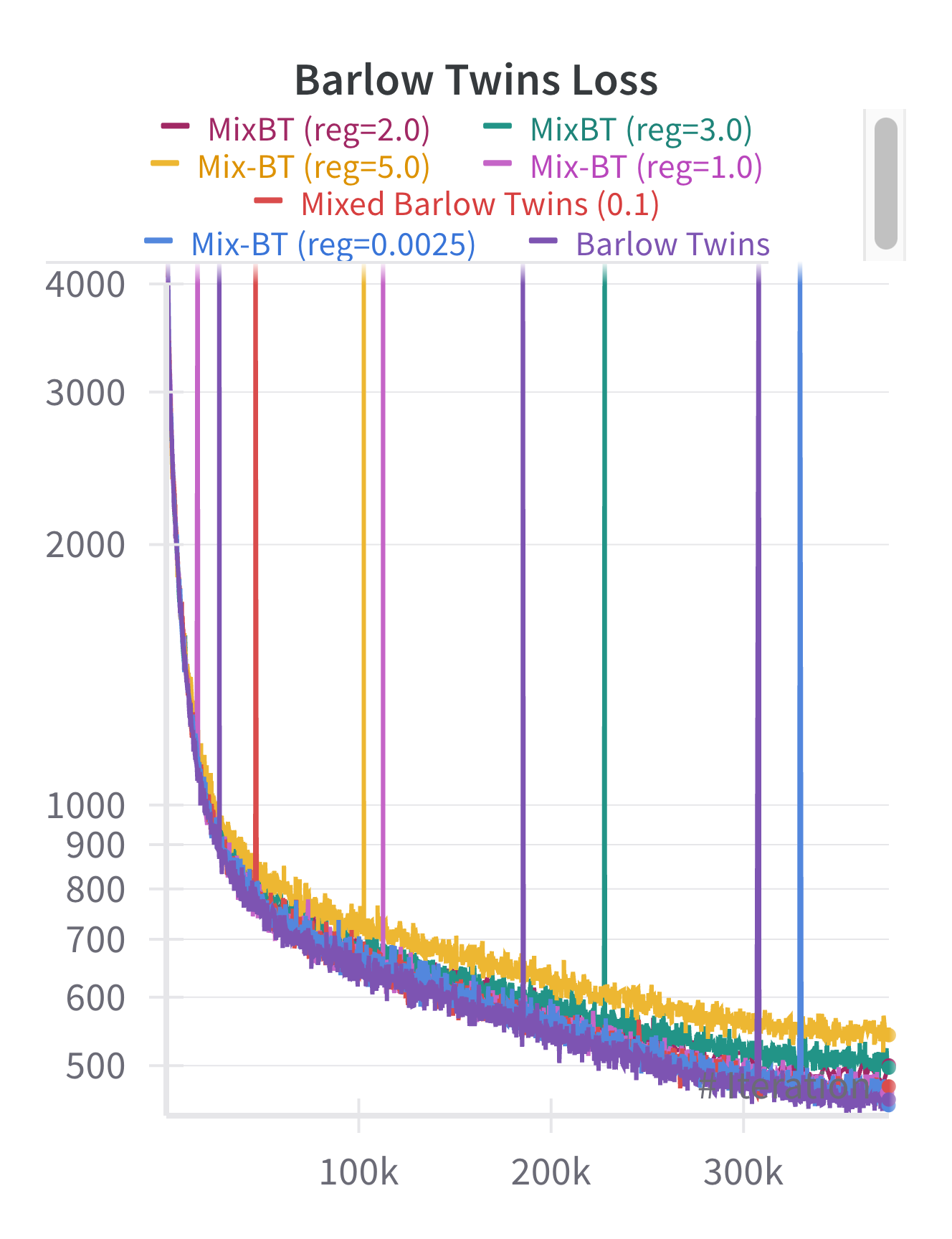

Self-supervised learning (SSL) aims to learn transferable feature representations for downstream applications without relying on labeled data. The Barlow Twins algorithm, widely adopted and simpler to implement than counterparts such as contrastive learning methods, minimizes feature redundancy while maximizing invariance to common corruptions. Optimizing this objective forces the network to learn useful representations while avoiding noisy or constant features, which improves downstream task performance with limited adaptation. Despite Barlow Twins' proven effectiveness in pre-training, the underlying SSL objective can inadvertently cause feature overfitting because, unlike contrastive learning approaches, it lacks strong interaction between samples. In our experiments, we observe that optimizing the Barlow Twins objective does not guarantee sustained improvements in representation quality beyond a certain pre-training phase, and can even degrade downstream performance on some datasets. To address this challenge, we introduce Mixed Barlow Twins, which improves sample interaction during Barlow Twins training via linearly interpolated samples. This adds a regularization term to the original Barlow Twins objective, under the assumption that linear interpolation in the input space translates to linear interpolation in the feature space. Pre-training with this regularization effectively mitigates feature overfitting and further improves downstream performance on the CIFAR-10, CIFAR-100, TinyImageNet, STL-10, and ImageNet datasets. The code and checkpoints are available at: https://github.com/wgcban/mix-bt.git
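The following is a minimal pure-Python sketch of the two ingredients the abstract describes. It is not the authors' implementation, which lives in the linked repository. The Barlow Twins term uses the standard form. Each feature is standardized over the batch, and the cross-correlation matrix C is formed between the two views' embeddings. The loss is the sum over i of (1 - C_ii)^2 plus λ times the sum of squared off-diagonal entries.

The mixing term is an assumption built only from the abstract's stated premise. Mixing inputs as x_m = α·x1 + (1 - α)·x2[π] should give features z_m ≈ α·z1 + (1 - α)·z2[π]. If so, C(z_m, z1) should be close to α·C(z1, z1) + (1 - α)·C(z2[π], z1), and the sketch penalizes the squared gap. Everything below that is not in the abstract is hypothetical and chosen for illustration:

- the function names (`standardize`, `cross_corr`, `mix_regularizer`);
- the default `lambd=5e-3`;
- the Beta(1, 1) draw for α;
- the toy linear encoder;
- the unit weight on the regularizer.

```python
import random
from typing import Callable, List, Sequence

Matrix = List[List[float]]


def standardize(z: Matrix, eps: float = 1e-8) -> Matrix:
    """Normalize each feature column to zero mean and unit (population) std over the batch."""
    n, d = len(z), len(z[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [z[b][j] for b in range(n)]
        mean = sum(col) / n
        std = (sum((v - mean) ** 2 for v in col) / n) ** 0.5
        for b in range(n):
            out[b][j] = (col[b] - mean) / (std + eps)
    return out


def cross_corr(a: Matrix, b: Matrix) -> Matrix:
    """C[i][j] = (1/N) * sum_n a[n][i] * b[n][j]; inputs are assumed already standardized."""
    n, d = len(a), len(a[0])
    return [[sum(a[k][i] * b[k][j] for k in range(n)) / n for j in range(d)]
            for i in range(d)]


def barlow_twins_loss(z1: Matrix, z2: Matrix, lambd: float = 5e-3) -> float:
    """Invariance term pulls diag(C) toward 1; redundancy term pushes off-diagonals toward 0."""
    c = cross_corr(standardize(z1), standardize(z2))
    d = len(c)
    on_diag = sum((1.0 - c[i][i]) ** 2 for i in range(d))
    off_diag = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)
    return on_diag + lambd * off_diag


def mix_regularizer(encoder: Callable[[Matrix], Matrix], x1: Matrix, x2: Matrix,
                    alpha: float, perm: Sequence[int]) -> float:
    """HYPOTHETICAL regularizer: squared gap between C(z_m, z_1) and the value predicted
    by linear feature interpolation, alpha*C(z_1, z_1) + (1 - alpha)*C(z_2[perm], z_1)."""
    # Mix view 1 with a shuffled copy of view 2 in input space.
    xm = [[alpha * u + (1.0 - alpha) * v for u, v in zip(x1[n], x2[perm[n]])]
          for n in range(len(x1))]
    z1, z2, zm = (standardize(encoder(x)) for x in (x1, x2, xm))
    z2p = [z2[p] for p in perm]  # view-2 features in the same shuffled order
    c11, c21 = cross_corr(z1, z1), cross_corr(z2p, z1)
    target = [[alpha * a + (1.0 - alpha) * b for a, b in zip(r11, r21)]
              for r11, r21 in zip(c11, c21)]
    actual = cross_corr(zm, z1)
    return sum((a - t) ** 2 for ra, rt in zip(actual, target) for a, t in zip(ra, rt))


# --- Toy usage (all data and the encoder are hypothetical stand-ins) ---
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(4)]  # 4 input dims -> 3 features


def encoder(x: Matrix) -> Matrix:
    """Toy linear encoder standing in for a ResNet backbone plus projector."""
    return [[sum(xi * W[i][j] for i, xi in enumerate(row)) for j in range(3)] for row in x]


x1 = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(8)]
x2 = [[v + 0.1 * rng.gauss(0, 1) for v in row] for row in x1]  # stand-in for a second augmented view
alpha = rng.betavariate(1.0, 1.0)  # assumed Beta(1, 1) mixing coefficient
perm = rng.sample(range(len(x1)), len(x1))  # random permutation of the batch
loss = barlow_twins_loss(encoder(x1), encoder(x2)) + 1.0 * mix_regularizer(encoder, x1, x2, alpha, perm)
```

Setting `alpha = 1.0` makes the mixed batch identical to the first view, so the sketched regularizer is exactly zero. A real training loop would compute these quantities with an autodiff framework on minibatches of augmented images rather than with nested lists.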


| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Self-Supervised Learning | CIFAR-10 | ResNet18 | average top-1 classification accuracy | 92.58 | #2 |
| Self-Supervised Learning | CIFAR-10 | ResNet50 | average top-1 classification accuracy | 93.89 | #1 |
| Self-Supervised Learning | CIFAR-100 | ResNet50 | average top-1 classification accuracy | 72.51 | #1 |
| Self-Supervised Learning | CIFAR-100 | ResNet18 | average top-1 classification accuracy | 69.31 | #2 |
| Self-Supervised Learning | STL-10 | ResNet50 | Accuracy | 91.70 | #1 |
| Self-Supervised Learning | STL-10 | ResNet18 | Accuracy | 91.02 | #2 |
| Self-Supervised Learning | TinyImageNet | ResNet50 | average top-1 classification accuracy | 51.84 | #2 |
| Self-Supervised Learning | TinyImageNet | ResNet18 | average top-1 classification accuracy | 51.67 | #1 |

Datasets: CIFAR-10, ImageNet, CIFAR-100, Fashion-MNIST, STL-10, Tiny ImageNet, DTD