Glow: Generative Flow with Invertible 1x1 Convolutions

Flow-based generative models (Dinh et al., 2014) are conceptually attractive due to tractability of the exact log-likelihood, tractability of exact latent-variable inference, and parallelizability of both training and synthesis. In this paper we propose Glow, a simple type of generative flow using an invertible 1x1 convolution. Using our method we demonstrate a significant improvement in log-likelihood on standard benchmarks. Perhaps most strikingly, we demonstrate that a generative model optimized towards the plain log-likelihood objective is capable of efficient realistic-looking synthesis and manipulation of large images. The code for our model is available at https://github.com/openai/glow
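As an illustration of the invertible 1x1 convolution named in the abstract, here is a minimal NumPy sketch, not the code from the linked repository. It treats the layer as one square channel-mixing matrix applied at every spatial position, so the inverse and the log-determinant term needed for the exact log-likelihood are cheap to compute. The function names, the `(height, width, channels)` tensor layout, and the QR-based orthogonal initialization are assumptions made for this example.

```python
import numpy as np


def invertible_1x1_conv(x, weight):
    """Forward pass of a 1x1 convolution on x with shape (height, width, channels).

    `weight` is a square (channels x channels) matrix shared by all positions.
    Returns the output and the log-determinant of the Jacobian,
    height * width * log|det(weight)|.
    """
    height, width, _ = x.shape
    z = x @ weight.T  # mixes channels independently at each pixel
    _, log_abs_det = np.linalg.slogdet(weight)
    return z, height * width * log_abs_det


def invertible_1x1_conv_inverse(z, weight):
    """Exact inverse: apply the inverse matrix at each pixel."""
    return z @ np.linalg.inv(weight).T


rng = np.random.default_rng(0)
channels = 4

# Assumed initialization: a random orthogonal matrix, so log|det| starts at 0.
weight, _ = np.linalg.qr(rng.normal(size=(channels, channels)))

x = rng.normal(size=(8, 8, channels))
z, logdet = invertible_1x1_conv(x, weight)
x_reconstructed = invertible_1x1_conv_inverse(z, weight)
```

Because the same matrix acts on every pixel, the Jacobian is block diagonal with one copy of `weight` per spatial position, which is why the log-determinant scales with `height * width`.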

NeurIPS 2018

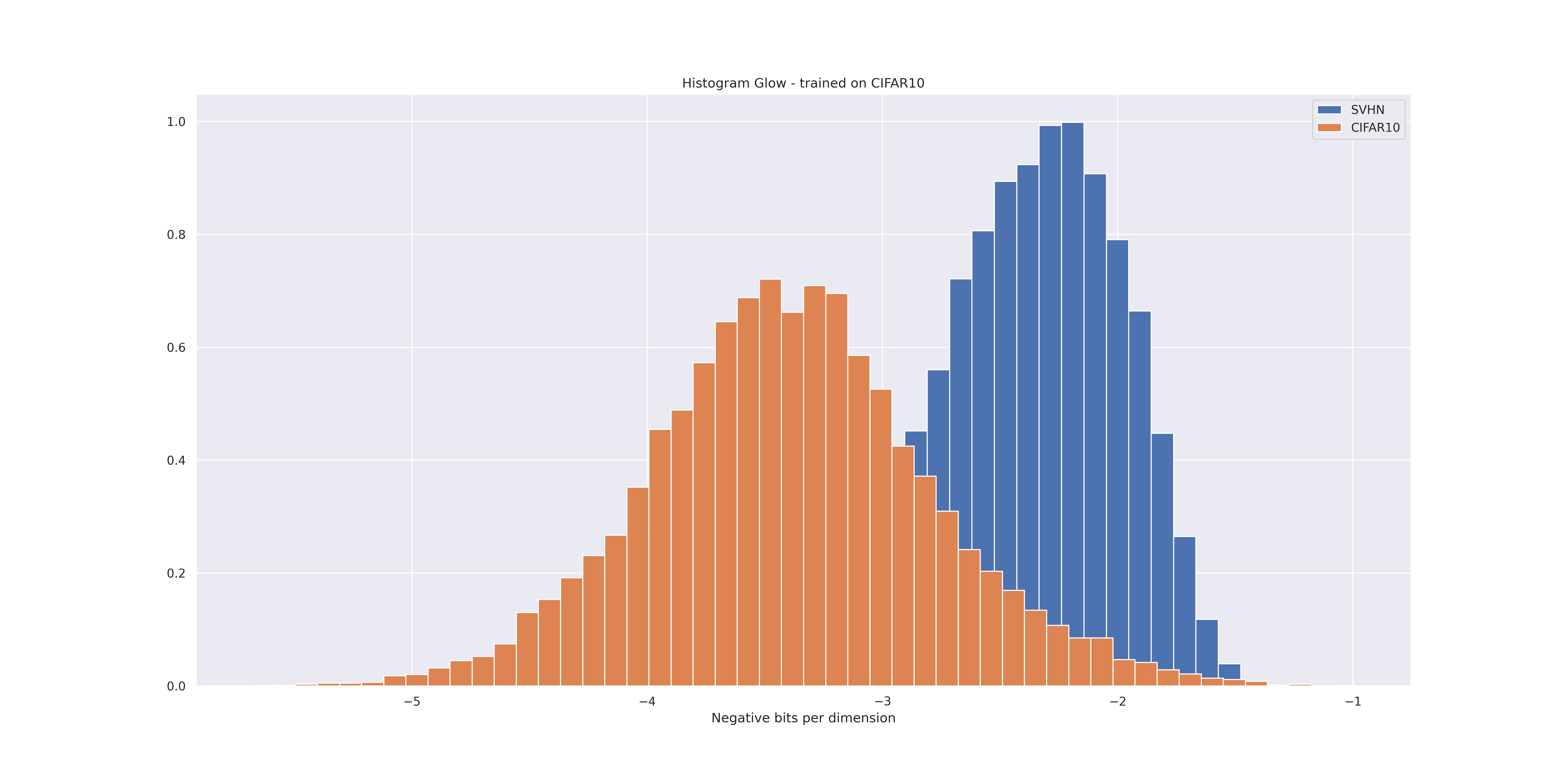

CIFAR-10

CelebA

CelebA-HQ

LSUN
