Generative Low-bitwidth Data Free Quantization

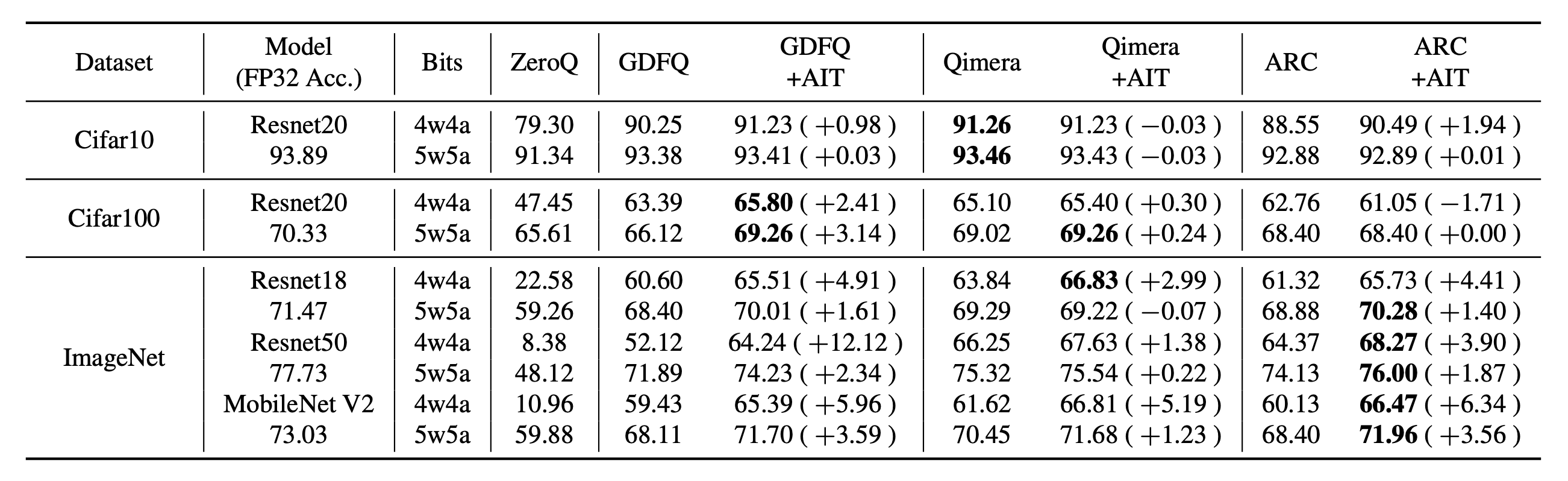

Neural network quantization is an effective way to compress deep models and improve their execution latency and energy efficiency, so that they can be deployed on mobile or embedded devices. Existing quantization methods require the original data for calibration or fine-tuning to achieve good performance. However, in many real-world scenarios, the data may not be available due to confidentiality or privacy concerns, making existing quantization methods inapplicable. Moreover, in the absence of original data, recently developed generative adversarial networks (GANs) cannot be applied to generate data. Although the full-precision model may contain rich data information, such information alone is hard to exploit for recovering the original data or generating new meaningful data. In this paper, we investigate a simple-yet-effective method called Generative Low-bitwidth Data Free Quantization (GDFQ) to remove the burden of data dependence. Specifically, we propose a knowledge matching generator that produces meaningful fake data by exploiting the classification boundary knowledge and distribution information in the pre-trained model. With the help of the generated data, we can quantize a model by learning knowledge from the pre-trained model. Extensive experiments on three datasets demonstrate the effectiveness of our method. More critically, our method achieves much higher accuracy on 4-bit quantization than the existing data-free quantization method. Code is available at https://github.com/xushoukai/GDFQ.
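For readers unfamiliar with what low-bitwidth quantization does to a model's weights, the following is a minimal sketch of generic uniform quantization at 4 bits. It is not the GDFQ generator or training procedure, and it is not taken from the linked repository; the function name `fake_quantize`, the min/max range selection, and the sample weights are all assumptions made for illustration.

```python
def fake_quantize(values, num_bits=4):
    """Uniformly quantize a list of floats, then map them back to floats.

    Illustrative only: the range is taken from the min and max of the
    input, which is one common choice and an assumption here.
    """
    if not values:
        return []
    levels = 2 ** num_bits - 1          # 15 steps, i.e. 16 grid points at 4 bits
    lo, hi = min(values), max(values)
    if hi == lo:                        # constant input: nothing to quantize
        return list(values)
    scale = (hi - lo) / levels          # width of one quantization step
    out = []
    for v in values:
        code = round((v - lo) / scale)  # nearest integer code
        code = max(0, min(levels, code))
        out.append(lo + code * scale)   # dequantized value on the grid
    return out


# Hypothetical weights, chosen only to exercise the function.
weights = [-1.0, -0.37, 0.02, 0.31, 0.5]
quantized = fake_quantize(weights, num_bits=4)
max_error = max(abs(w - q) for w, q in zip(weights, quantized))
```

With 4 bits, every weight is snapped to one of at most 16 values, so the rounding error per weight is bounded by half a step. Recovering the accuracy lost to this rounding normally needs calibration or fine-tuning data, which is the dependence the abstract sets out to remove.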

ECCV 2020

CIFAR-10

CIFAR-100
