Flow-based Video Segmentation for Human Head and Shoulders

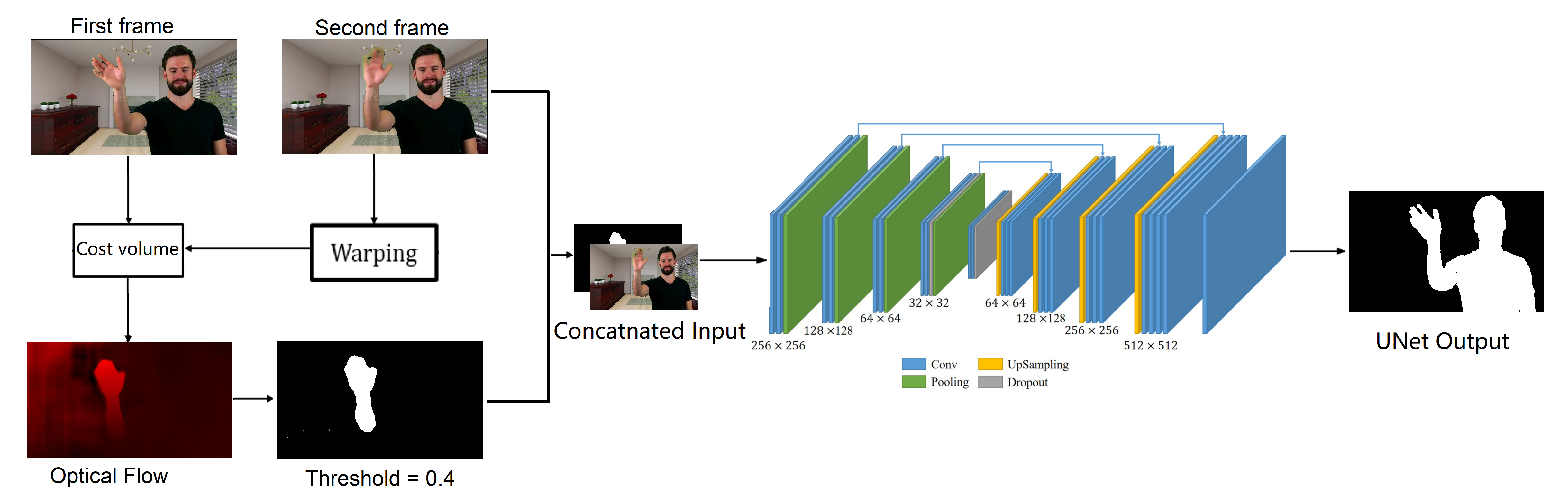

Video segmentation of the human head and shoulders is essential for creating elegant media in videoconferencing and virtual reality applications. The main challenges are performing high-quality background subtraction in real time and handling segmentation under motion blur, e.g., when a participant shakes their head or waves their hands during a video conference. To overcome the motion blur problem in video segmentation, we propose a novel flow-based encoder-decoder network (FUNet) that combines the traditional Horn-Schunck optical-flow estimation technique with convolutional neural networks to perform robust real-time video segmentation. We also introduce a video and image segmentation dataset: ConferenceVideoSegmentationDataset. Code and pre-trained models are available in our GitHub repository (https://github.com/kuangzijian/Flow-Based-Video-Matting).
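For readers unfamiliar with the optical-flow component named in the abstract, the following is a minimal NumPy sketch of the classical Horn-Schunck iteration between two grayscale frames. It is not taken from the FUNet code: the function names, the `alpha` and `n_iter` parameters, and the use of `np.gradient` for the spatial derivatives (instead of the original paper's cube stencils) are assumptions made for illustration only.

```python
import numpy as np


def _neighbor_average(f):
    """Weighted average of the 8 neighbours (Horn-Schunck weights), edge-padded."""
    p = np.pad(f, 1, mode="edge")
    direct = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
    diagonal = p[:-2, :-2] + p[:-2, 2:] + p[2:, :-2] + p[2:, 2:]
    return direct / 6.0 + diagonal / 12.0


def horn_schunck(frame1, frame2, alpha=1.0, n_iter=100):
    """Estimate dense flow (u, v) from frame1 to frame2.

    alpha weights the smoothness term; n_iter is the number of
    Jacobi-style update sweeps. Both defaults are illustrative.
    """
    f1 = np.asarray(frame1, dtype=np.float64)
    f2 = np.asarray(frame2, dtype=np.float64)

    # Spatial derivatives on the averaged frame, temporal derivative as a difference.
    Iy, Ix = np.gradient(0.5 * (f1 + f2))  # axis 0 is y (rows), axis 1 is x (columns)
    It = f2 - f1

    u = np.zeros_like(f1)  # horizontal flow
    v = np.zeros_like(f1)  # vertical flow
    denom = alpha ** 2 + Ix ** 2 + Iy ** 2

    for _ in range(n_iter):
        u_bar = _neighbor_average(u)
        v_bar = _neighbor_average(v)
        # Residual of the brightness-constancy constraint at the averaged flow.
        t = (Ix * u_bar + Iy * v_bar + It) / denom
        u = u_bar - Ix * t
        v = v_bar - Iy * t
    return u, v
```

In a flow-based segmentation pipeline, a flow field like `(u, v)` would typically be supplied to the network alongside the RGB frame; how FUNet actually fuses the two is described in the paper and repository, not in this sketch.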

Datasets

Introduced in the Paper:

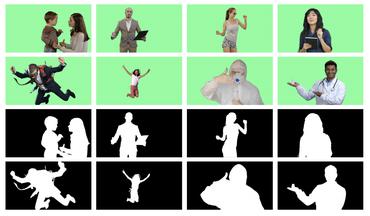

ConferenceVideoSegmentationDataset