FedBEVT: Federated Learning Bird's Eye View Perception Transformer in Road Traffic Systems

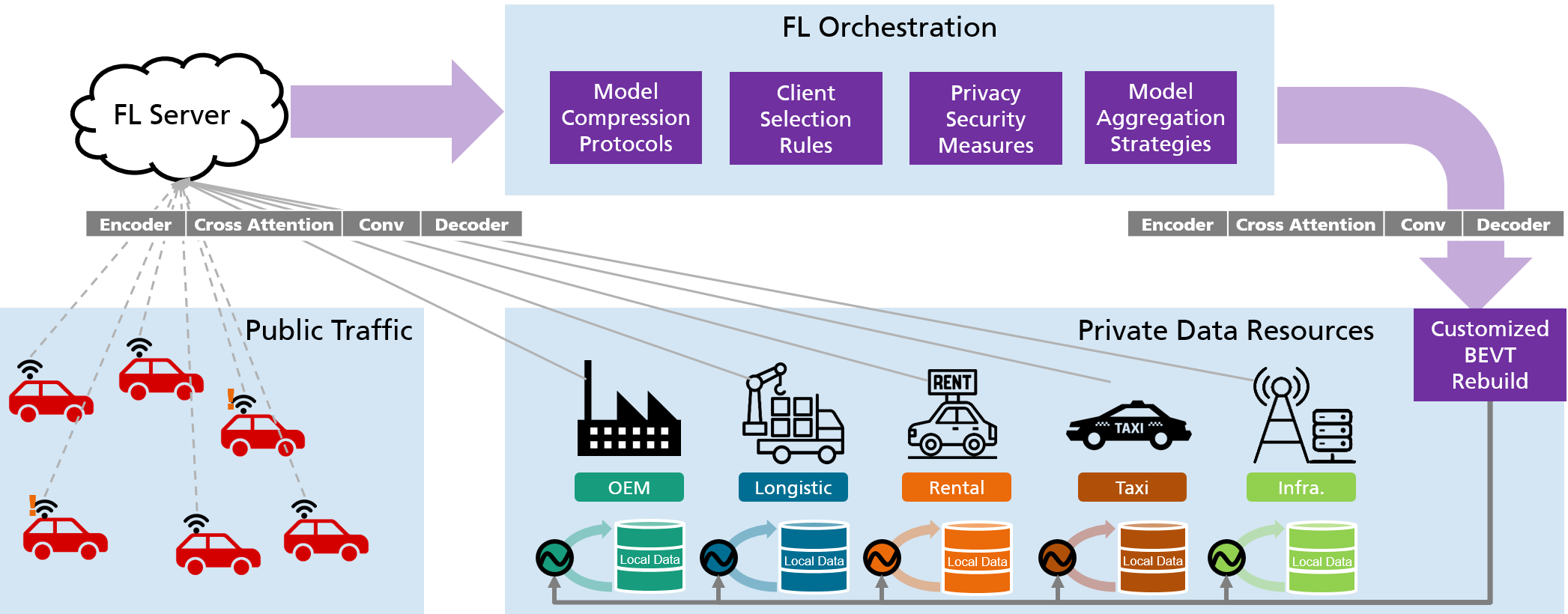

Bird's eye view (BEV) perception is becoming increasingly important in the field of autonomous driving. It uses multi-view camera data to learn a transformer model that directly projects the perception of the road environment onto the BEV perspective. However, training a transformer model often requires a large amount of data, and because camera data for road traffic are often private, they are typically not shared. Federated learning offers a solution: clients collaborate to train models by exchanging model parameters rather than data. In this paper, we introduce FedBEVT, a federated transformer learning approach for BEV perception. To address two common data heterogeneity issues in FedBEVT, (i) diverse sensor poses and (ii) varying sensor numbers in perception systems, we propose two approaches: Federated Learning with Camera-Attentive Personalization (FedCaP) and Adaptive Multi-Camera Masking (AMCM), respectively. To evaluate our method in real-world settings, we create a dataset consisting of four typical federated use cases. Our findings suggest that FedBEVT outperforms the baseline approaches in all four use cases, demonstrating the potential of our approach for improving BEV perception in autonomous driving.
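The parameter-exchange idea in the abstract can be illustrated with a minimal sketch. It is not the FedBEVT implementation: it shows generic weighted federated averaging in which some parameters stay on the client, which is one plausible reading of "camera-attentive personalization". The parameter names, the `camera.` prefix, and the helper functions `fed_avg` and `apply_global` are hypothetical and chosen only for illustration.

```python
from typing import Dict, List

# A model is represented as a mapping from parameter name to a flat list of floats.
Params = Dict[str, List[float]]


def fed_avg(
    client_params: List[Params],
    client_sizes: List[int],
    private_prefix: str = "camera.",  # hypothetical naming convention
) -> Params:
    """Average the shared parameters, weighted by each client's data size.

    Parameters whose names start with `private_prefix` are treated as
    client-specific and are left out of the aggregate (assumption).
    """
    total = sum(client_sizes)
    shared_keys = [k for k in client_params[0] if not k.startswith(private_prefix)]
    global_params: Params = {}
    for key in shared_keys:
        length = len(client_params[0][key])
        global_params[key] = [
            sum(p[key][i] * n for p, n in zip(client_params, client_sizes)) / total
            for i in range(length)
        ]
    return global_params


def apply_global(local: Params, global_params: Params) -> Params:
    """Overwrite the shared parameters of a client; keep its private ones."""
    updated = dict(local)
    updated.update(global_params)
    return updated


# Two clients with different amounts of data and different camera parameters.
clients = [
    {"encoder.w": [1.0, 2.0], "camera.pos": [0.1]},
    {"encoder.w": [3.0, 4.0], "camera.pos": [0.9]},
]
sizes = [1, 3]

aggregated = fed_avg(clients, sizes)
client0_next = apply_global(clients[0], aggregated)
```

Only `encoder.w` is sent to and returned from the server in this sketch; each client's `camera.pos` is never averaged, so raw camera data and camera-specific parameters both stay local.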


OPV2V