Feature Norm Regularized Federated Learning: Transforming Skewed Distributions into Global Insights

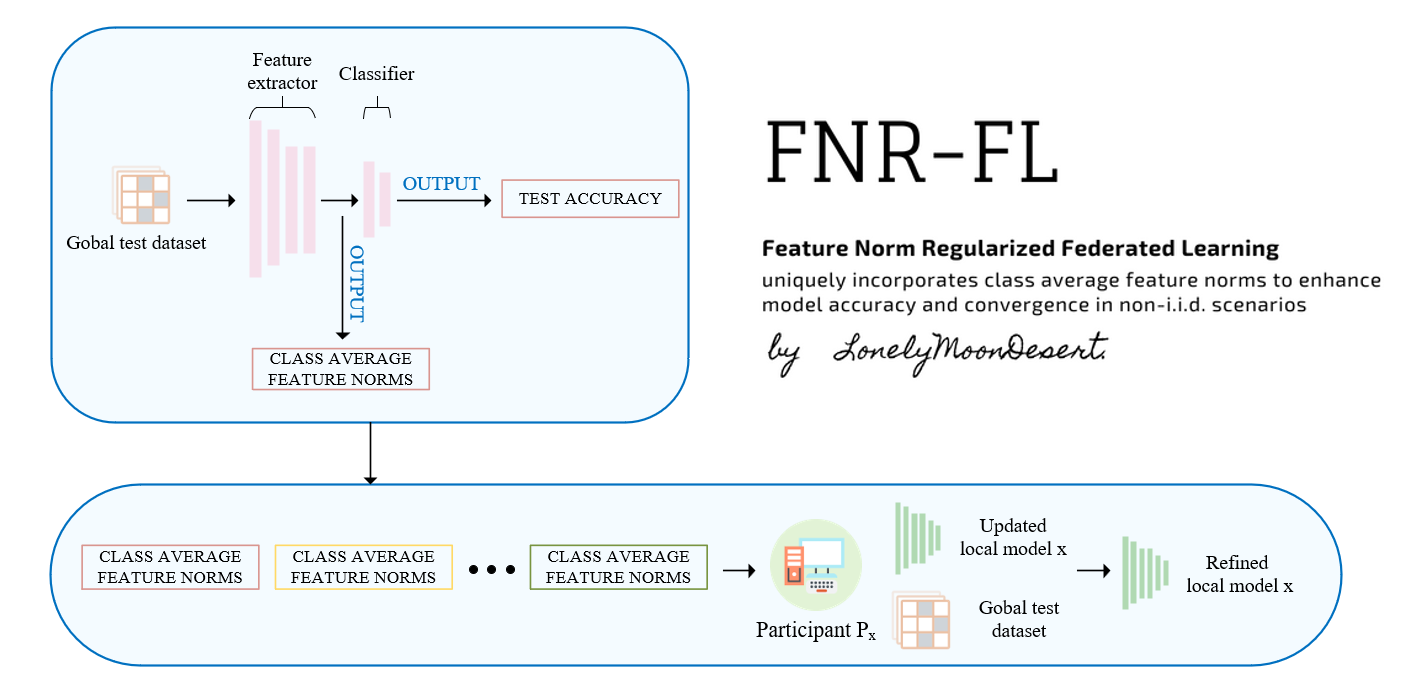

In the field of federated learning, addressing non-independent and identically distributed (non-i.i.d.) data remains a quintessential challenge for improving global model performance. This work introduces the Feature Norm Regularized Federated Learning (FNR-FL) algorithm, which uniquely incorporates class average feature norms to enhance model accuracy and convergence in non-i.i.d. scenarios. Our comprehensive analysis reveals that FNR-FL not only accelerates convergence but also significantly surpasses other contemporary federated learning algorithms in test accuracy, particularly under feature distribution skew scenarios. The novel modular design of FNR-FL facilitates seamless integration with existing federated learning frameworks, reinforcing its adaptability and potential for widespread application. We substantiate our claims through rigorous empirical evaluations, demonstrating FNR-FL's exceptional performance across various skewed data distributions. Relative to FedAvg, FNR-FL exhibits a substantial 66.24% improvement in accuracy and a significant 11.40% reduction in training time, underscoring its enhanced effectiveness and efficiency.
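The abstract does not spell out how class average feature norms are computed or how they enter the training objective. As a rough illustration only, the sketch below shows one plausible reading: the mean L2 norm of the feature vectors belonging to each class. The function names, the `reference` values, and the squared-gap penalty are hypothetical and are not taken from the paper; the actual FNR-FL regularizer may be defined differently.

```python
import math
from collections import defaultdict


def class_average_feature_norms(features, labels):
    """Return {class_label: mean L2 norm of that class's feature vectors}."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for vec, label in zip(features, labels):
        sums[label] += math.sqrt(sum(x * x for x in vec))
        counts[label] += 1
    return {c: sums[c] / counts[c] for c in sums}


def norm_gap_penalty(local_norms, reference_norms):
    """Hypothetical penalty (an assumption, not the paper's formula):
    mean squared gap between local and reference class-average norms,
    taken over the classes present in both dictionaries."""
    shared = sorted(set(local_norms) & set(reference_norms))
    if not shared:
        return 0.0
    gaps = [(local_norms[c] - reference_norms[c]) ** 2 for c in shared]
    return sum(gaps) / len(shared)


# Toy feature vectors standing in for a client's penultimate-layer outputs.
features = [[3.0, 4.0], [6.0, 8.0], [0.0, 1.0]]
labels = [0, 0, 1]

local = class_average_feature_norms(features, labels)
reference = {0: 7.0, 1: 1.0}  # made-up reference norms for illustration
penalty = norm_gap_penalty(local, reference)
print(local, penalty)
```

In a federated setting, a quantity like `penalty` could in principle be used to compare a client's feature statistics against some shared reference, but how FNR-FL does this is not stated in the abstract.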


CIFAR-10
