Exploring Modulated Detection Transformer as a Tool for Action Recognition in Videos

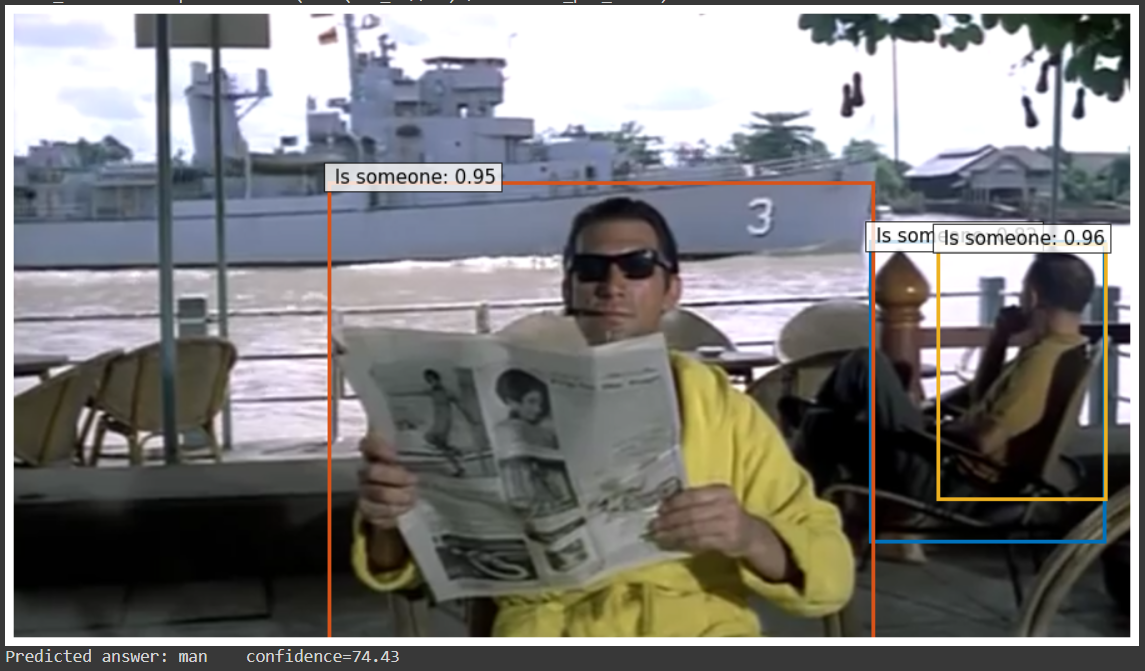

During recent years transformers architectures have been growing in popularity. Modulated Detection Transformer (MDETR) is an end-to-end multi-modal understanding model that performs tasks such as phase grounding, referring expression comprehension, referring expression segmentation, and visual question answering. One remarkable aspect of the model is the capacity to infer over classes that it was not previously trained for. In this work we explore the use of MDETR in a new task, action detection, without any previous training. We obtain quantitative results using the Atomic Visual Actions dataset. Although the model does not report the best performance in the task, we believe that it is an interesting finding. We show that it is possible to use a multi-modal model to tackle a task that it was not designed for. Finally, we believe that this line of research may lead into the generalization of MDETR in additional downstream tasks.

PDF Abstract