Evaluating Theory of Mind in Question Answering

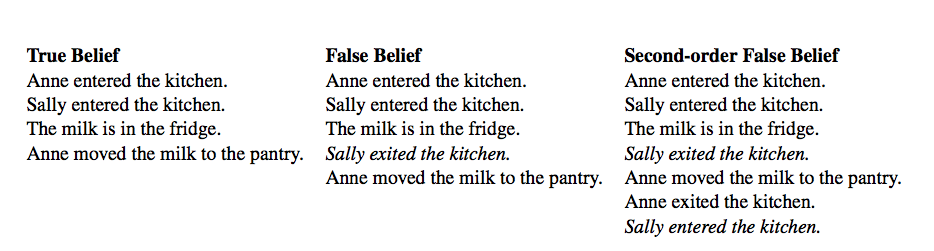

We propose a new dataset for evaluating question answering models with respect to their capacity to reason about beliefs. Our tasks are inspired by theory-of-mind experiments that examine whether children are able to reason about the beliefs of others, in particular when those beliefs differ from reality. We evaluate a number of recent neural models with memory augmentation. We find that all fail on our tasks, which require keeping track of inconsistent states of the world; moreover, the models' accuracy decreases notably when random sentences are introduced to the tasks at test time.
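To make "keeping track of inconsistent states of the world" concrete, here is a minimal sketch of a false-belief story in the style of the classic Sally-Anne test. It is an illustration only, not the dataset's format or any evaluated model: the `BeliefTracker` class, its methods, and the story itself are hypothetical names chosen for this example. The sketch assumes that an agent updates a belief only when present for an event.

```python
from dataclasses import dataclass, field


@dataclass
class BeliefTracker:
    """Hypothetical helper: tracks the true world state and each agent's beliefs."""
    world: dict = field(default_factory=dict)    # object -> true location
    present: set = field(default_factory=set)    # agents currently in the room
    beliefs: dict = field(default_factory=dict)  # agent -> {object: believed location}

    def enter(self, agent: str) -> None:
        # Entering does not reveal where hidden objects are.
        self.present.add(agent)
        self.beliefs.setdefault(agent, {})

    def exit(self, agent: str) -> None:
        self.present.discard(agent)

    def move(self, obj: str, location: str) -> None:
        # Reality always changes; only agents who witness the move update their belief.
        self.world[obj] = location
        for witness in self.present:
            self.beliefs[witness][obj] = location


tracker = BeliefTracker()
tracker.enter("Sally")
tracker.enter("Anne")
tracker.move("marble", "basket")  # both agents see the marble go into the basket
tracker.exit("Sally")
tracker.move("marble", "box")     # only Anne sees the marble moved to the box
tracker.enter("Sally")

reality = tracker.world["marble"]                   # where the marble really is
sally_belief = tracker.beliefs["Sally"]["marble"]   # where Sally will look for it
anne_belief = tracker.beliefs["Anne"]["marble"]     # Anne saw the move
```

Here the answer to "Where is the marble really?" and the answer to "Where will Sally look for the marble?" differ, so a model that stores only a single world state cannot answer both correctly.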

EMNLP 2018

ToM QA

bAbI
