Equivariant Hypergraph Neural Networks

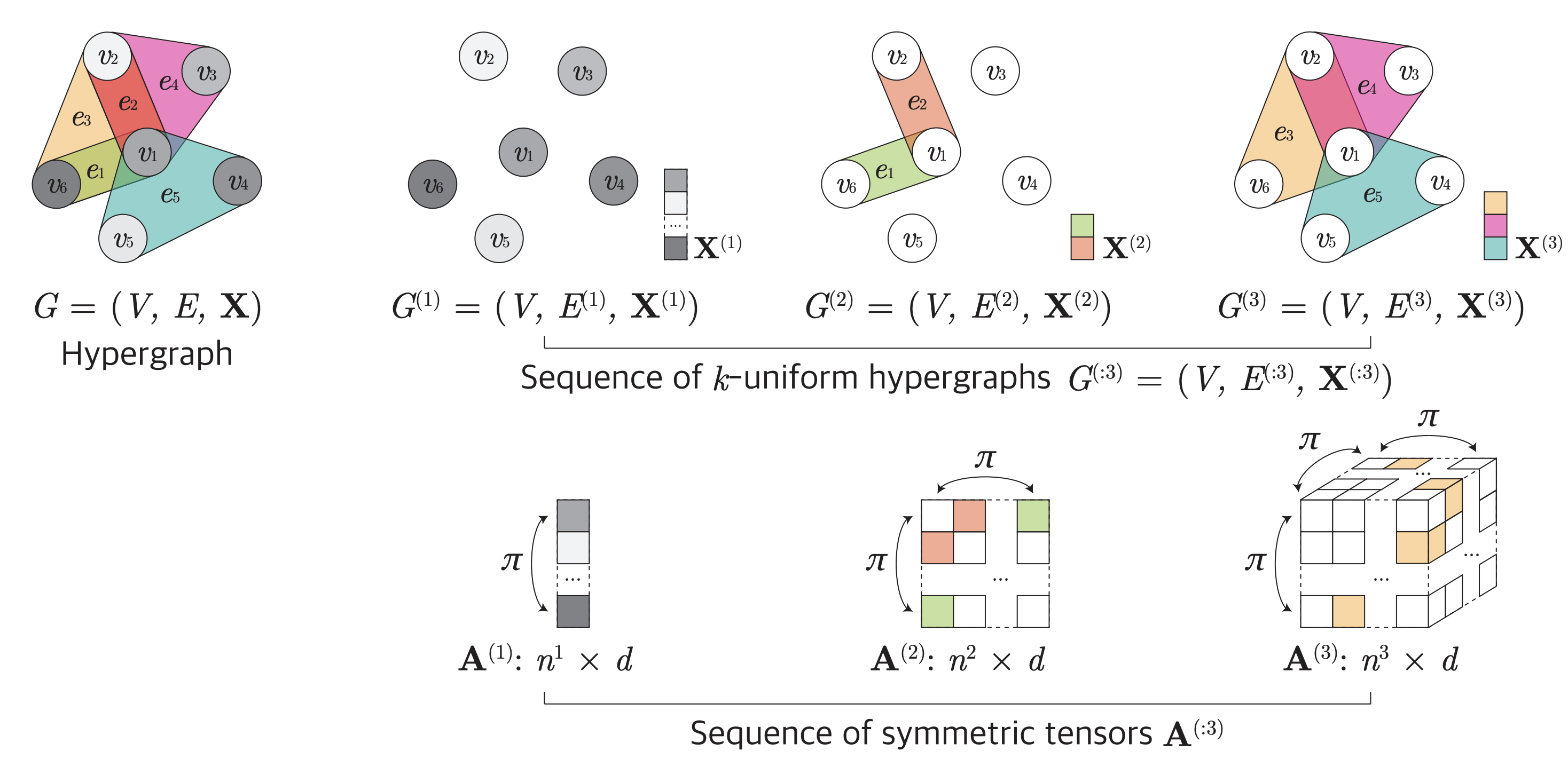

Many problems in computer vision and machine learning can be cast as learning on hypergraphs that represent higher-order relations. Recent approaches to hypergraph learning extend graph neural networks based on message passing, which is simple yet fundamentally limited in expressive power and in its ability to model long-range dependencies. Tensor-based equivariant neural networks, on the other hand, enjoy maximal expressiveness, but their application to hypergraphs has been limited by heavy computation and strict assumptions of fixed-order hyperedges. We resolve these problems and present the Equivariant Hypergraph Neural Network (EHNN), the first attempt to realize maximally expressive equivariant layers for general hypergraph learning. We also present two practical realizations of our framework, based on hypernetworks (EHNN-MLP) and self-attention (EHNN-Transformer), which are easy to implement and theoretically more expressive than most message passing approaches. We demonstrate their capability on a range of hypergraph learning problems, including synthetic k-edge identification, semi-supervised classification, and visual keypoint matching, and report improved performance over strong message passing baselines. Our implementation is available at https://github.com/jw9730/ehnn.
