Ensemble of MRR and NDCG models for Visual Dialog

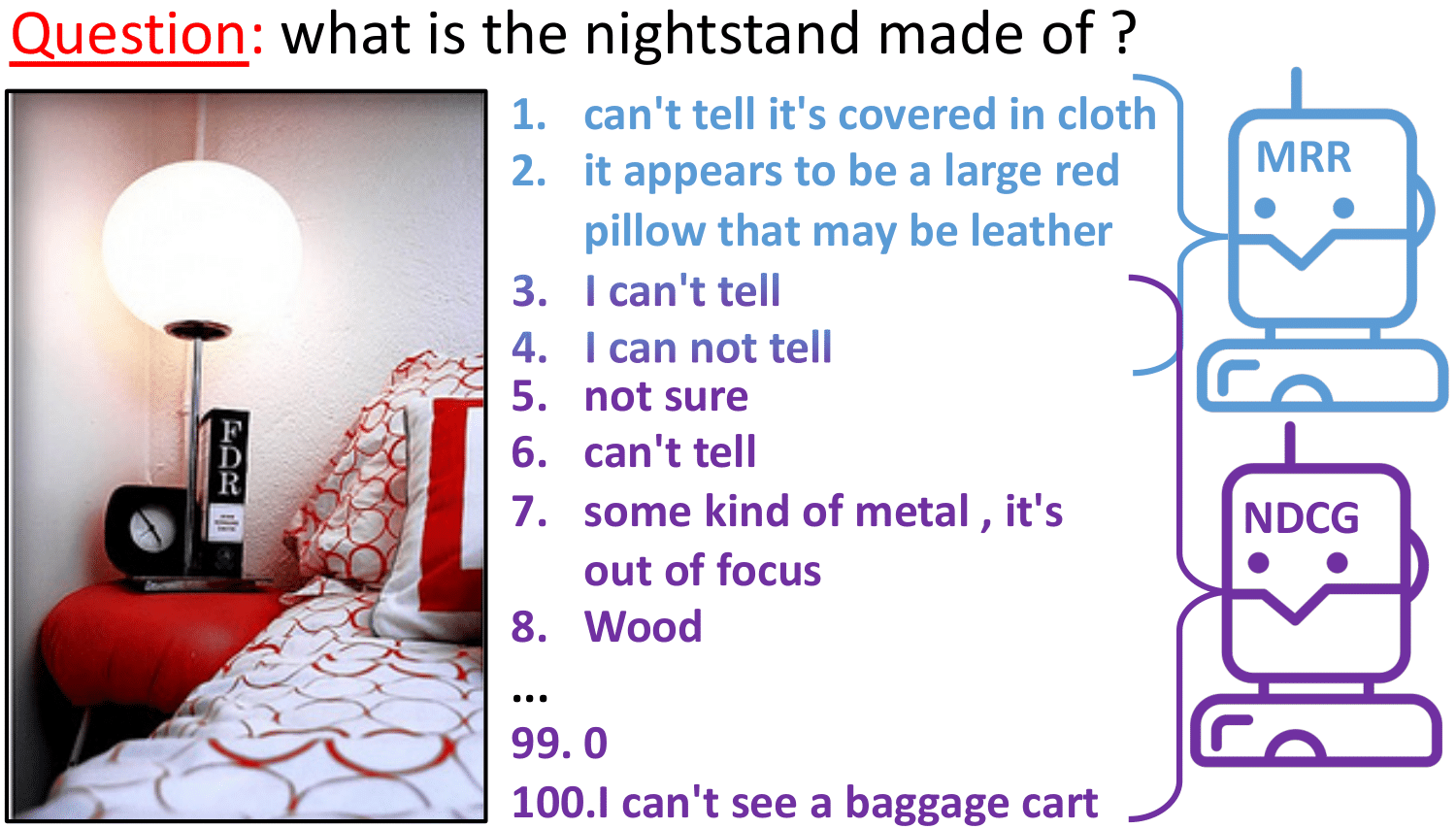

Assessing an AI agent that can converse in human language and understand visual content is challenging. Generation metrics, such as BLEU scores, favor correct syntax over semantics. Hence a discriminative approach is often used, where an agent ranks a set of candidate options. The mean reciprocal rank (MRR) metric evaluates model performance by taking into account the rank of a single human-derived answer. This approach, however, raises a new challenge: the ambiguity and synonymy of answers, for instance, semantically equivalent answers (e.g., "yeah" and "yes"). To address this, the normalized discounted cumulative gain (NDCG) metric has been used to capture the relevance of all the correct answers via dense annotations. However, the NDCG metric favors uncertain answers that are usually applicable, such as "I don't know." Crafting a model that excels on both MRR and NDCG metrics is challenging. Ideally, an AI agent should both give a human-like reply and validate the correctness of any answer. To address this issue, we describe a two-step non-parametric ranking approach that can merge strong MRR and NDCG models. Using our approach, we retain most of the state-of-the-art MRR performance (70.41% vs. 71.24%) and most of the state-of-the-art NDCG performance (72.16% vs. 75.35%). Moreover, our approach won the recent Visual Dialog 2020 challenge. Source code is available at https://github.com/idansc/mrr-ndcg.
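To make the tension between the two metrics concrete, the following is a minimal sketch of how MRR and NDCG could be computed for one ranked candidate list. It is not taken from the authors' repository: the function names, the toy candidates, and the relevance scores are hypothetical, and the choice of truncating NDCG at the number of candidates with non-zero relevance is an assumption made for illustration.

```python
import math


def reciprocal_rank(ranked, ground_truth):
    """1 / rank of the single human-derived answer (ranks start at 1)."""
    return 1.0 / (ranked.index(ground_truth) + 1)


def mean_reciprocal_rank(rankings, ground_truths):
    """Average reciprocal rank over several questions."""
    pairs = list(zip(rankings, ground_truths))
    return sum(reciprocal_rank(r, g) for r, g in pairs) / len(pairs)


def dcg(relevances):
    """Discounted cumulative gain of relevance scores listed in ranked order."""
    return sum(rel / math.log2(pos + 1)
               for pos, rel in enumerate(relevances, start=1))


def ndcg(ranked, relevance, k=None):
    """NDCG of a ranking given dense relevance annotations.

    Assumption: when k is not given, it is set to the number of
    candidates with non-zero relevance.
    """
    if k is None:
        k = sum(1 for v in relevance.values() if v > 0)
    gains = [relevance.get(c, 0.0) for c in ranked[:k]]
    ideal = sorted(relevance.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical toy example: "yes" is the human answer, "yeah" is an
# equally relevant synonym, and "i don't know" is partially relevant.
relevance = {"yes": 1.0, "yeah": 1.0, "i don't know": 0.5, "no": 0.0}
ground_truth = "yes"

# Ranking A places the human answer first but a wrong answer second.
ranking_a = ["yes", "no", "yeah", "i don't know"]
# Ranking B places relevant and generic answers first, human answer third.
ranking_b = ["yeah", "i don't know", "yes", "no"]

rr_a, rr_b = (reciprocal_rank(r, ground_truth) for r in (ranking_a, ranking_b))
ndcg_a, ndcg_b = (ndcg(r, relevance) for r in (ranking_a, ranking_b))
```

In this toy case ranking A scores higher on reciprocal rank while ranking B scores higher on NDCG, which mirrors the trade-off the abstract describes: rewarding the single human answer and rewarding all relevant answers can pull a ranking in different directions.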

NAACL 2021

VisDial
