Derivative Manipulation for General Example Weighting

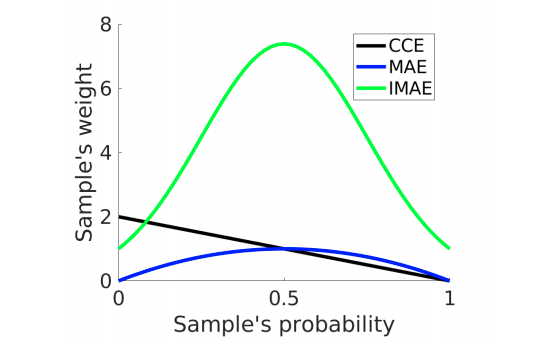

Real-world large-scale datasets usually contain noisy labels and are imbalanced. We therefore propose derivative manipulation (DM), a novel and general example weighting approach for training robust deep models under these adverse conditions. DM has two main merits. First, it connects two common techniques in robust learning: loss function design and example weighting. In gradient-based optimisation, the role of a loss function is to provide the gradient that back-propagation uses to update a model, so the derivative magnitude of an example determines how much impact it has, namely its weight. Second, although designing a loss function can sometimes achieve the same effect, we must then check whether the loss is differentiable and derive its derivative to understand its example weighting scheme, both of which complicate the design. DM is more flexible and straightforward because it modifies the derivative directly. Concretely, DM applies transformation and normalisation to a derivative magnitude function; we term the result an emphasis density function, which expresses a weighting scheme. Diverse weighting schemes can accordingly be derived from common probability density functions, and they include those of well-known robust losses such as MAE and GCE. We conduct extensive experiments demonstrating the effectiveness of DM on both vision and language tasks.
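To make the link between derivative magnitude and example weight concrete, the following is a minimal sketch, not the authors' implementation. It assumes a softmax classifier and measures each example's derivative magnitude as the L1 norm of the loss gradient with respect to the logits, written as a function of `p`, the predicted probability of the labelled class. Under that assumption the closed forms are `2(1 - p)` for cross-entropy (CE), `4p(1 - p)` for MAE, and `2p^q(1 - p)` for GCE with loss `(1 - p^q)/q`. The function names, the value `q=0.7`, and the sum-to-one normalisation over a batch are illustrative choices and are not taken from the abstract.

```python
# Sketch: derivative magnitude as an implicit example weight.
# Assumes softmax outputs; p is the predicted probability of the labelled class.
# All function names here are hypothetical and chosen for illustration.

def ce_magnitude(p):
    """L1 norm of the cross-entropy gradient w.r.t. the logits: 2(1 - p)."""
    return 2.0 * (1.0 - p)

def mae_magnitude(p):
    """L1 norm of the MAE gradient w.r.t. the logits: 4p(1 - p)."""
    return 4.0 * p * (1.0 - p)

def gce_magnitude(p, q=0.7):
    """L1 norm of the GCE gradient w.r.t. the logits: 2 p^q (1 - p).

    q=0.7 is an illustrative default; q=0 gives the CE form.
    """
    return 2.0 * (p ** q) * (1.0 - p)

def normalise(magnitudes):
    """Rescale magnitudes to sum to one (an assumed, simple normalisation)."""
    total = sum(magnitudes)
    if total == 0.0:
        return [0.0 for _ in magnitudes]
    return [m / total for m in magnitudes]

if __name__ == "__main__":
    # Three examples: poorly fitted, uncertain, well fitted.
    probs = [0.05, 0.5, 0.95]
    schemes = [("CE", ce_magnitude), ("MAE", mae_magnitude), ("GCE", gce_magnitude)]
    for name, fn in schemes:
        weights = normalise([fn(p) for p in probs])
        print(name, [round(w, 3) for w in weights])
```

In this sketch CE gives the largest weight to the example with the lowest `p`, which is where mislabelled examples tend to sit, whereas MAE weights examples symmetrically around `p = 0.5` and GCE falls between the two. Manipulating the derivative directly amounts to swapping in a different magnitude function without first writing down a loss whose gradient produces it.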


Results from the Paper

Ranked #30 on Image Classification on Clothing1M (using extra training data)

Datasets

CIFAR-10

CIFAR-100

Clothing1M

MARS