EasyPortrait -- Face Parsing and Portrait Segmentation Dataset

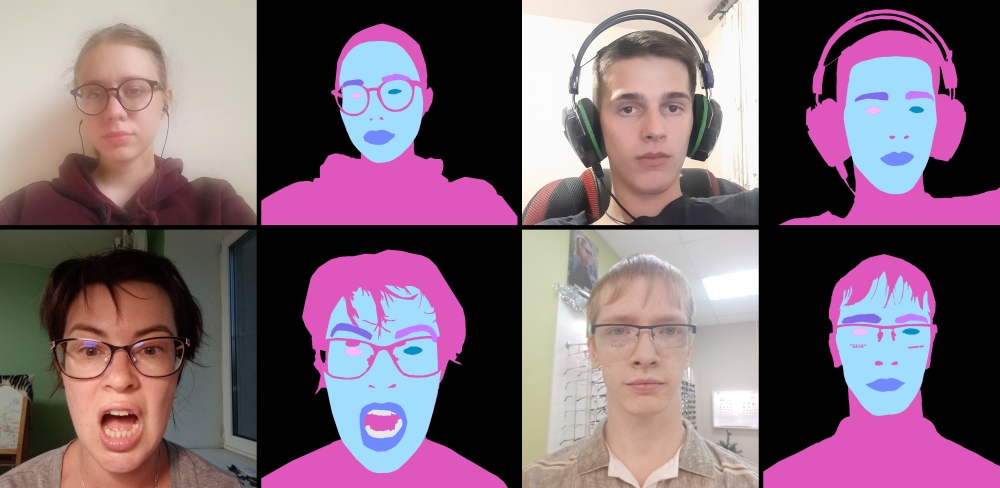

Recently, video conferencing apps have become more functional by offering computer vision-based features such as real-time background removal and face beautification. Existing portrait segmentation and face parsing datasets offer limited variability in head poses, ethnicity, scenes, and the occlusions specific to video conferencing. This motivated us to create a new dataset, EasyPortrait, that addresses both tasks simultaneously. It contains 40,000 primarily indoor photos that replicate video meeting scenarios, covering 13,705 unique users, with fine-grained segmentation masks separated into 9 classes. Unsuitable annotation masks in other datasets led us to revise the annotator guidelines, which enables EasyPortrait to support cases such as teeth whitening and skin smoothing. This paper also proposes a pipeline for data mining and high-quality mask annotation via crowdsourcing. Ablation experiments demonstrate that both the quantity of data and the diversity of head poses in our dataset are important for effective model learning. Cross-dataset evaluation experiments confirm that EasyPortrait offers the best domain generalization ability among portrait segmentation datasets. Moreover, we demonstrate that segmentation models can be trained on EasyPortrait simply, without extra training tricks. The proposed dataset and trained models are publicly available.
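Because one set of fine-grained masks serves both face parsing and portrait segmentation, a single annotation can be reused for both tasks. The sketch below illustrates this under stated assumptions: masks are integer label maps (plain nested lists here), label `0` is background, and labels `1` to `8` are person and facial regions. The helper names `to_portrait_mask` and `class_iou` are hypothetical, and the per-class IoU shown is a standard segmentation metric included for illustration, not necessarily the paper's evaluation protocol.

```python
from typing import List

Mask = List[List[int]]

# Assumption (not taken from the paper): 0 = background, 1..8 = person/face parts.
# Check the dataset's own label map before relying on these values.
BACKGROUND_ID = 0
NUM_CLASSES = 9


def to_portrait_mask(parsing_mask: Mask) -> Mask:
    """Collapse a 9-class face parsing mask into a binary portrait mask
    (1 = person/foreground, 0 = background)."""
    for row in parsing_mask:
        for label in row:
            if not 0 <= label < NUM_CLASSES:
                raise ValueError(f"unexpected label {label}")
    return [[0 if label == BACKGROUND_ID else 1 for label in row]
            for row in parsing_mask]


def class_iou(pred: Mask, target: Mask, class_id: int) -> float:
    """Intersection-over-union for one class.

    Returns NaN when the class is absent from both masks, so callers can
    skip it when averaging over classes.
    """
    if len(pred) != len(target) or any(
            len(p_row) != len(t_row) for p_row, t_row in zip(pred, target)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            in_p, in_t = p == class_id, t == class_id
            inter += in_p and in_t  # bools count as 0/1
            union += in_p or in_t
    return inter / union if union else float("nan")
```

For example, `to_portrait_mask([[0, 1, 1], [0, 2, 8], [0, 0, 1]])` returns `[[0, 1, 1], [0, 1, 1], [0, 0, 1]]`: every non-background part, whatever its class, becomes foreground.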

Datasets

Introduced in the Paper:

EasyPortrait

Used in the Paper:

MS COCO

Helen

CelebAMask-HQ

PPR10K

PP-HumanSeg14K

iBugMask

FaceOcc