EagleEye: Fast Sub-net Evaluation for Efficient Neural Network Pruning

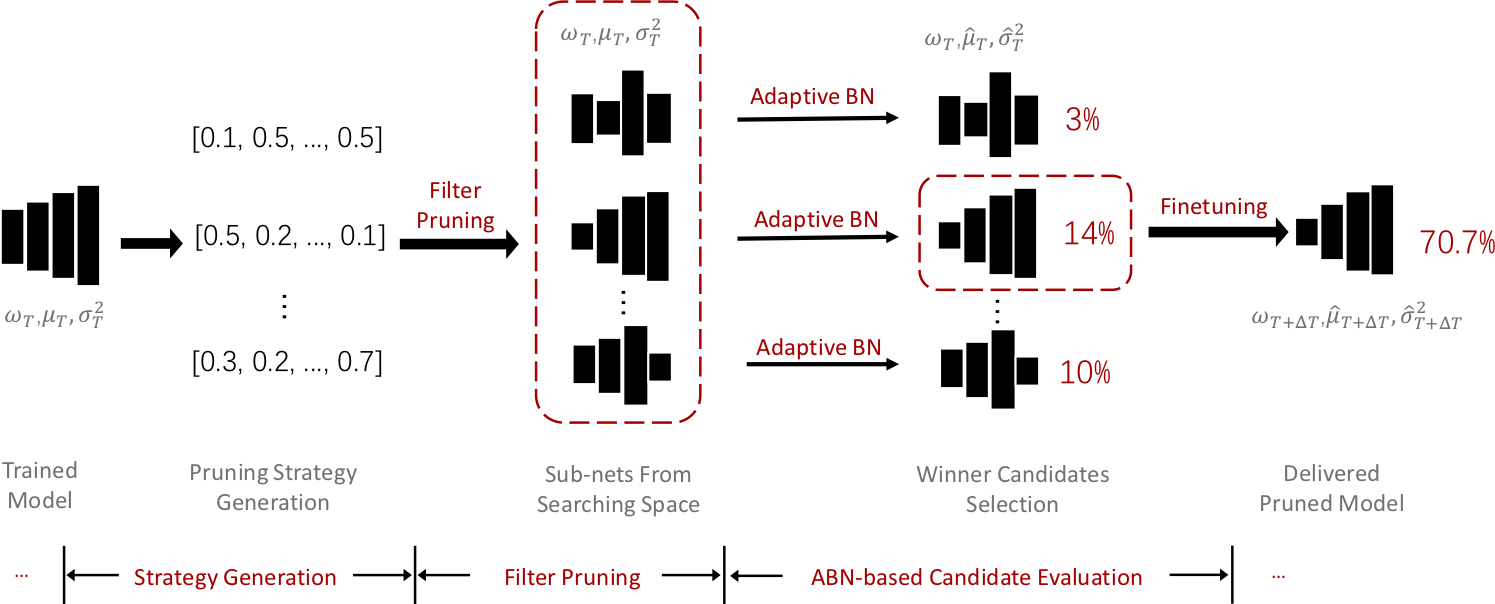

Identifying the computationally redundant parts of a trained Deep Neural Network (DNN) is the key problem that pruning algorithms target. Many algorithms try to predict the performance of pruned sub-nets by introducing various evaluation methods, but these are either inaccurate or too complicated for general application. In this work, we present a pruning method called EagleEye, in which a simple yet efficient evaluation component based on adaptive batch normalization is applied to unveil a strong correlation between different pruned DNN structures and their final settled accuracy. This strong correlation allows us to quickly spot the pruned candidates with the highest potential accuracy without actually fine-tuning them. The module is also general enough to plug into and improve some existing pruning algorithms. EagleEye achieves better pruning performance than all of the studied pruning algorithms in our experiments. Concretely, when pruning MobileNet V1 and ResNet-50, EagleEye outperforms all compared methods by up to 3.8%. Even in the more challenging experiments of pruning the compact MobileNet V1 model, EagleEye achieves the highest accuracy of 70.9% with 50% of the overall operations (FLOPs) pruned. All accuracy results are Top-1 ImageNet classification accuracy. Source code and models are available to the open-source community at https://github.com/anonymous47823493/EagleEye .
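The abstract does not spell out the evaluation procedure, so the following is only a minimal sketch of one plausible reading. It assumes that "adaptive batch normalization" means resetting a pruned candidate's batch-normalization running statistics, re-estimating them on a few calibration batches while all weights stay frozen, and then ranking candidates by the accuracy measured afterwards. The function names, the momentum value, and the toy numbers are hypothetical and are not taken from the EagleEye code base.

```python
from statistics import fmean, pvariance


def recalibrate_bn_stats(batches, momentum=0.1):
    """Re-estimate one BN channel's running mean and variance.

    `batches` is a list of lists holding that channel's activations on
    calibration data. The stale statistics are reset, then updated with an
    exponential moving average; no weights are trained in this step.
    Population variance is used here for simplicity (an assumption).
    """
    running_mean, running_var = 0.0, 1.0  # reset the stale statistics
    for batch in batches:
        running_mean = (1 - momentum) * running_mean + momentum * fmean(batch)
        running_var = (1 - momentum) * running_var + momentum * pvariance(batch)
    return running_mean, running_var


def pick_best_candidate(scores):
    """Return the name of the pruned candidate with the highest score.

    `scores` maps a candidate name to the accuracy measured after its BN
    statistics were recalibrated (hypothetical values in the usage below).
    """
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Toy activations: every calibration batch has mean 5.0 and variance 1.0.
    calibration = [[4.0, 6.0]] * 200
    print(recalibrate_bn_stats(calibration))
    print(pick_best_candidate({"cand_a": 0.12, "cand_b": 0.61, "cand_c": 0.33}))
```

In this reading, only the selected candidate would go on to full fine-tuning, which is where the speed-up over fine-tuning every candidate would come from.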

ECCV 2020

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Network Pruning | ImageNet | ResNet50-1G FLOPs | Accuracy | 74.2 | # 11 |
| Network Pruning | ImageNet | MobileNetV1-50% FLOPs | Accuracy | 70.7 | # 15 |
| Network Pruning | ImageNet | ResNet50-3G FLOPs | Accuracy | 77.1 | # 6 |
| Network Pruning | ImageNet | ResNet50-2G FLOPs | Accuracy | 76.4 | # 7 |

CIFAR-10

ImageNet