EAdam Optimizer: How $\epsilon$ Impact Adam

Many adaptive optimization methods have been proposed for deep learning; among them, Adam is regarded as the default algorithm and is widely used in deep learning frameworks. Recently, many variants of Adam, such as Adabound, RAdam and Adabelief, have been proposed and shown to perform better than Adam. However, these variants mainly change the step size by modifying how the gradient or its square is used. Motivated by the fact that suitable damping is important for the success of powerful second-order optimizers, we discuss the impact of the constant $\epsilon$ in Adam in this paper. Surprisingly, we can obtain better performance than Adam simply by changing the position of $\epsilon$. Based on this finding, we propose a new variant of Adam called EAdam, which requires no extra hyper-parameters and no additional computational cost. We also discuss the relationships and differences between our method and Adam. Finally, we conduct extensive experiments on various popular tasks and models. Experimental results show that our method brings significant improvement over Adam. Our code is available at https://github.com/yuanwei2019/EAdam-optimizer.
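To make "changing the position of $\epsilon$" concrete, the sketch below contrasts a standard Adam step with one possible reading of the EAdam step for a single scalar parameter. The abstract does not spell out the update rule, so the EAdam variant here is an assumption: $\epsilon$ is added to the second-moment accumulator at every step and is dropped from the denominator. The function names `adam_step` and `eadam_step` and the default hyper-parameters are illustrative choices, not taken from the linked repository.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One standard Adam update for a scalar parameter (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    # eps is added outside the square root, in the denominator.
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


def eadam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One EAdam-style update (assumed form): eps moves into the accumulator."""
    m = beta1 * m + (1 - beta1) * grad
    # Assumption: eps is added to v at every step, so it accumulates over time.
    v = beta2 * v + (1 - beta2) * grad * grad + eps
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # No eps in the denominator; v_hat is strictly positive because eps > 0.
    theta = theta - lr * m_hat / math.sqrt(v_hat)
    return theta, m, v


if __name__ == "__main__":
    # Minimize f(x) = x^2 from x = 1.0 with both update rules.
    for name, step in (("Adam", adam_step), ("EAdam (assumed)", eadam_step)):
        x, m, v = 1.0, 0.0, 0.0
        for t in range(1, 1001):
            x, m, v = step(x, 2.0 * x, m, v, t)
        print(name, x)
```

Under this reading, both rules use the same hyper-parameters and the same number of operations per step, which is in line with the abstract's claim of no extra hyper-parameters or computational cost; the only difference is where $\epsilon$ enters the update.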

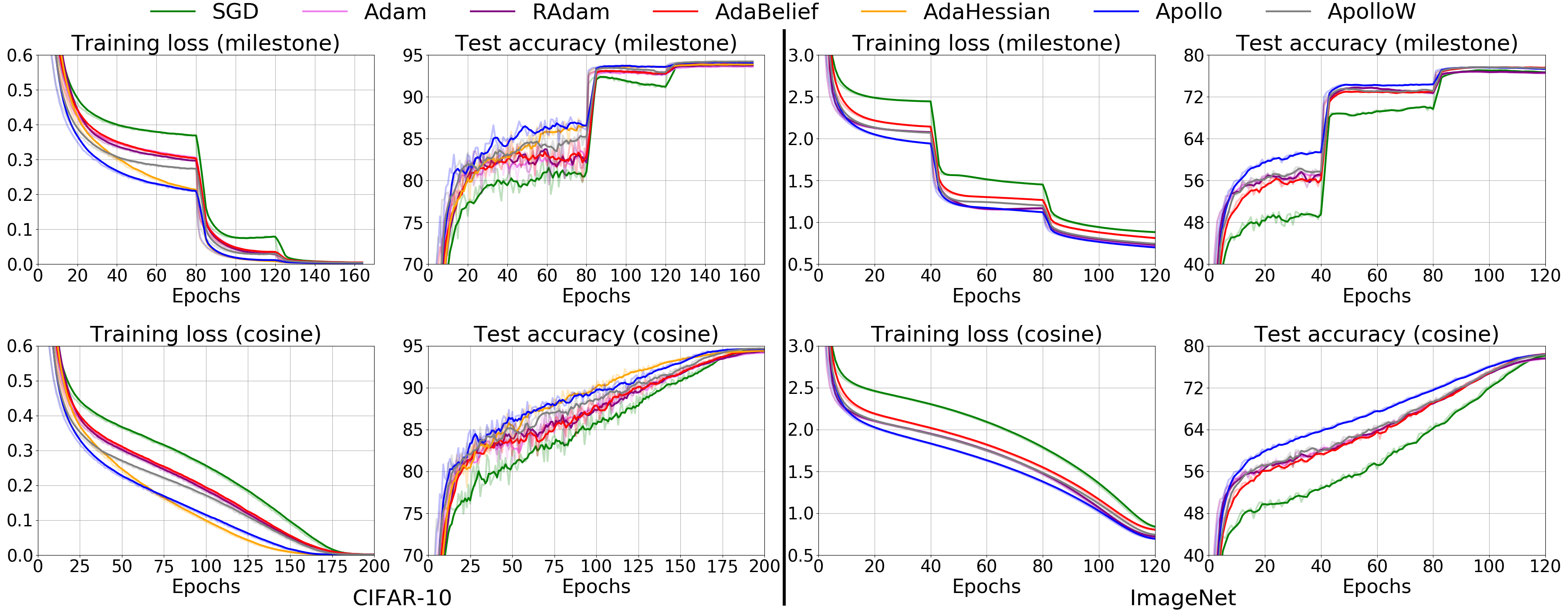

CIFAR-10

CIFAR-100

Penn Treebank
