Distilling Knowledge from Refinement in Multiple Instance Detection Networks

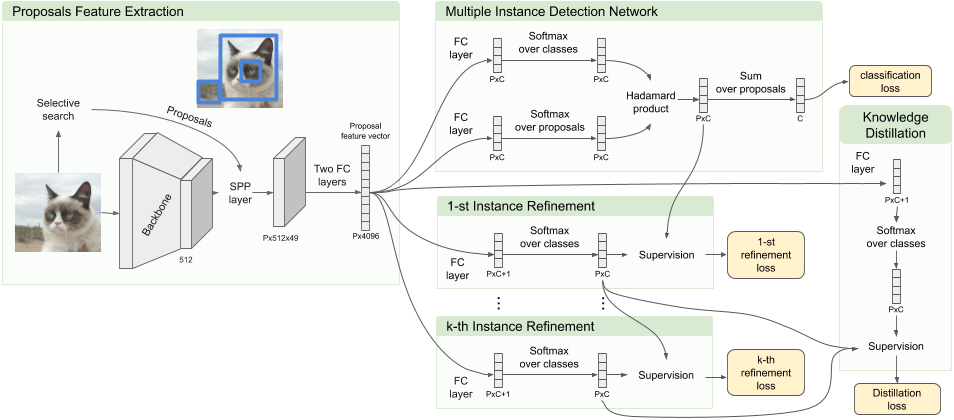

Weakly supervised object detection (WSOD) aims to tackle the object detection problem using only image-level category labels as supervision. A common approach used in WSOD to deal with the lack of localization information is Multiple Instance Learning, and in recent years methods have started adopting Multiple Instance Detection Networks (MIDN), which allow training in an end-to-end fashion. In general, these methods work by selecting the best instance from a pool of candidates and then aggregating other instances based on similarity. In this work, we claim that carefully selecting the aggregation criteria can considerably improve the accuracy of the learned detector. We start by proposing an additional refinement step to an existing approach (OICR), which we call refinement knowledge distillation. Then, we present an adaptive supervision aggregation function that dynamically changes the aggregation criteria that decide whether a box is assigned to one of the ground-truth classes, assigned to background, or ignored when generating the supervision for each refinement module. Experiments on Pascal VOC 2007 demonstrate that our knowledge distillation and smooth aggregation function significantly improve the performance of OICR in the weakly supervised object detection and weakly supervised object localization tasks. These improvements make Boosted-OICR competitive again with other state-of-the-art approaches.
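
The selection-and-aggregation step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the label codes `BACKGROUND` and `IGNORE`, and the fixed thresholds `fg_thresh` and `bg_thresh` are all assumptions chosen for illustration, and similarity is assumed to be intersection over union (IoU). For each class present in the image, the top-scoring proposal is selected, and every other proposal is then labeled by its overlap with that selected box.

```python
import numpy as np

BACKGROUND, IGNORE = -1, -2  # hypothetical label codes, not from the paper


def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of (x1, y1, x2, y2) boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def make_supervision(boxes, scores, image_classes, fg_thresh=0.5, bg_thresh=0.1):
    """Assign each proposal a class, BACKGROUND, or IGNORE.

    boxes: (N, 4) float array; scores: (N, C) per-class proposal scores;
    image_classes: class indices known to be present in the image.
    The threshold values are illustrative assumptions.
    """
    labels = np.full(len(boxes), IGNORE)
    best_iou = np.zeros(len(boxes))
    for c in image_classes:
        top = int(np.argmax(scores[:, c]))        # best instance for class c
        ious = iou_one_to_many(boxes[top], boxes)
        better = ious > best_iou                  # keep the closest selected box
        best_iou[better] = ious[better]
        labels[better & (ious >= fg_thresh)] = c  # aggregate similar boxes
    # Moderate overlap becomes background; very low overlap stays ignored.
    labels[(best_iou >= bg_thresh) & (best_iou < fg_thresh)] = BACKGROUND
    return labels
```

In this sketch the thresholds are constants. An adaptive aggregation function in the sense of the abstract would change these criteria during training; the exact rule is not given here, so it is left out.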
