Discriminative Consensus Mining with A Thousand Groups for More Accurate Co-Salient Object Detection

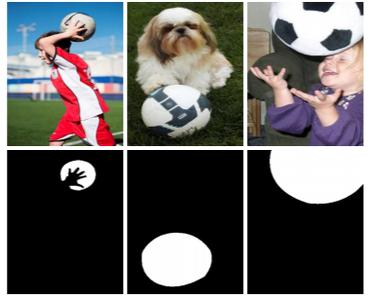

Co-Salient Object Detection (CoSOD) is a rapidly growing task that extends Salient Object Detection (SOD) and Common Object Segmentation (Co-Segmentation). It aims to detect the salient objects that co-occur across a given group of images. Many effective approaches have been proposed on the basis of existing datasets. However, CoSOD still lacks a standard and efficient training set, so recently proposed CoSOD methods choose their training sets inconsistently. First, the drawbacks of existing CoSOD training sets are analyzed comprehensively, and potential improvements are proposed to address them to some extent. In particular, this thesis introduces a new CoSOD training set, the Co-Saliency of ImageNet (CoSINe) dataset. CoSINe contains the largest number of groups among all existing CoSOD datasets, and its images span a wide variety of categories, object sizes, etc. In experiments, models trained on CoSINe achieve significantly better performance with fewer images than models trained on any existing dataset. Second, to make the most of CoSINe, a novel CoSOD approach named Hierarchical Instance-aware COnsensus MinEr (HICOME) is proposed; it efficiently mines consensus features at different feature levels and discriminates objects of different classes in an object-aware contrastive way. Extensive experiments show that HICOME achieves state-of-the-art (SoTA) performance on all existing CoSOD test sets. Several training tricks suited to CoSOD models are also provided. Third, practical applications of the CoSOD technique are presented to demonstrate its effectiveness. Finally, the remaining challenges and potential improvements of CoSOD are discussed to inspire future work. The source code, the dataset, and the online demo will be publicly available at github.com/ZhengPeng7/CoSINe.

ImageNet

MS COCO

DUTS

COD10K

CoSal2015
