DIRV: Dense Interaction Region Voting for End-to-End Human-Object Interaction Detection

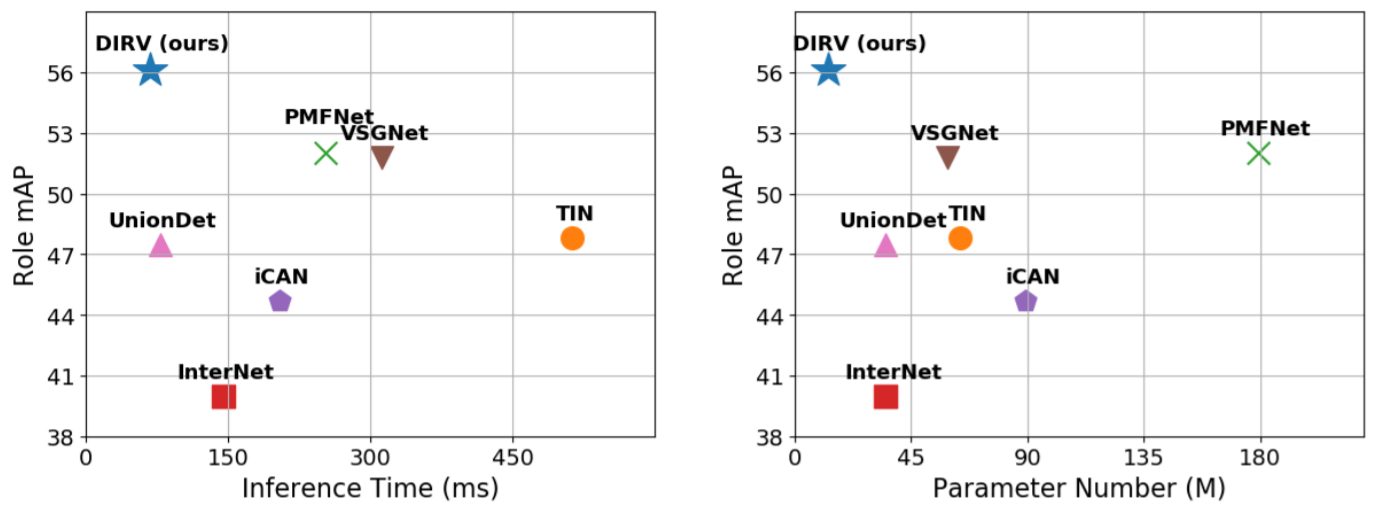

In recent years, human-object interaction (HOI) detection has achieved impressive advances. However, conventional two-stage methods are usually slow at inference. Existing one-stage methods, on the other hand, mainly focus on the union regions of interactions, which introduce unnecessary visual information that disturbs HOI detection. To tackle these problems, we propose DIRV, a novel one-stage HOI detection approach based on a new concept for the HOI problem called the interaction region. Unlike previous methods, our approach concentrates on densely sampled interaction regions across different scales for each human-object pair, so as to capture the subtle visual features that are most essential to the interaction. Moreover, to compensate for the detection flaws of a single interaction region, we introduce a novel voting strategy that makes full use of the overlapping interaction regions in place of conventional Non-Maximal Suppression (NMS). Extensive experiments on two popular benchmarks, V-COCO and HICO-DET, show that our approach outperforms existing state-of-the-art methods by a large margin with the highest inference speed and the lightest network architecture. We achieve 56.1 mAP on V-COCO without additional input. Our code is publicly available at: https://github.com/MVIG-SJTU/DIRV
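To make the contrast with NMS concrete, the following is a minimal sketch of voting over overlapping regions: instead of keeping only the top-scoring region and discarding the rest, every region assigned to a human-object pair contributes to that pair's final verb scores. The function name `vote_interactions`, the input layout, and the choice of a confidence-weighted mean are all illustrative assumptions; the abstract does not specify DIRV's actual voting formula, so this should not be read as the authors' implementation.

```python
from collections import defaultdict


def vote_interactions(region_predictions):
    """Aggregate per-region verb scores for each human-object pair.

    Hypothetical input layout (an assumption, not from the paper):
    an iterable of (pair_id, weight, verb_scores) tuples, where
    pair_id identifies a human-object pair, weight is a non-negative
    vote weight for the region, and verb_scores maps verb -> score.

    Returns {pair_id: {verb: weighted mean score}}. A region that omits
    a verb contributes zero for that verb but still counts in the weight.
    """
    weighted_sums = defaultdict(lambda: defaultdict(float))
    weight_totals = defaultdict(float)

    # Every overlapping region votes; none are suppressed as in NMS.
    for pair_id, weight, verb_scores in region_predictions:
        weight_totals[pair_id] += weight
        for verb, score in verb_scores.items():
            weighted_sums[pair_id][verb] += weight * score

    # Normalize by total vote weight, skipping pairs with no weight.
    return {
        pair_id: {
            verb: total / weight_totals[pair_id]
            for verb, total in verbs.items()
        }
        for pair_id, verbs in weighted_sums.items()
        if weight_totals[pair_id] > 0
    }


if __name__ == "__main__":
    # Two overlapping regions vote on the same (human, object) pair.
    predictions = [
        (("h0", "o0"), 1.0, {"hold": 0.8}),
        (("h0", "o0"), 3.0, {"hold": 0.4}),
    ]
    print(vote_interactions(predictions))
```

In this sketch a single poorly placed region cannot decide the outcome on its own, which is the intuition the abstract gives for replacing NMS with voting.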

MS COCO

HICO-DET

V-COCO