Direction Concentration Learning: Enhancing Congruency in Machine Learning

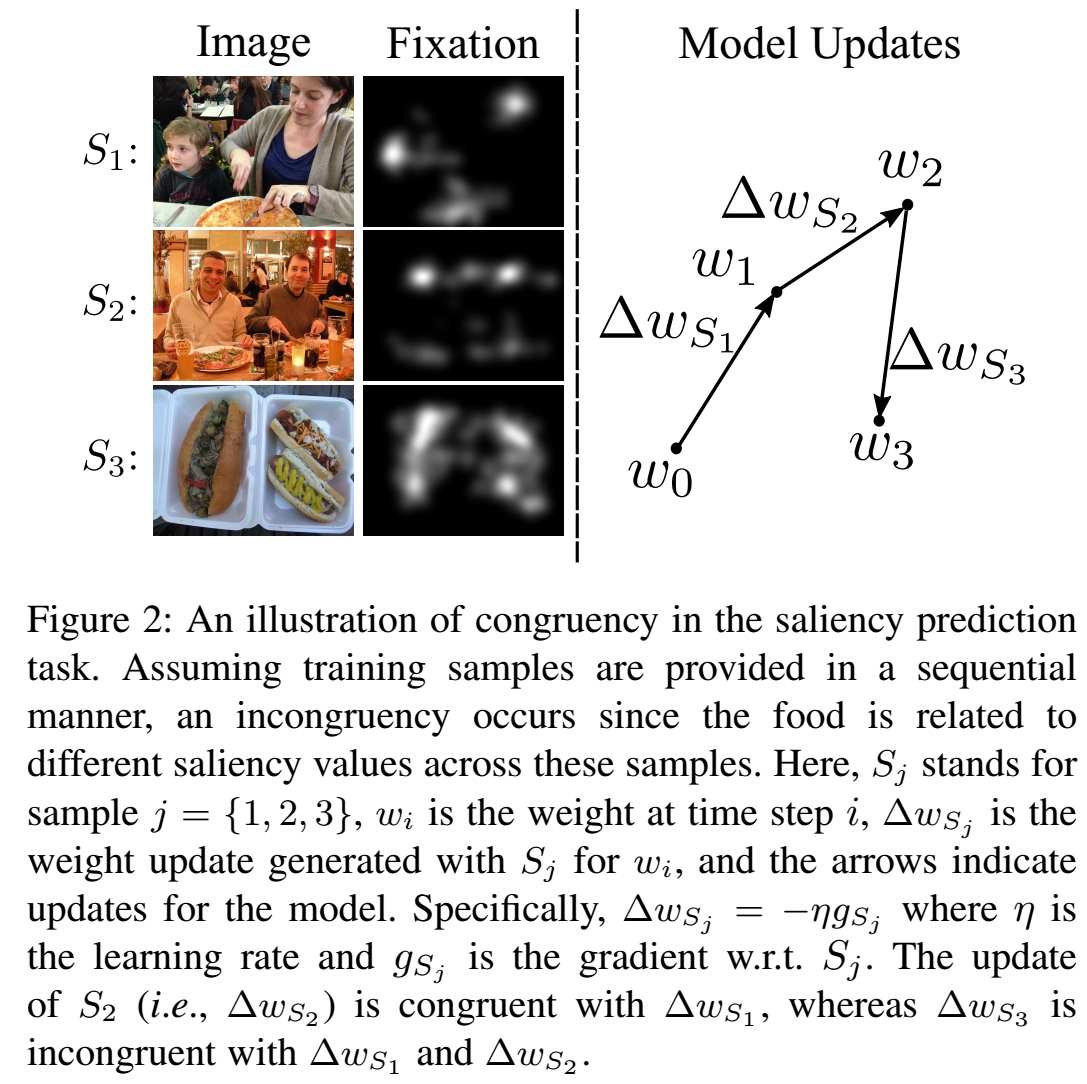

One of the well-known challenges in computer vision is the visual diversity of images, which can result in agreement or disagreement between the learned knowledge and the visual content of the current observation. In this work, we first define such agreement in a concept learning process as congruency. Formally, given a particular task and a sufficiently large dataset, the congruency issue arises in the learning process when the task-specific semantics in the training data vary widely. We propose a Direction Concentration Learning (DCL) method to improve congruency in the learning process; enhancing congruency makes the convergence path less circuitous. The experimental results show that the proposed DCL method generalizes to state-of-the-art models and optimizers, and improves performance on saliency prediction, continual learning, and classification tasks. Moreover, it helps mitigate the catastrophic forgetting problem in continual learning. The code is publicly available at https://github.com/luoyan407/congruency.
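The abstract does not state how congruency is measured. As an illustrative sketch only, the snippet below assumes that agreement between the current observation and the learned knowledge can be approximated by the cosine similarity between the current gradient and the running sum of earlier gradients. That choice of metric, the function names `cosine_similarity` and `congruency_trace`, and the toy gradients are all assumptions made for illustration; none of them is taken from the text above or from the linked repository.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        # Convention chosen for this sketch: a zero vector has no direction.
        return 0.0
    return dot / (norm_u * norm_v)


def congruency_trace(gradients):
    """Compare each gradient with the sum of all gradients seen before it.

    This is an assumed proxy for congruency, not the paper's definition.
    """
    accumulated = [0.0] * len(gradients[0])
    trace = []
    for g in gradients:
        trace.append(cosine_similarity(g, accumulated))
        accumulated = [a + b for a, b in zip(accumulated, g)]
    return trace


# Toy 2-D gradients (made up): two aligned steps, one orthogonal, one opposing.
steps = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
trace = congruency_trace(steps)
print(trace)
```

Under this assumed proxy, values near 1 indicate an update that agrees with the direction accumulated so far, while values near 0 or below indicate an update that pulls the path sideways or backwards, which is the kind of circuitous convergence the abstract describes.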

Results from the Paper

Ranked #8 on Image Classification on Tiny ImageNet Classification (using extra training data)

CIFAR-10

ImageNet

CIFAR-100

MNIST

Tiny ImageNet

SALICON