DeLighT: Deep and Light-weight Transformer

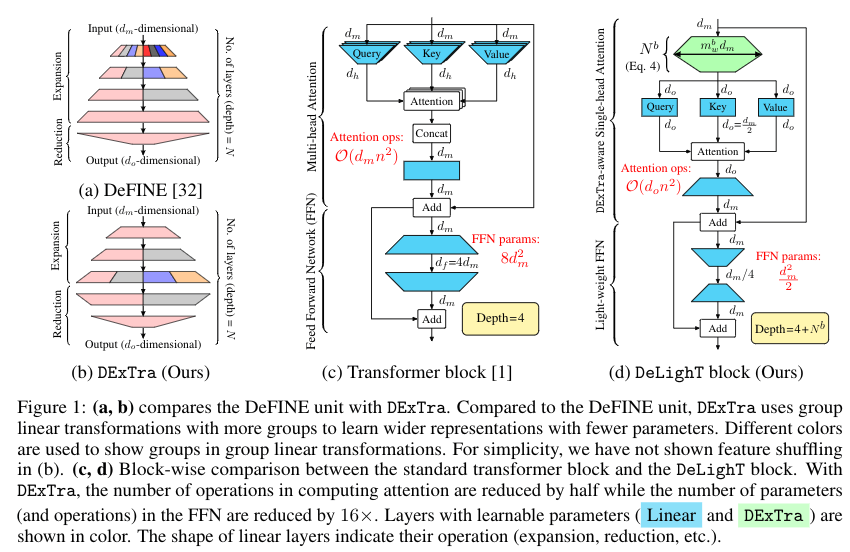

We introduce a deep and light-weight transformer, DeLighT, that delivers similar or better performance than standard transformer-based models with significantly fewer parameters. DeLighT more efficiently allocates parameters both (1) within each Transformer block using the DeLighT transformation, a deep and light-weight transformation, and (2) across blocks using block-wise scaling, which allows for shallower and narrower DeLighT blocks near the input and wider and deeper DeLighT blocks near the output. Overall, DeLighT networks are 2.5 to 4 times deeper than standard transformer models and yet have fewer parameters and operations. Experiments on benchmark machine translation and language modeling tasks show that DeLighT matches or improves the performance of baseline Transformers with 2 to 3 times fewer parameters on average. Our source code is available at: https://github.com/sacmehta/delight
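Block-wise scaling, as described in the abstract, can be illustrated with a small sketch. The abstract does not state the exact scaling rule, so the code below assumes a simple linear interpolation of per-block depth from the input side to the output side. The function name `blockwise_depths` and the parameters `n_min` and `n_max` are hypothetical and are not taken from the DeLighT source code.

```python
def blockwise_depths(num_blocks: int, n_min: int, n_max: int) -> list[int]:
    """Return one depth per block, growing from n_min (input) to n_max (output).

    Assumption: depth is interpolated linearly across blocks. This is an
    illustrative rule, not necessarily the one used by the authors.
    """
    if num_blocks < 1:
        raise ValueError("num_blocks must be at least 1")
    if n_min > n_max:
        raise ValueError("n_min must not exceed n_max")
    if num_blocks == 1:
        return [n_min]
    step = (n_max - n_min) / (num_blocks - 1)
    # Block 0 is nearest the input; block num_blocks - 1 is nearest the output.
    return [round(n_min + step * b) for b in range(num_blocks)]


if __name__ == "__main__":
    # Five blocks whose depth grows from 4 layers to 8 layers.
    print(blockwise_depths(5, 4, 8))
```

Under this assumption, blocks near the input stay shallow and cheap while blocks near the output receive more layers, which matches the allocation pattern the abstract describes. The same interpolation could be applied to a width multiplier to make later blocks wider as well.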

Venue: ICLR 2021

Datasets

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Machine Translation | IWSLT2014 German-English | DeLighT | BLEU score | 35.3 | #22 |
| Language Modelling | WikiText-103 | DeLighT | Test perplexity | 24.14 | #55 |
| Language Modelling | WikiText-103 | DeLighT | Number of params | 99M | #42 |
| Machine Translation | WMT2016 English-French | DeLighT | BLEU score | 40.5 | #1 |
| Machine Translation | WMT2016 English-German | DeLighT | BLEU score | 28.0 | #4 |
| Machine Translation | WMT2016 English-Romanian | DeLighT | BLEU score | 34.7 | #1 |

WikiText-2

WikiText-103

WMT 2016

WMT 2016 News