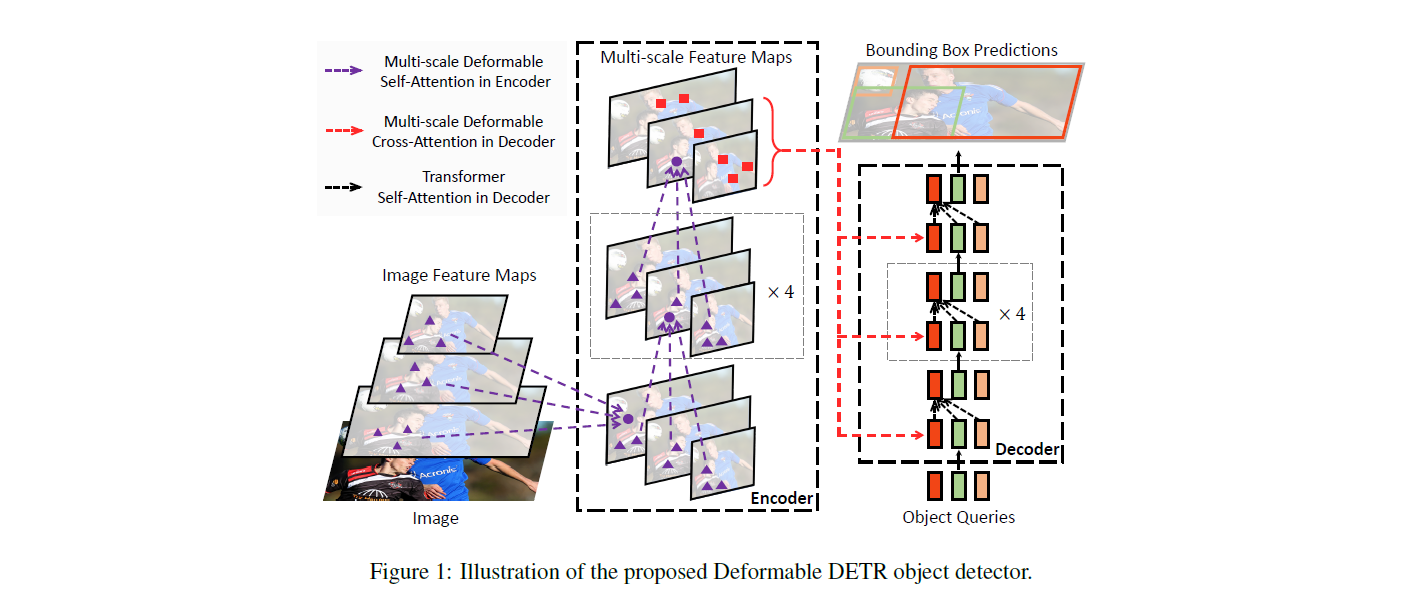

Deformable DETR: Deformable Transformers for End-to-End Object Detection

DETR has been recently proposed to eliminate the need for many hand-designed components in object detection while demonstrating good performance. However, it suffers from slow convergence and limited feature spatial resolution, due to the limitation of Transformer attention modules in processing image feature maps. To mitigate these issues, we proposed Deformable DETR, whose attention modules only attend to a small set of key sampling points around a reference. Deformable DETR can achieve better performance than DETR (especially on small objects) with 10 times less training epochs. Extensive experiments on the COCO benchmark demonstrate the effectiveness of our approach. Code is released at https://github.com/fundamentalvision/Deformable-DETR.

PDF Abstract ICLR 2021 PDF ICLR 2021 Abstract

MS COCO

MS COCO

COCO-O

COCO-O

SARDet-100K

SARDet-100K