deepsing: Generating Sentiment-aware Visual Stories using Cross-modal Music Translation

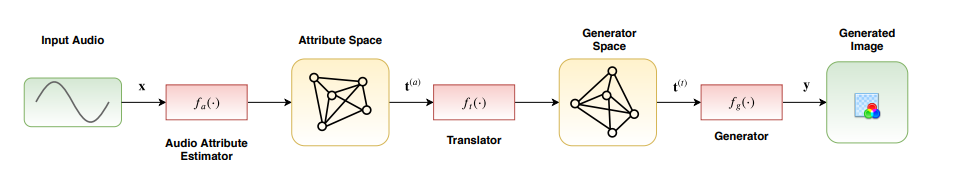

In this paper we propose a deep learning method for performing attributed-based music-to-image translation. The proposed method is applied for synthesizing visual stories according to the sentiment expressed by songs. The generated images aim to induce the same feelings to the viewers, as the original song does, reinforcing the primary aim of music, i.e., communicating feelings. The process of music-to-image translation poses unique challenges, mainly due to the unstable mapping between the different modalities involved in this process. In this paper, we employ a trainable cross-modal translation method to overcome this limitation, leading to the first, to the best of our knowledge, deep learning method for generating sentiment-aware visual stories. Various aspects of the proposed method are extensively evaluated and discussed using different songs.

PDF Abstract