Curriculum-style Local-to-global Adaptation for Cross-domain Remote Sensing Image Segmentation

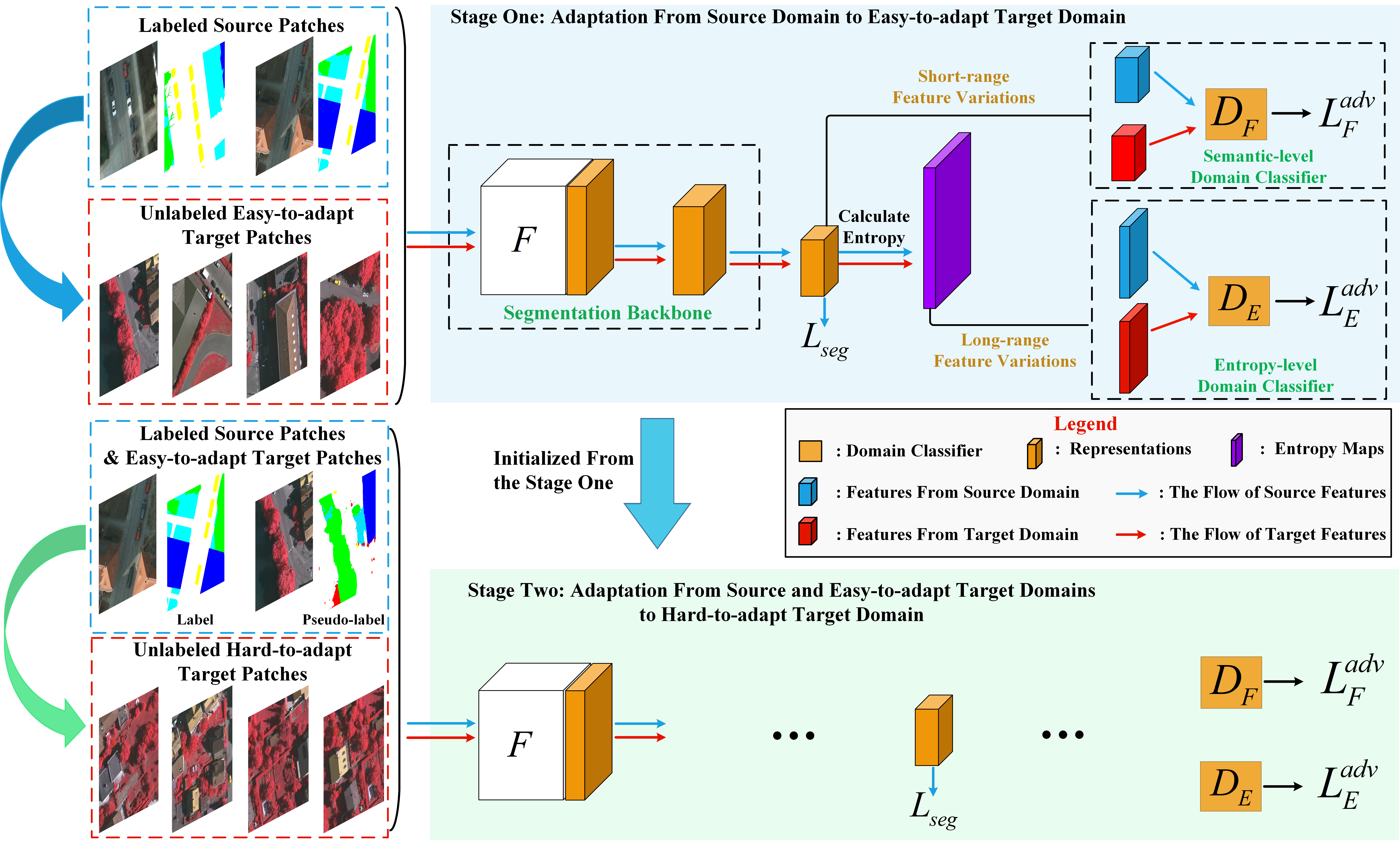

Although domain adaptation has been extensively studied for natural-image segmentation, cross-domain segmentation of very high resolution (VHR) remote sensing images (RSIs) remains underexplored. Cross-domain segmentation of VHR RSIs faces two critical challenges: 1) large-area land covers with many diverse object categories cause severe local patch-level deviations in data distribution, so different local patches are adapted with different degrees of difficulty; 2) different VHR sensor types or dynamically changing imaging modes produce large differences in data distribution even for the same geographical location, resulting in differing global feature-level domain gaps. To address these challenges, we propose a curriculum-style local-to-global cross-domain adaptation framework for the segmentation of VHR RSIs. The curriculum-style adaptation proceeds from easy to hard according to the adaptation difficulty of each target-domain patch, measured by an entropy-based score, and thereby aligns the local patches within a domain image. The local-to-global adaptation aligns features from locally semantic to globally structural discrepancies, and consists of a semantic-level domain classifier and an entropy-level domain classifier that reduce these cross-domain feature discrepancies. Extensive experiments in various cross-domain scenarios, including geographic location variations and imaging mode variations, demonstrate that the proposed method significantly improves the domain adaptability of segmentation networks for VHR RSIs. Our code is available at: https://github.com/BOBrown/CCDA_LGFA.
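The easy-to-hard ordering described in the abstract can be illustrated with a small sketch. The abstract does not give the exact scoring formula, so the version below assumes the score is the mean per-pixel Shannon entropy of a patch's softmax output; the function names `patch_entropy_score` and `easy_to_hard_order` and the toy probability maps are hypothetical and are not taken from the released code.

```python
import numpy as np


def patch_entropy_score(probs, eps=1e-12):
    """Mean per-pixel Shannon entropy of a softmax map of shape (C, H, W).

    Assumption: a higher mean entropy marks a patch that is harder to adapt.
    """
    pixel_entropy = -np.sum(probs * np.log(probs + eps), axis=0)
    return float(pixel_entropy.mean())


def easy_to_hard_order(patch_probs):
    """Return patch indices sorted from lowest to highest entropy score."""
    scores = np.array([patch_entropy_score(p) for p in patch_probs])
    return np.argsort(scores, kind="stable"), scores


# Toy target-domain patches with 3 classes and 4x4 pixels each.
confident = np.zeros((3, 4, 4))
confident[0] = 1.0                       # one-hot prediction, entropy near 0
uncertain = np.full((3, 4, 4), 1.0 / 3)  # uniform prediction, entropy ln(3)
mixed = np.stack([
    np.full((4, 4), 0.8),
    np.full((4, 4), 0.1),
    np.full((4, 4), 0.1),
])

order, scores = easy_to_hard_order([uncertain, confident, mixed])
print(order.tolist())  # indices of patches, easiest first
```

In a curriculum of this kind, the low-entropy patches would be fed to the adaptation stage first and the high-entropy patches later; how the schedule is actually staged in the paper is not stated in the abstract.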


ISPRS Potsdam


ISPRS Vaihingen
