CRS-Diff: Controllable Generative Remote Sensing Foundation Model

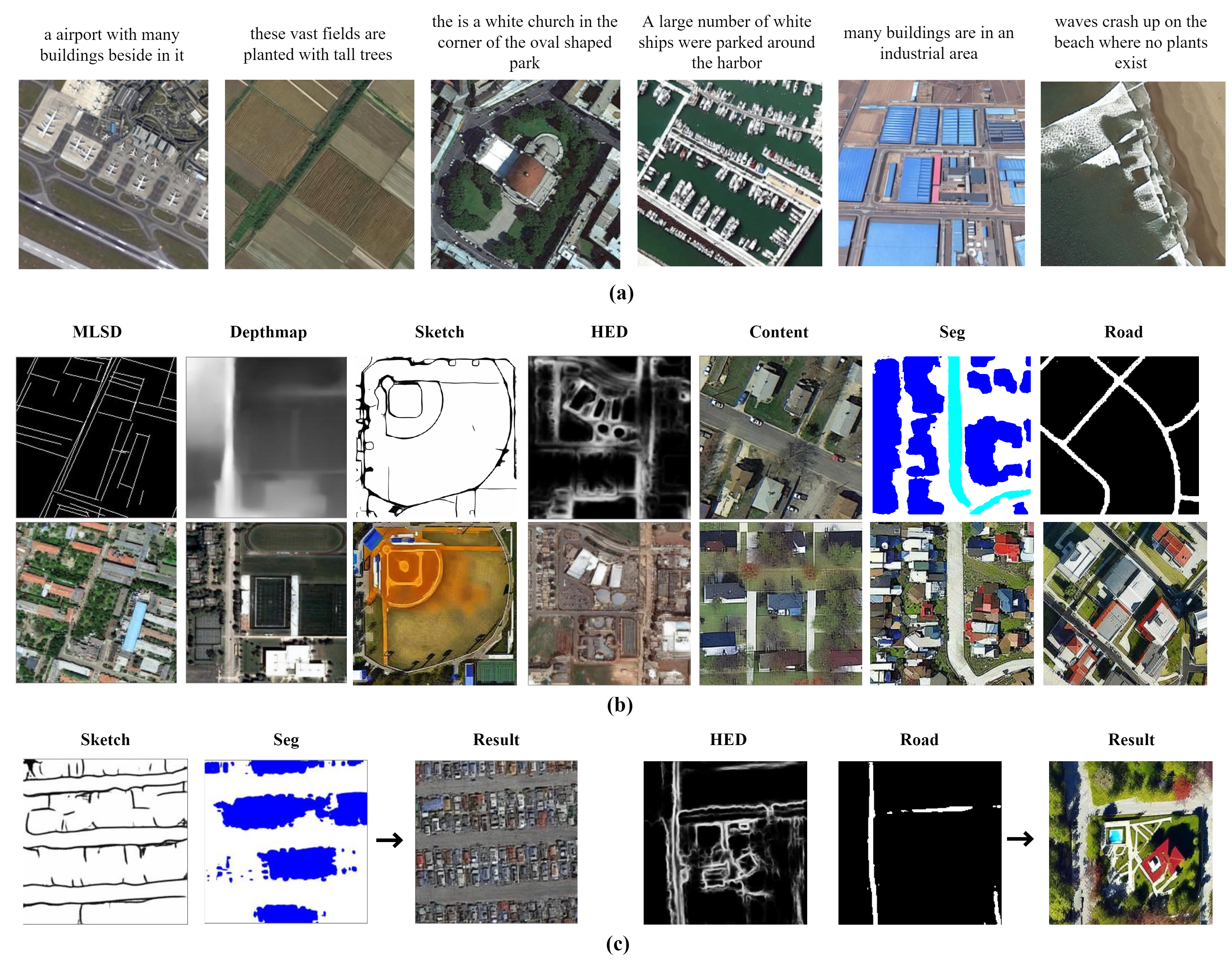

The emergence of diffusion models has revolutionized the field of image generation, providing new methods for creating high-quality, high-resolution images across various applications. However, the potential of these models for generating domain-specific images, particularly remote sensing (RS) images, remains largely untapped. RS images, which are notable for their high resolution, extensive coverage, and rich information content, bring new challenges that general diffusion models may not adequately address. This paper proposes CRS-Diff, a pioneering diffusion modeling framework specifically tailored for generating remote sensing imagery. It leverages the inherent advantages of diffusion models while integrating advanced control mechanisms to ensure that the imagery is not only visually clear but also enriched with geographic and temporal information. The model integrates global and local control inputs, enabling precise combinations of generation conditions to refine the generation process. A comprehensive evaluation shows that CRS-Diff generates RS imagery of higher quality and diversity than previous methods under both single-condition and multi-condition settings.

fMoW

RSICD

Million-AID
