CoSSL: Co-Learning of Representation and Classifier for Imbalanced Semi-Supervised Learning

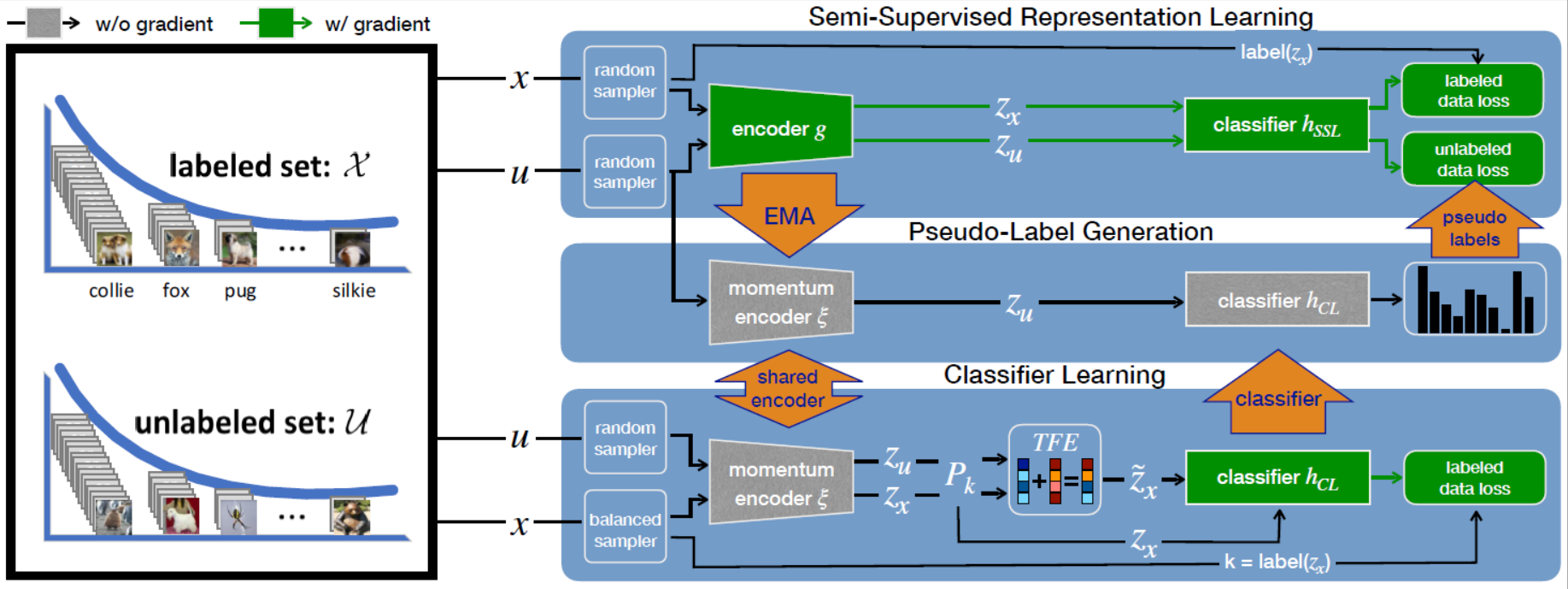

In this paper, we propose a novel co-learning framework (CoSSL) that decouples representation learning from classifier learning for imbalanced semi-supervised learning (SSL). To handle the data imbalance, we devise Tail-class Feature Enhancement (TFE) for classifier learning. Furthermore, the current evaluation protocol for imbalanced SSL focuses only on balanced test sets, which limits its practicality in real-world scenarios. We therefore conduct a comprehensive evaluation under various shifted test distributions. In experiments, we show that our approach outperforms other methods over a large range of shifted distributions, achieving state-of-the-art performance on the benchmark datasets CIFAR-10, CIFAR-100, ImageNet, and Food-101. Our code will be made publicly available.
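To make the idea of a "shifted test distribution" concrete, the following is a minimal sketch of one way to resample a balanced test set into a long-tailed or reversed long-tailed one. It is not the paper's evaluation code. The exponential class-count profile, the function names `long_tailed_counts` and `resample_test_indices`, and the parameters `n_max`, `imbalance_ratio`, and `reverse` are all assumptions made for illustration.

```python
import numpy as np


def long_tailed_counts(n_max, num_classes, imbalance_ratio, reverse=False):
    """Per-class sample counts that decay exponentially from n_max down to
    n_max / imbalance_ratio (assumed profile; requires num_classes >= 2)."""
    k = np.arange(num_classes)
    counts = n_max * float(imbalance_ratio) ** (-k / (num_classes - 1))
    counts = np.maximum(np.round(counts).astype(int), 1)
    # reverse=True makes the last class the most frequent one
    return counts[::-1].copy() if reverse else counts


def resample_test_indices(labels, counts, seed=0):
    """Pick, without replacement, up to counts[c] test indices for each class c."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    picked = []
    for cls, n in enumerate(counts):
        cls_idx = np.flatnonzero(labels == cls)
        n = min(int(n), cls_idx.size)  # never request more than is available
        picked.append(rng.choice(cls_idx, size=n, replace=False))
    return np.concatenate(picked)


# Hypothetical balanced test set: 10 classes with 1000 samples each.
test_labels = np.repeat(np.arange(10), 1000)
shifted = long_tailed_counts(n_max=1000, num_classes=10, imbalance_ratio=100)
subset = resample_test_indices(test_labels, shifted)
```

Evaluating a trained model on `subset` for several values of `imbalance_ratio`, with and without `reverse=True`, would give accuracy across a range of test distributions rather than on the balanced set alone.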

CVPR 2022

ImageNet


Food-101
