Context-Aware Visual Compatibility Prediction

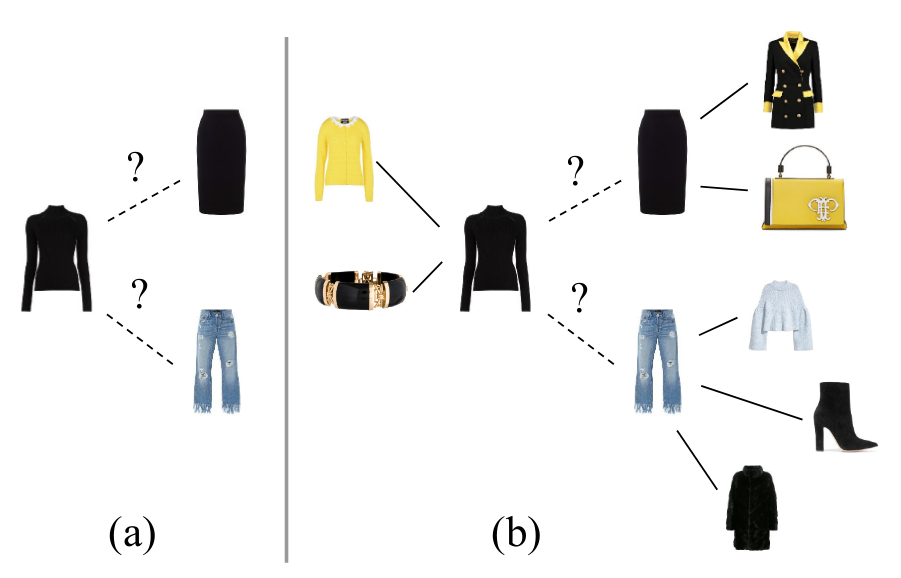

How do we determine whether two or more clothing items are compatible or visually appealing? Part of the answer lies in an understanding of visual aesthetics, which is biased by personal preferences shaped by social attitudes, time, and place. In this work we propose a method that predicts compatibility between two items based on their visual features as well as their context. We define context as the products that are known to be compatible with each of these items. Our model contrasts with other metric learning approaches, which rely on pairwise comparisons between item features alone. We address the compatibility prediction problem using a graph neural network that learns to generate product embeddings conditioned on their context. We present results for two prediction tasks (fill in the blank and outfit compatibility) tested on two fashion datasets, Polyvore and Fashion-Gen, and on a subset of the Amazon dataset. We achieve state-of-the-art results when using context information and show how test performance improves as more context is used.
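To make the idea of context-conditioned embeddings concrete, here is a minimal NumPy sketch. It is not the paper's model: it assumes a single, untrained round of mean aggregation over known-compatible neighbours in place of the learned graph neural network, and a rescaled cosine similarity in place of a learned compatibility predictor. The function names `context_embeddings`, `compatibility`, and `fill_in_the_blank` are hypothetical and chosen only for illustration.

```python
import numpy as np


def context_embeddings(features, adjacency):
    """Concatenate each item's features with the mean of its neighbours' features.

    features:  (n_items, dim) array of visual features.
    adjacency: (n_items, n_items) 0/1 array; 1 marks a known-compatible pair.
    """
    feats = np.asarray(features, dtype=float)
    adj = np.asarray(adjacency, dtype=float)
    degree = adj.sum(axis=1, keepdims=True)
    # Mean of neighbour features; items without context keep a zero context vector.
    context = np.divide(
        adj @ feats, degree, out=np.zeros_like(feats), where=degree > 0
    )
    return np.concatenate([feats, context], axis=1)


def compatibility(emb, i, j):
    """Score in [0, 1] from cosine similarity (stand-in for a learned predictor)."""
    a, b = emb[i], emb[j]
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12)
    return 0.5 * (cos + 1.0)


def fill_in_the_blank(emb, outfit, candidates):
    """Return the candidate with the highest mean compatibility with the outfit."""
    scores = [
        np.mean([compatibility(emb, c, o) for o in outfit]) for c in candidates
    ]
    return candidates[int(np.argmax(scores))]
```

Under these assumptions, adding more known-compatible neighbours to `adjacency` changes only the context half of each embedding, which is the part of the representation that pairwise feature comparison alone would not see.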

CVPR 2019

Polyvore

Fashion-Gen