Bottom-Up Temporal Action Localization with Mutual Regularization

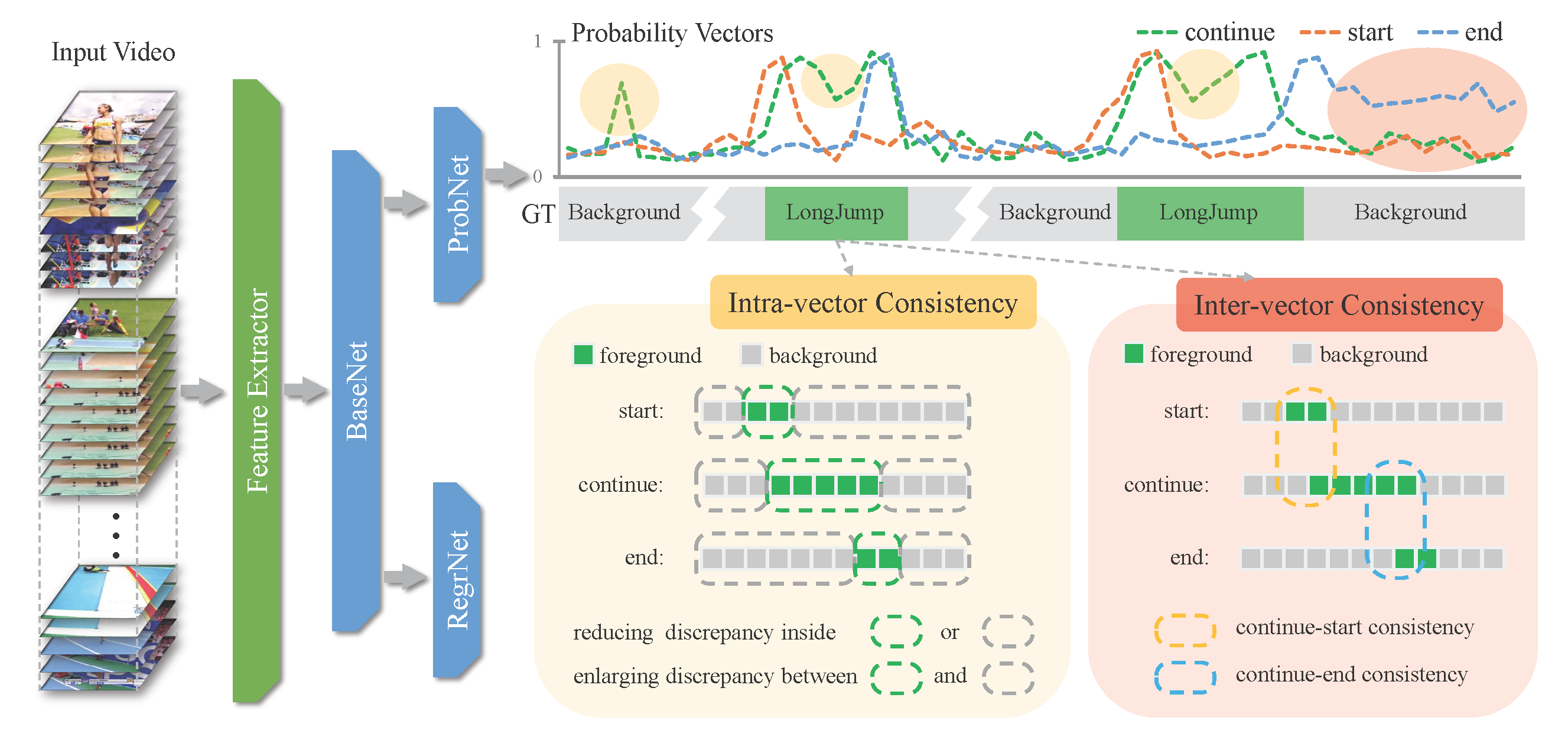

Recently, temporal action localization (TAL), i.e., finding specific action segments in untrimmed videos, has attracted increasing attention from the computer vision community. State-of-the-art solutions for TAL involve evaluating the frame-level probabilities of three action-indicating phases, i.e., starting, continuing, and ending, and then post-processing these predictions for the final localization. This paper delves deep into this mechanism and argues that existing methods, by modeling these phases as individual classification tasks, ignore the potential temporal constraints between them. This can lead to incorrect and/or inconsistent predictions when some frames of the video input lack sufficient discriminative information. To alleviate this problem, we introduce two regularization terms to mutually regularize the learning procedure: the Intra-phase Consistency (IntraC) regularization is proposed to verify the predictions inside each phase, and the Inter-phase Consistency (InterC) regularization is proposed to keep the predictions consistent between these phases. By jointly optimizing these two terms, the entire framework becomes aware of these potential constraints during an end-to-end optimization process. Experiments are performed on two popular TAL datasets, THUMOS14 and ActivityNet1.3. Our approach clearly outperforms the baseline both quantitatively and qualitatively. The proposed regularization also generalizes to other TAL methods (e.g., TSA-Net and PGCN). Code: https://github.com/PeisenZhao/Bottom-Up-TAL-with-MR
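The bottom-up pipeline summarized above (frame-level phase probabilities followed by post-processing) can be illustrated with a minimal sketch. This is not the authors' implementation and does not include the IntraC or InterC terms; it only shows one common way to turn starting and ending probabilities into candidate segments. The function names `local_peaks` and `generate_proposals`, the 0.5 threshold, the product-based score, and the toy probability values are all assumptions made for illustration.

```python
def local_peaks(probs, threshold=0.5):
    """Return frame indices that are local maxima with probability >= threshold."""
    peaks = []
    for t, p in enumerate(probs):
        left = probs[t - 1] if t > 0 else float("-inf")
        right = probs[t + 1] if t + 1 < len(probs) else float("-inf")
        if p >= threshold and p >= left and p >= right:
            peaks.append(t)
    return peaks


def generate_proposals(start_probs, end_probs, threshold=0.5, max_len=None):
    """Pair every start peak with every later end peak.

    Each proposal is (start_frame, end_frame, score), where the score is the
    product of the two boundary probabilities (an assumed scoring rule).
    Proposals are returned sorted by descending score.
    """
    starts = local_peaks(start_probs, threshold)
    ends = local_peaks(end_probs, threshold)
    proposals = []
    for s in starts:
        for e in ends:
            if e <= s:
                continue  # an action must end after it starts
            if max_len is not None and e - s > max_len:
                continue  # optionally discard overly long segments
            proposals.append((s, e, start_probs[s] * end_probs[e]))
    proposals.sort(key=lambda item: item[2], reverse=True)
    return proposals


# Toy frame-level probabilities (hypothetical values, not from the paper).
start_probs = [0.1, 0.9, 0.2, 0.1, 0.1, 0.6, 0.1, 0.1]
end_probs = [0.0, 0.1, 0.1, 0.8, 0.1, 0.1, 0.1, 0.7]

proposals = generate_proposals(start_probs, end_probs)
for s, e, score in proposals:
    print(f"segment [{s}, {e}] score={score:.2f}")
```

Because each boundary is scored independently in a scheme like this, a noisy peak in either curve directly produces a spurious segment, which is the kind of inconsistency the proposed regularization is meant to reduce.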

ECCV 2020

ActivityNet
