Conditional Generative Refinement Adversarial Networks for Unbalanced Medical Image Semantic Segmentation

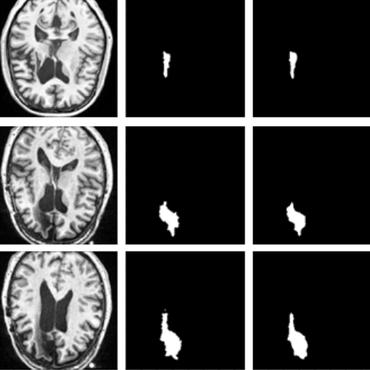

We propose a new generative adversarial architecture to mitigate the imbalanced data problem in medical image semantic segmentation, where the majority of pixels belong to healthy regions and only a few belong to lesion or non-healthy regions. A model trained on imbalanced data tends to be biased toward healthy data, which is undesirable in clinical applications, and the outputs predicted by such networks have high precision and low sensitivity. Our conditional generative refinement network has three components: a generative network, a discriminative network, and a refinement network, which together mitigate the imbalance through ensemble learning. The generative network learns to segment at the pixel level using feedback from the discriminative network based on the true positive and true negative maps. The refinement network, in turn, learns to predict the false positive and false negative masks produced by the generative network, which is of particular value in medical applications. The final semantic segmentation masks are then composed from the outputs of the three networks. The proposed architecture shows state-of-the-art results on LiTS-2017 for liver lesion segmentation and on two microscopic cell segmentation datasets, MDA231 and PhC-HeLa. We also achieve competitive results on BraTS-2017 for brain tumour segmentation.
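To make the roles of the four error maps concrete, the following is a minimal NumPy sketch, not the authors' implementation. The function names (`error_maps`, `compose`, `precision_sensitivity`) are hypothetical, and the composition rule (drop predicted false positives, add back predicted false negatives) is an assumption about one plausible way to combine the outputs; the abstract does not spell out the exact rule.

```python
import numpy as np

def error_maps(pred, truth):
    """Split a binary prediction into TP/TN/FP/FN pixel maps."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    return {
        "tp": pred & truth,     # lesion pixels correctly found
        "tn": ~pred & ~truth,   # healthy pixels correctly left out
        "fp": pred & ~truth,    # healthy pixels wrongly marked as lesion
        "fn": ~pred & truth,    # lesion pixels that were missed
    }

def compose(pred, fp_hat, fn_hat):
    """Assumed composition rule: remove predicted FPs, add predicted FNs."""
    return (pred.astype(bool) & ~fp_hat.astype(bool)) | fn_hat.astype(bool)

def precision_sensitivity(pred, truth):
    """Pixel-level precision and sensitivity (recall) of a binary mask."""
    m = error_maps(pred, truth)
    tp, fp, fn = (int(m[k].sum()) for k in ("tp", "fp", "fn"))
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    return precision, sensitivity

# Toy 4x4 example: a 2x2 lesion surrounded by healthy pixels.
truth = np.array([[0, 0, 0, 0],
                  [0, 1, 1, 0],
                  [0, 1, 1, 0],
                  [0, 0, 0, 0]])
# A segmenter that finds one lesion pixel and raises one false alarm.
pred = np.array([[0, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 0],
                 [0, 0, 0, 1]])

maps = error_maps(pred, truth)
# With an ideal refinement output (the true FP and FN maps),
# the composed mask recovers the ground truth exactly.
refined = compose(pred, maps["fp"], maps["fn"])
```

In this sketch the refinement output is taken to be the exact error maps, which is the best case; a trained refinement network would only approximate them, so the composed mask would improve on the generator's mask to the extent those approximations are accurate.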


BraTS 2017