CLIPSelf: Vision Transformer Distills Itself for Open-Vocabulary Dense Prediction

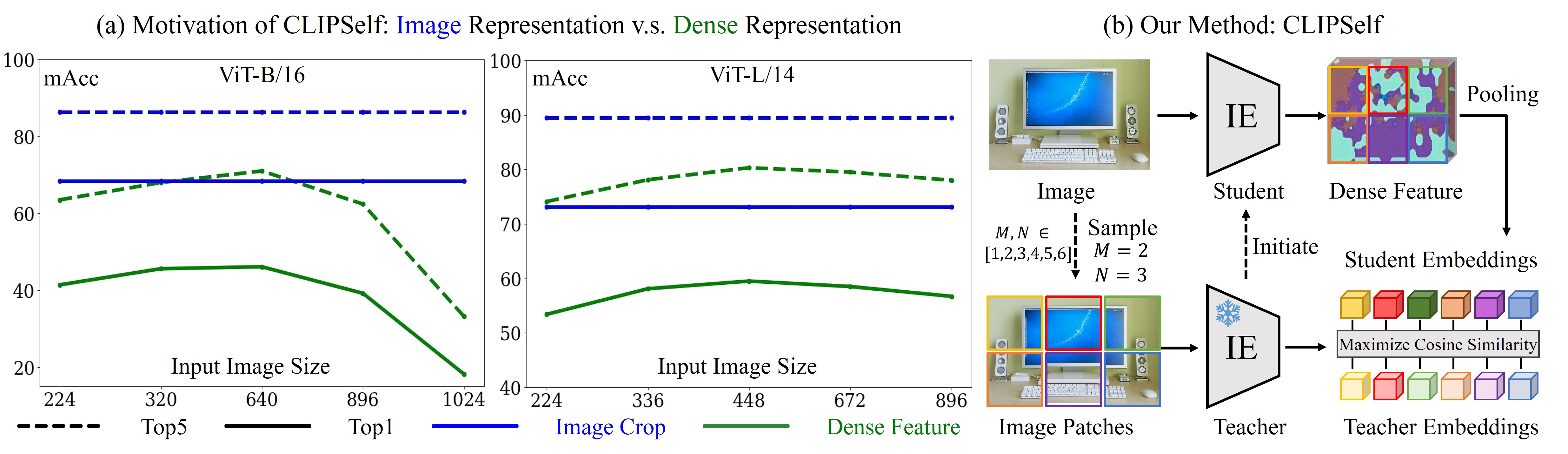

Open-vocabulary dense prediction tasks, including object detection and image segmentation, have been advanced by the success of Contrastive Language-Image Pre-training (CLIP). CLIP models, particularly those incorporating vision transformers (ViTs), have exhibited remarkable generalization ability in zero-shot image classification. However, when the vision-language alignment of CLIP is transferred from global image representations to local region representations for open-vocabulary dense prediction, CLIP ViTs suffer from the domain shift from full images to local image regions. In this paper, we present an in-depth analysis of region-language alignment in CLIP models, which is essential for downstream open-vocabulary dense prediction tasks. We then propose an approach named CLIPSelf, which adapts the image-level recognition ability of a CLIP ViT to local image regions without needing any region-text pairs. CLIPSelf enables a ViT to distill itself by aligning a region representation extracted from its dense feature map with the image-level representation of the corresponding image crop. With the enhanced CLIP ViTs, we achieve new state-of-the-art performance on open-vocabulary object detection, semantic segmentation, and panoptic segmentation across various benchmarks. Models and code are released at https://github.com/wusize/CLIPSelf.
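The self-distillation objective described in the abstract can be illustrated with a small sketch. This is not the released implementation: the function names are hypothetical, plain mean pooling stands in for whatever region-pooling operator the method actually uses, and the loss form (one minus cosine similarity) is an assumption, since the abstract only says the two representations are "aligned". The dense feature map plays the student role, and the image-level embedding of each crop plays the teacher role.

```python
import math

def mean_pool_region(feature_map, box):
    """Average the patch features inside box = (row0, col0, row1, col1), end-exclusive.

    Assumption: simple mean pooling over a grid of patch features.
    """
    r0, c0, r1, c1 = box
    cells = [feature_map[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    dim = len(cells[0])
    return [sum(v[d] for v in cells) / len(cells) for d in range(dim)]

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def self_distillation_loss(feature_map, boxes, crop_embeddings):
    """Mean of (1 - cosine similarity) over all regions.

    feature_map: H x W grid of patch feature vectors (student side).
    boxes: one box per region, in patch-grid coordinates.
    crop_embeddings: image-level embedding of each cropped region (teacher side).
    Assumption: the loss form is illustrative, not taken from the source.
    """
    losses = []
    for box, teacher in zip(boxes, crop_embeddings):
        student = mean_pool_region(feature_map, box)
        losses.append(1.0 - cosine_similarity(student, teacher))
    return sum(losses) / len(losses)

# Toy example: a 2 x 2 grid of 2-dimensional patch features.
toy_map = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [0.0, 1.0]],
]
toy_boxes = [(0, 0, 1, 2), (1, 0, 2, 2)]   # top row, bottom row
toy_teachers = [[2.0, 0.0], [0.0, 3.0]]    # crop embeddings pointing the same way
print(self_distillation_loss(toy_map, toy_boxes, toy_teachers))
```

In the toy example each pooled region points in the same direction as its crop embedding, so the loss is at its minimum. Swapping the two teacher vectors makes every pair orthogonal and raises the loss. Because the supervision comes from the model's own image-level embeddings of the crops, no region-text pairs are required, as the abstract states.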


Datasets

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Open Vocabulary Panoptic Segmentation | ADE20K | CLIPSelf | PQ | 23.7 | #3 |
| Open Vocabulary Semantic Segmentation | ADE20K-150 | CLIPSelf | mIoU | 34.5 | #4 |
| Open Vocabulary Semantic Segmentation | ADE20K-847 | CLIPSelf | mIoU | 12.4 | #8 |
| Open Vocabulary Object Detection | LVIS v1.0 | CLIPSelf | AP novel-LVIS base training | 34.9 | #3 |
| Open Vocabulary Object Detection | MSCOCO | CLIPSelf | AP 0.5 | 44.3 | #5 |
| Open Vocabulary Semantic Segmentation | PASCAL Context-59 | CLIPSelf | mIoU | 62.3 | #3 |

MS COCO

ADE20K

LVIS

PASCAL Context
