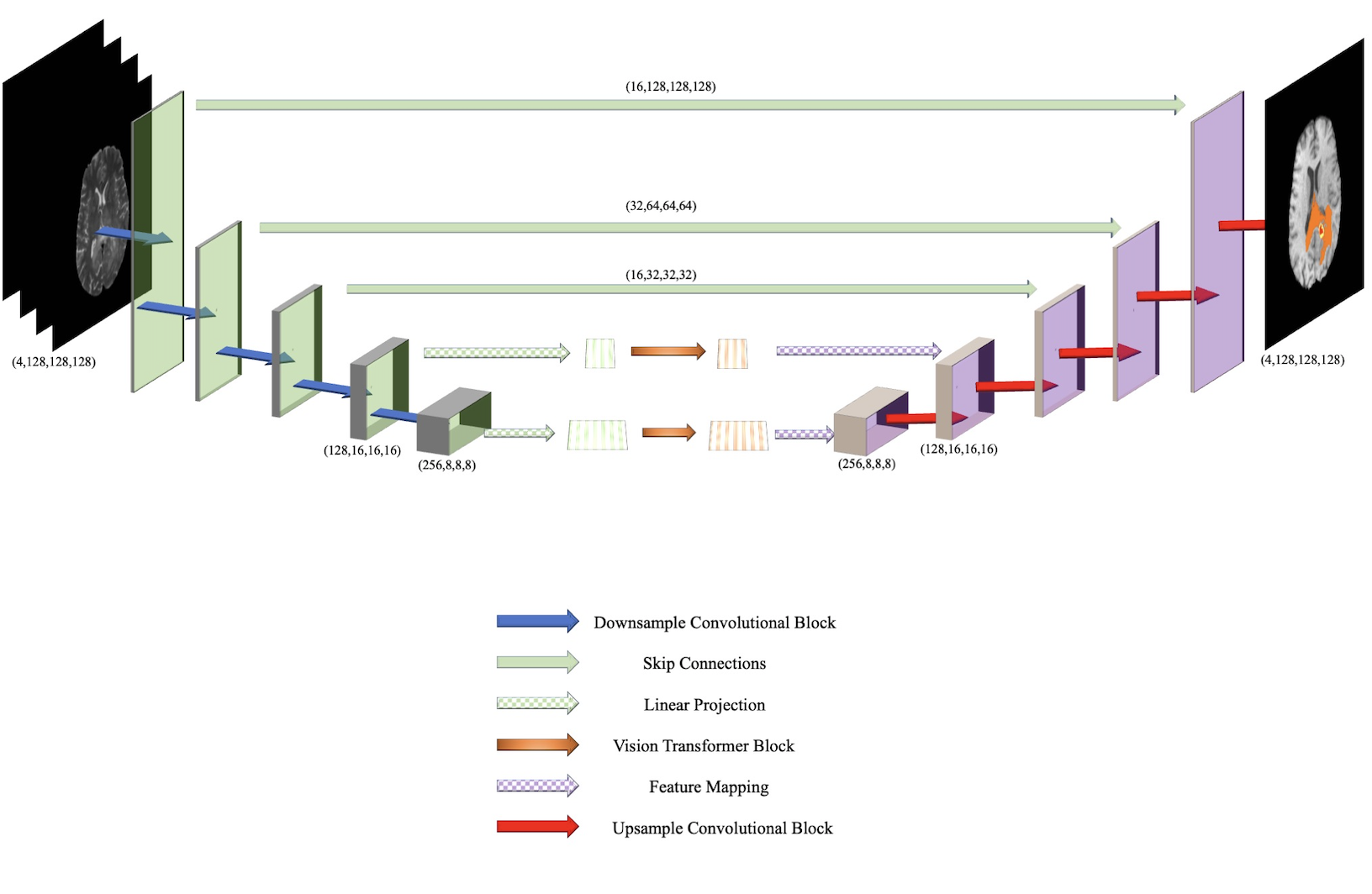

BiTr-Unet: a CNN-Transformer Combined Network for MRI Brain Tumor Segmentation

Convolutional neural networks (CNNs) have achieved remarkable success in automatically segmenting organs or lesions on 3D medical images. Recently, vision transformer networks have exhibited exceptional performance in 2D image classification tasks. Compared with CNNs, transformer networks have the appealing advantage of extracting long-range features through their self-attention mechanism. We therefore propose a combined CNN-Transformer model, called BiTr-Unet, with specific modifications for brain tumor segmentation on multi-modal MRI scans. On the BraTS2021 validation dataset, BiTr-Unet achieves median Dice scores of 0.9335, 0.9304, and 0.8899 and median Hausdorff distances of 2.8284, 2.2361, and 1.4142 for the whole tumor, tumor core, and enhancing tumor, respectively. On the BraTS2021 testing dataset, the corresponding results are 0.9257, 0.9350, and 0.8874 for Dice score and 3, 2.2361, and 1.4142 for Hausdorff distance. The code is publicly available at https://github.com/JustaTinyDot/BiTr-Unet.
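For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of how it can be computed for a single binary 3D mask. It is not taken from the BiTr-Unet repository or the BraTS evaluation pipeline: the function name `dice_score`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
import numpy as np


def dice_score(pred, target):
    """Dice overlap between two binary masks: 2|A ∩ B| / (|A| + |B|).

    Hypothetical helper for illustration only; not the official BraTS metric code.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Assumption: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * np.logical_and(pred, target).sum() / denom


# Toy 3D volumes standing in for one tumor sub-region.
pred = np.zeros((4, 4, 4), dtype=bool)
pred[1:3, 1:3, 1:3] = True      # 8 predicted voxels

target = np.zeros((4, 4, 4), dtype=bool)
target[1:3, 1:3, 1:4] = True    # 12 ground-truth voxels, 8 of them shared

print(dice_score(pred, target))  # 2 * 8 / (8 + 12) = 0.8
```

In a multi-class setting such as whole tumor, tumor core, and enhancing tumor, a score like this would be computed once per region on the corresponding binary mask, and the per-case values then summarized (for example, by their median).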
