Benchmarking Robustness in Object Detection: Autonomous Driving when Winter is Coming

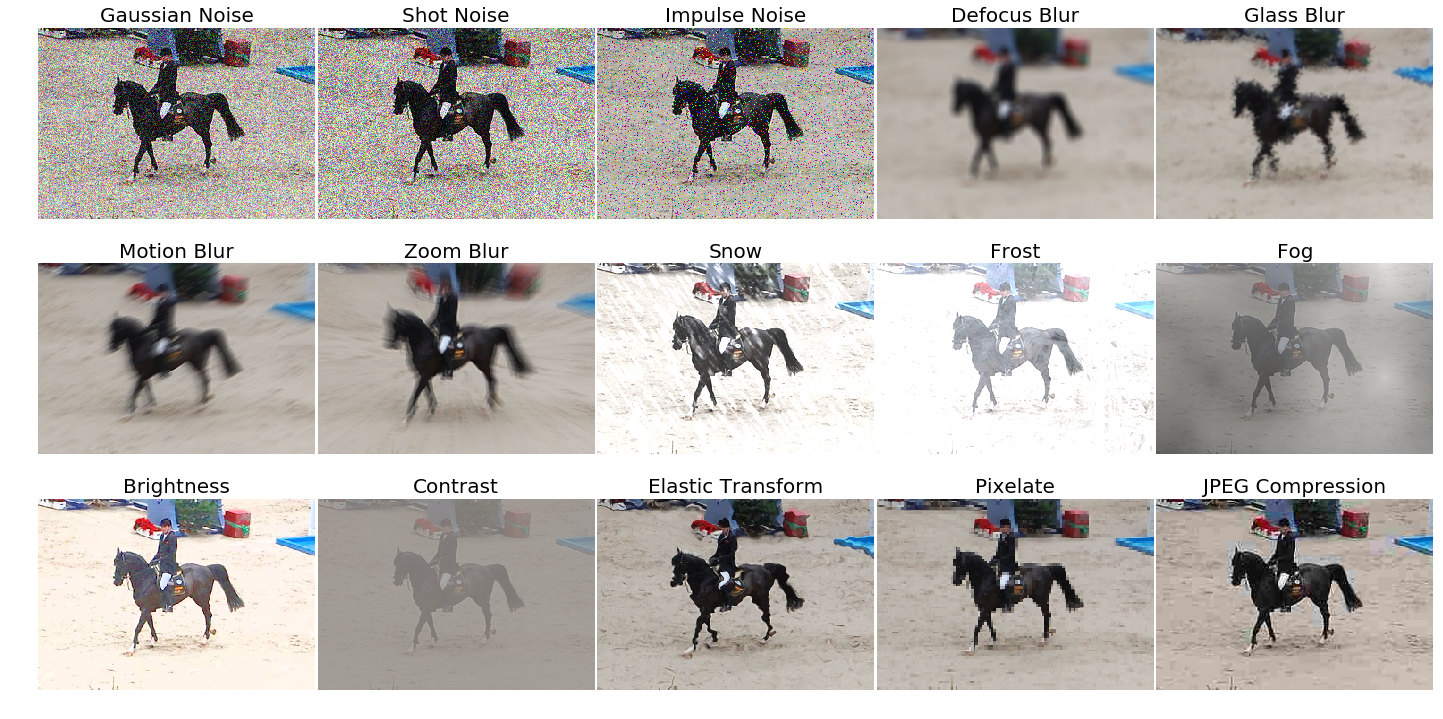

The ability to detect objects regardless of image distortions or weather conditions is crucial for real-world applications of deep learning such as autonomous driving. Here we provide an easy-to-use benchmark to assess how object detection models perform when image quality degrades. The three resulting benchmark datasets, termed Pascal-C, Coco-C and Cityscapes-C, contain a large variety of image corruptions. We show that a range of standard object detection models suffer a severe performance loss on corrupted images (down to 30-60% of the original performance). However, a simple data augmentation trick, stylizing the training images, leads to a substantial increase in robustness across corruption types, severities and datasets. We envision our comprehensive benchmark being used to track future progress towards building robust object detection models. Benchmark, code and data are publicly available.
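To make the "30-60% of the original performance" figure concrete, the following is a minimal sketch of how such a relative score could be computed from per-corruption results. It assumes the detection metric is averaged first over severity levels and then over corruption types, and that the result is divided by the score on clean images. The function names, the dictionary layout and all numbers are hypothetical and chosen only for illustration; they are not taken from the benchmark's code or results.

```python
from statistics import mean


def mean_performance_under_corruption(scores):
    """Average a metric over severity levels, then over corruption types.

    `scores` maps a corruption name to a list of metric values,
    one value per severity level (hypothetical layout).
    """
    if not scores:
        raise ValueError("scores must contain at least one corruption type")
    per_corruption = [mean(per_severity) for per_severity in scores.values()]
    return mean(per_corruption)


def relative_performance(clean_score, scores):
    """Ratio of the mean corrupted score to the clean score."""
    if clean_score <= 0:
        raise ValueError("clean_score must be positive")
    return mean_performance_under_corruption(scores) / clean_score


# Made-up numbers, for illustration only (not results from the benchmark).
example_scores = {
    "gaussian_noise": [30.0, 20.0, 10.0],
    "snow": [28.0, 22.0, 16.0],
}
example_clean_score = 40.0

if __name__ == "__main__":
    ratio = relative_performance(example_clean_score, example_scores)
    print(f"relative performance under corruption: {ratio:.1%}")
```

With these made-up inputs the model retains roughly half of its clean performance, which would fall inside the range quoted above.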

MS COCO

Cityscapes

PASCAL VOC 2007