Masking Augmentation for Supervised Learning

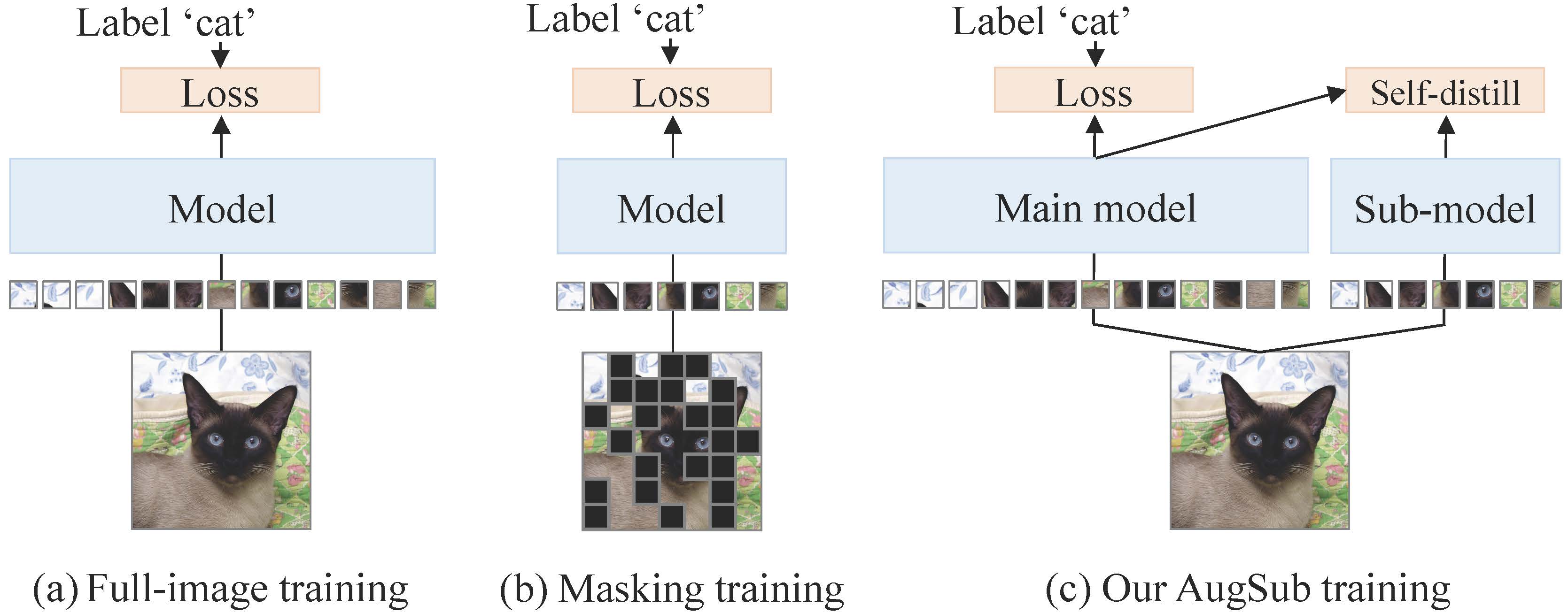

Pre-training using random masking has emerged as a novel trend in training techniques. However, supervised learning faces a challenge in adopting masking augmentations, primarily due to unstable training. In this paper, we propose a novel way to incorporate masking augmentations, dubbed Masked Sub-model (MaskSub). MaskSub consists of a main model and a sub-model; while the former enjoys conventional training recipes, the latter leverages the benefit of strong masking augmentations in training. MaskSub addresses the challenge by mitigating adverse effects through a relaxed loss function similar to a self-distillation loss. Our analysis shows that MaskSub improves performance, with the training loss converging even faster than in regular training, which suggests that our method facilitates training. We further validate MaskSub across diverse training recipes and models, including DeiT-III, MAE fine-tuning, CLIP fine-tuning, ResNet, and Swin Transformer. Our results show that MaskSub consistently provides significant performance gains in all cases. MaskSub provides a practical and effective solution for introducing additional regularization under various training recipes. Code is available at https://github.com/naver-ai/augsub
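The abstract describes the loss only as "relaxed" and "similar to a self-distillation loss", so the following is a minimal sketch under stated assumptions rather than the authors' implementation. It assumes the main model is trained with cross-entropy against the label, the sub-model (fed a randomly masked input) is trained against the main model's softmax output as a soft target, and the two terms are weighted equally. The function names (`random_token_mask`, `masksub_style_loss`), the 50% mask ratio, and the 0.5 weights are hypothetical choices made for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_probs):
    """Cross-entropy between a target distribution and softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_z) for z, t in zip(logits, target_probs))


def random_token_mask(num_tokens, mask_ratio, rng):
    """Return the sorted indices of tokens kept after random masking.

    Hypothetical helper: the sub-model would see only these tokens.
    """
    num_keep = max(1, round(num_tokens * (1.0 - mask_ratio)))
    return sorted(rng.sample(range(num_tokens), num_keep))


def masksub_style_loss(main_logits, sub_logits, label_probs):
    """Assumed combination of the main-model and sub-model losses.

    main_logits: output on the unmasked input.
    sub_logits:  output of the same network on the masked input.
    label_probs: ground-truth label as a probability vector.
    """
    # Main model: conventional supervised loss against the label.
    main_loss = cross_entropy(main_logits, label_probs)
    # Sub-model: relaxed target taken from the main model's prediction.
    # In an autodiff framework this target would be treated as a constant.
    soft_target = softmax(main_logits)
    sub_loss = cross_entropy(sub_logits, soft_target)
    # Equal weighting is an assumption of this sketch.
    return 0.5 * (main_loss + sub_loss)


if __name__ == "__main__":
    rng = random.Random(0)
    kept = random_token_mask(num_tokens=196, mask_ratio=0.5, rng=rng)
    print(len(kept), "of 196 tokens kept for the sub-model")
    print(masksub_style_loss([2.0, 0.5, -1.0], [1.0, 0.8, -0.5], [1.0, 0.0, 0.0]))
```

Using the main model's prediction as the sub-model's target, instead of the hard label, is what makes the objective "relaxed" in this sketch: a heavily masked input is not forced to match a label it may no longer contain enough evidence for.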


Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Image Classification | ImageNet | ViT-H @224 (DeiT-III + AugSub) | Top 1 Accuracy | 85.7% | #200 |
| Image Classification | ImageNet | ViT-H @224 (DeiT-III + AugSub) | Number of params | 632M | #942 |
| Image Classification | ImageNet | ViT-L @224 (DeiT-III + AugSub) | Top 1 Accuracy | 85.3% | #231 |
| Image Classification | ImageNet | ViT-L @224 (DeiT-III + AugSub) | Number of params | 304M | #913 |
| Image Classification | ImageNet | ViT-B @224 (DeiT-III + AugSub) | Top 1 Accuracy | 84.2% | #313 |
| Image Classification | ImageNet | ViT-B @224 (DeiT-III + AugSub) | Number of params | 86.6M | #821 |
| Self-Supervised Image Classification | ImageNet (finetuned) | MAE + AugSub finetune (ViT-H/14) | Number of params | 632M | #7 |
| Self-Supervised Image Classification | ImageNet (finetuned) | MAE + AugSub finetune (ViT-H/14) | Top 1 Accuracy | 87.2% | #9 |
| Self-Supervised Image Classification | ImageNet (finetuned) | MAE + AugSub finetune (ViT-B/16) | Number of params | 87M | #34 |
| Self-Supervised Image Classification | ImageNet (finetuned) | MAE + AugSub finetune (ViT-B/16) | Top 1 Accuracy | 83.9% | #40 |
| Self-Supervised Image Classification | ImageNet (finetuned) | MAE + AugSub finetune (ViT-L/16) | Number of params | 304M | #23 |
| Self-Supervised Image Classification | ImageNet (finetuned) | MAE + AugSub finetune (ViT-L/16) | Top 1 Accuracy | 86.1% | #17 |

Datasets

CIFAR-10

ImageNet

MS COCO

CIFAR-100

GLUE

Oxford 102 Flower

ADE20K

Stanford Cars

iNaturalist